Source: 半導體行業觀察 (Semiconductor Industry Observation)

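Many rivals of Nvidia (NVDA.US) want to chip away at its market dominance. One name that keeps coming up is Broadcom (AVGO.US), and a closer look shows why. Its XPUs draw less than 600 watts, making them among the most power-efficient accelerators in the industry.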

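In a note to investors this week, Bank of America said to "consider it a top AI pick." It was not talking about Nvidia, even though Bank of America regards Green Team as the undisputed winner of the GPU wars. It was referring to Broadcom, which recently announced a 10-for-1 stock split and better-than-expected revenue in its second-quarter earnings report. The company expects fiscal 2024 sales to come in above the expected $51 billion.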

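Bank of America analysts forecast the company's fiscal 2025 sales at $59.9 billion, up 16% year over year. The analysts cited efficiency gains from Broadcom's acquisition of VMware last year, higher sales, and potential growth in custom chips as the key indicators behind their 2025 forecast. If Bank of America's forecast is right, Broadcom's market capitalization could put it in the trillion-dollar club alongside several other tech giants, including Microsoft, Apple, Nvidia, Amazon, Alphabet, and Meta.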

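To get there, it has to compete with Nvidia, whose market capitalization currently stands at $3.4 trillion against Broadcom's $804 billion. In addition, Nvidia's CUDA architecture has gained a near-monopoly on AI workloads at hyperscalers such as Meta, Microsoft, Google, and Amazon, which are its largest customers. Nvidia has a vast ecosystem of software, tools, and libraries that further locks in customers and sets a high barrier to entry for competitors such as Broadcom.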

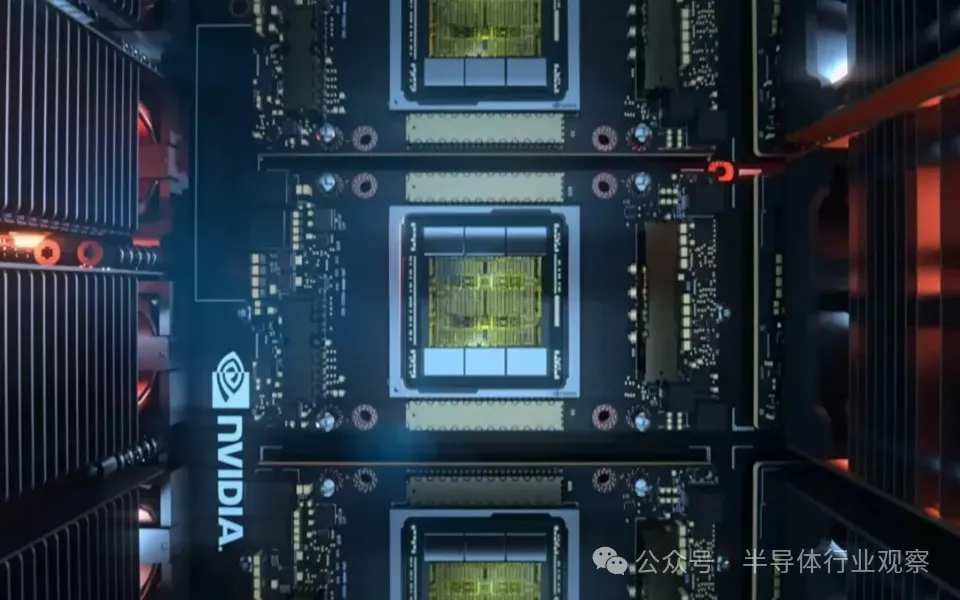

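These companies all want to reduce their dependence on Nvidia, so Broadcom is positioning itself as the alternative, supplying cloud and AI companies with custom AI accelerator chips, which it calls XPUs. At a recent event, Broadcom said demand for its products is snowballing, noting that two years ago the most advanced cluster had 4,096 XPUs. In 2023, it built a cluster with more than 10,000 XPU nodes, which required two tiers of Tomahawk or Jericho switches. The company's roadmap is to scale this to more than 30,000 and eventually to one million.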

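One advantage Broadcom highlights is the power efficiency of its XPUs. At less than 600 watts, they are among the lowest-power AI accelerators in the industry.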

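Broadcom also takes a different view of the chip market, saying it is shifting from CPU-centric to connectivity-centric. The emergence of alternative processors beyond the CPU, such as GPUs, NPUs, and LPUs, requires high-speed connectivity, which is Broadcom's specialty.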

Editor: tolk