① Starting with HBM4, SK Hynix is preparing to use TSMC's advanced logic process to manufacture the base die; ② In the past two months, Micron Technology and SK Hynix have each announced that their HBM3E chips have entered mass production; ③ Samsung Electronics also released the industry's highest-capacity HBM3E chip in February this year, which is being qualified by Nvidia.

Cailian Press, April 19 (Editor Shi Zhengcheng): SK Hynix, the Nvidia supplier that announced mass production of its new-generation HBM3E high-bandwidth memory chips just a month ago, is already moving on to the next generation of products.

On Friday local time, SK Hynix and TSMC announced that they have signed a memorandum of understanding to integrate HBM with the logic layer through advanced packaging technology. The two companies will jointly develop the sixth-generation HBM product (HBM4), which is expected to enter mass production in 2026.

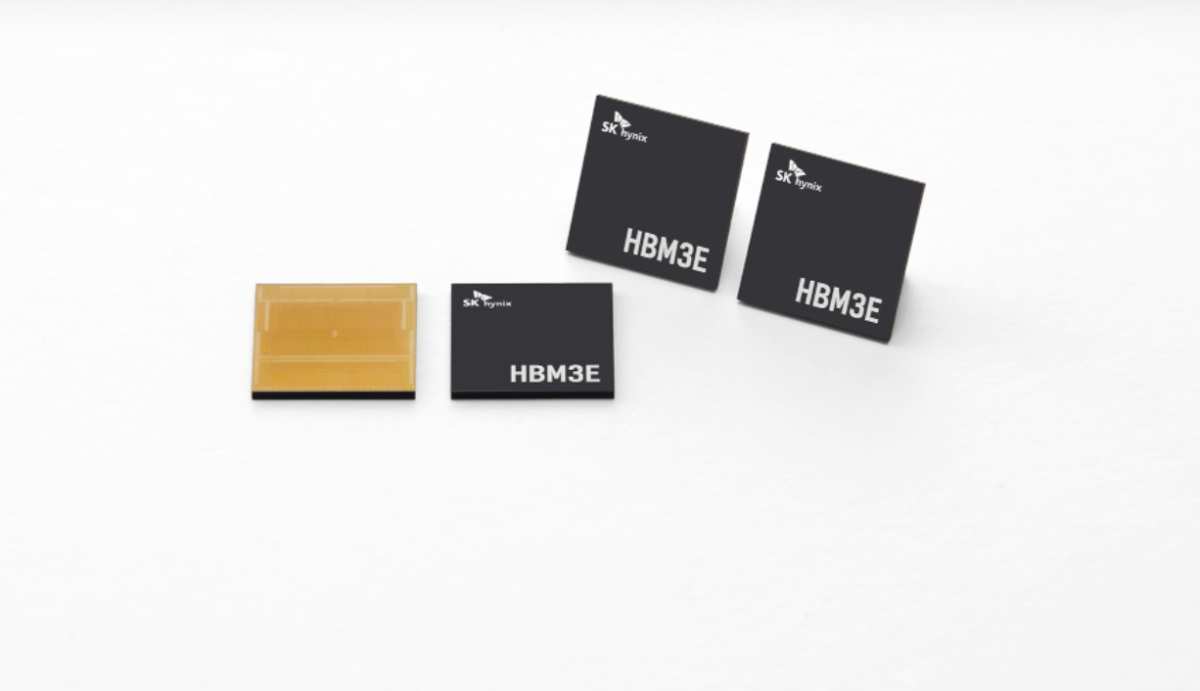

(Source: SK Hynix)

Background: What is high-bandwidth memory?

High Bandwidth Memory (HBM) was developed because the bandwidth of traditional DDR memory falls short of what high-performance computing requires. By stacking memory dies vertically and connecting them with through-silicon vias (TSVs), HBM significantly increases memory bandwidth.

SK Hynix first announced the successful development of HBM technology in 2013, and the chip later known as HBM1 first reached the market in AMD's Radeon R9 Fury graphics card. The HBM family has since added HBM2, HBM2E, HBM3, and HBM3E. According to SK Hynix, HBM3E improves heat dissipation by 10% and processes data at up to 1.18 TB per second.
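As a rough sanity check on the 1.18 TB per second figure, peak bandwidth per stack can be estimated as interface width times per-pin data rate. The sketch below assumes a 1024-bit interface per stack and a 9.2 Gb/s pin rate; neither number appears in this article, so treat both as assumptions.

```python
# Assumed figures (not stated in the article): a 1024-bit interface per HBM
# stack and a 9.2 Gb/s per-pin data rate for HBM3E.
INTERFACE_WIDTH_BITS = 1024
PIN_RATE_GBIT_PER_S = 9.2


def stack_bandwidth_tb_per_s(width_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Estimate peak bandwidth of one stack in terabytes per second (decimal units)."""
    gigabits_per_second = width_bits * pin_rate_gbit_per_s
    gigabytes_per_second = gigabits_per_second / 8  # 8 bits per byte
    return gigabytes_per_second / 1000  # 1000 GB per TB


bandwidth = stack_bandwidth_tb_per_s(INTERFACE_WIDTH_BITS, PIN_RATE_GBIT_PER_S)
print(f"{bandwidth:.2f} TB/s")
```

Under these assumptions the estimate comes to about 1.18 TB/s, in line with the figure SK Hynix quotes.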

(Finished HBM3E chip; source: SK Hynix)

Each generation has also brought a sharp jump in specifications. For example, according to Nvidia's official specifications, the H100 is mainly equipped with 80GB of HBM3, while the H200, which uses HBM3E, raises memory capacity by about 76% to 141GB.
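The size of that jump is easy to verify from the two capacities quoted above. A minimal sketch, using only the 80GB and 141GB figures from the article (the variable names are illustrative):

```python
# Memory capacities quoted in the article, in gigabytes.
H100_MEMORY_GB = 80
H200_MEMORY_GB = 141

# Ratio of the newer product's capacity to the older one's.
ratio = H200_MEMORY_GB / H100_MEMORY_GB
# Relative increase expressed as a percentage.
increase_pct = (ratio - 1) * 100

print(f"H200 has {ratio:.2f}x the memory of H100 ({increase_pct:.0f}% more)")
```

The ratio works out to roughly 1.76, so the increase is large but short of a full doubling.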

Why partner with TSMC?

Prior to this cooperation, all SK Hynix HBM chips were built on the company's own manufacturing process, including the base die at the bottom of the package, on top of which multiple layers of DRAM dies are stacked.

(HBM3E demo video, source: SK Hynix)

The two companies said in the announcement that, starting with HBM4, they plan to use TSMC's advanced logic process to manufacture the base die. Adding more functions through the finer process lets SK Hynix produce customized HBM products that better meet customer needs for performance and power efficiency. The two sides also plan to cooperate on optimizing the integration of SK Hynix's HBM with TSMC's proprietary CoWoS technology (2.5D packaging).

Through its cooperation with TSMC, SK Hynix plans to begin mass production of HBM4 chips in 2026. As Nvidia's main supplier, SK Hynix is currently supplying HBM3 chips to the AI leader and will begin delivering HBM3E chips this year.

For TSMC, AI servers are also the strongest driver of the company's results at a time when consumer electronics are weak and automotive demand is falling. TSMC expects total capital expenditure for fiscal 2024 to be between US$28 billion and US$32 billion, with about 10% going toward advanced packaging capacity.

The Big Three battle fiercely for the HBM market

According to publicly available information, only SK Hynix, Micron Technology, and Samsung Electronics are currently capable of producing HBM chips compatible with AI computing systems such as the H100. Right now, these three companies are locked in fierce competition across the Pacific.

About half a month before SK Hynix, Micron Technology announced that it had started mass production of HBM3E chips. In February of this year, Samsung, which is stepping up the expansion of its HBM production capacity, released the industry's highest-capacity HBM3E chip, the 36GB HBM3E 12H. Nvidia said last month that it is qualifying Samsung's chips for use in AI server products.

Research firm TrendForce estimates that SK Hynix will hold 52.5% of the HBM market in 2024, with Samsung and Micron at 42.4% and 5.1%, respectively. In addition, HBM's share of dynamic random access memory (DRAM) industry revenue exceeded 8% in 2023 and is expected to reach 20% in 2024.

Regarding the partnership between SK Hynix and TSMC, Allen Cheng, director of the PricewaterhouseCoopers Hi-Tech Industry Research Center, called it a “smart move.” He said, “TSMC has almost all of the key customers developing cutting-edge AI chips. Further deepening the partnership means that SK Hynix can attract more customers to use the company's HBM.”