Source: Securities Times

In the early morning of March 26, Google officially launched its new generation large language model, Gemini 2.5.

$Alphabet-C (GOOG.US)$ Gemini 2.5 is defined as the "smartest AI model" the company has developed to date, with the Gemini 2.5 Pro experimental version significantly outperforming OpenAI's o3-mini, Claude3.7 Sonnet, Grok-3, and DeepSeek-R1 in multiple benchmark tests. Alphabet-C DeepMind's Chief Technology Officer Koray Kavukcuoglu stated that Gemini 2.5 represents the next step towards Google's goal of making AI "smarter and more capable of reasoning."

Notably, just about an hour after Alphabet-C released Gemini 2.5, OpenAI urgently launched the most advanced image generator to date, the GPT-4o image generation technology. According to reports, the GPT-4o image generation features accurate text rendering, strict adherence to prompt instructions, deep calls to the 4o knowledge base and conversation context - including re-creation of uploaded images or transforming them into visual inspiration. OpenAI founder and CEO Sam Altman even generated a cartoon image live using GPT-4o.

Notably, just about an hour after Alphabet-C released Gemini 2.5, OpenAI urgently launched the most advanced image generator to date, the GPT-4o image generation technology. According to reports, the GPT-4o image generation features accurate text rendering, strict adherence to prompt instructions, deep calls to the 4o knowledge base and conversation context - including re-creation of uploaded images or transforming them into visual inspiration. OpenAI founder and CEO Sam Altman even generated a cartoon image live using GPT-4o.

Google's new reasoning model excels in its encoding reasoning capabilities.

According to Google, the company has long been exploring how to make AI smarter and more capable of reasoning through techniques such as reinforcement learning and chain-of-thought prompting. In December last year, Google released the Gemini 2.0 Flash Thinking model, a multimodal reasoning model with fast and transparent processing capabilities. On January 22 of this year, Google officially launched an enhanced version of its Gemini 2.0 Flash Thinking reasoning model.

The latest release of the Gemini 2.5 series model is Google's attempt to challenge OpenAI's "o" series reasoning models. As the most advanced complex task model in the series, the Gemini 2.5 Pro experimental version has comprehensively outperformed OpenAI o3-mini, Claude 3.7 Sonnet, Grok-3, and DeepSeek-R1 in multiple benchmark tests, and ranks first with a significant advantage on LMArena (an open-source platform for evaluating large language models). However, Google has not provided comparisons of Gemini 2.5 Pro with models such as OpenAI o1, OpenAI o1-Pro, and OpenAI o3 in benchmark tests.

In terms of coding performance, Gemini 2.5 has made a significant leap compared to 2.0, excelling in creating visually appealing web applications and proxy code applications, as well as code conversion and editing. In the industry-standard SWE-BenchVerified for proxy code evaluation, Gemini 2.5 Pro achieved a score of 63.8% using custom proxy settings.

According to a demo video released by Alphabet-C, Gemini 2.5 Pro can utilize its inference capabilities to create video games by generating executable code from single-line prompts. For example, it can design a dinosaur mini-game, generating pixelated dinosaur images and interesting game backgrounds given a specified programming language.

In terms of reasoning ability, Gemini 2.5 Pro leads in a series of benchmark tests requiring advanced reasoning. In the "Final Exam of Humanity" (Note: "Final Exam of Humanity" is a dataset designed by hundreds of subject experts aimed at capturing the forefront of human knowledge and reasoning), it also achieved the highest score of 18.8% among models that did not use tools, which is currently a state-of-the-art performance.

In addition, Gemini 2.5 Pro has native multimodal processing capabilities and an ultra-long context window, supporting multimodal inputs of text, images, audio, video, and code, with a context window of up to 1 million tokens (approximately 0.75 million words), capable of parsing the complete "The Lord of the Rings" series text, and it will be upgraded to 2 million tokens in the future.

OpenAI urgently launched the 4o image generation feature.

Just an hour after Alphabet-C launched its most powerful reasoning model Gemini 2.5 late at night, OpenAI also quickly rolled out the brand-new image generation feature of GPT-4o.

Prior to this, the text-to-image models under OpenAI were mainly the DALL-E series. Unlike DALL-E, this new image generator from OpenAI is based on its native multimodal GPT-4o model. Ultraman announced during a live event that the native image generation feature is based on the GPT-4o model, eliminating the need to call a separate DALL-E text-to-image model.

According to reports, based on the multimodal capabilities of GPT-4o, ChatGPT can more accurately follow instructions during image generation and render text on images more precisely, easily creating scenes that combine reality and fantasy. Currently, this feature has been gradually rolled out as the default image generator in ChatGPT to Plus, Pro, Team, and free users, with enterprise and education users soon to be allowed access.

According to a case released by OpenAI, the image generation function of GPT-4o can generate handwritten text, accurately understanding every detail of the prompt, and the image clarity can rival that of high-definition photos.

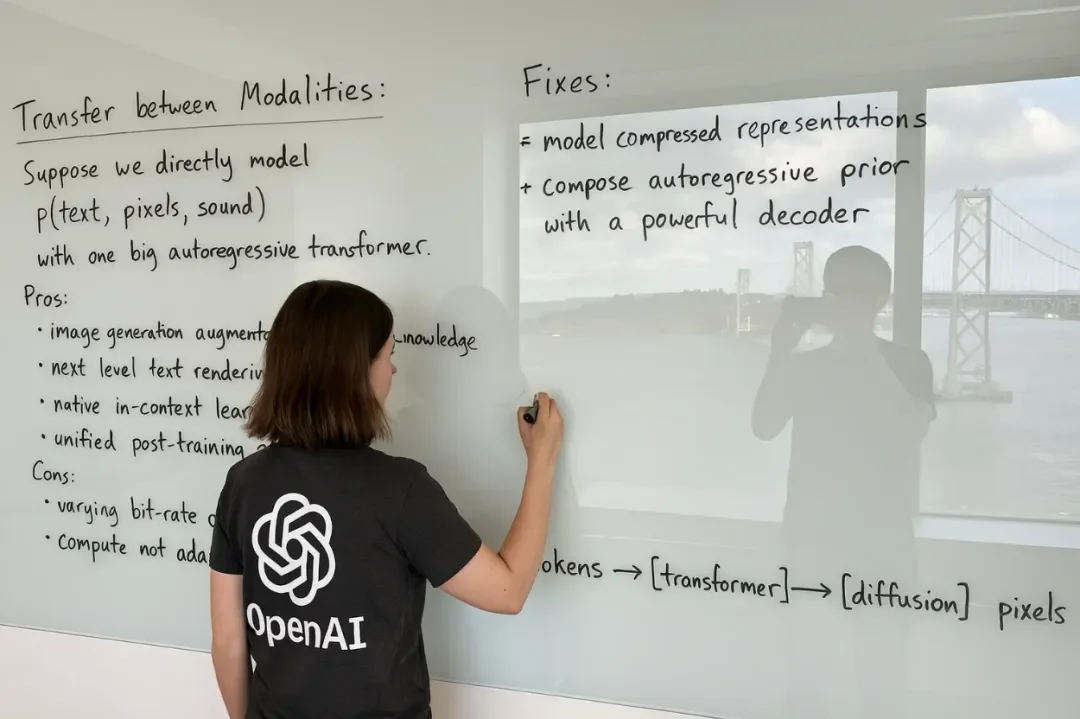

For example, when the prompt "This is a wide-angle image of a glass whiteboard taken with a phone, shot in a room overlooking the Bay Bridge. In the view, a woman can be seen writing, wearing a t-shirt with a large OpenAI symbol. The handwriting looks natural but a bit messy, and we can see the photographer's reflection" is input, the final generated image will reflect details such as "Bay Bridge," "t-shirt with a large OpenAI symbol," and "photographer's reflection."

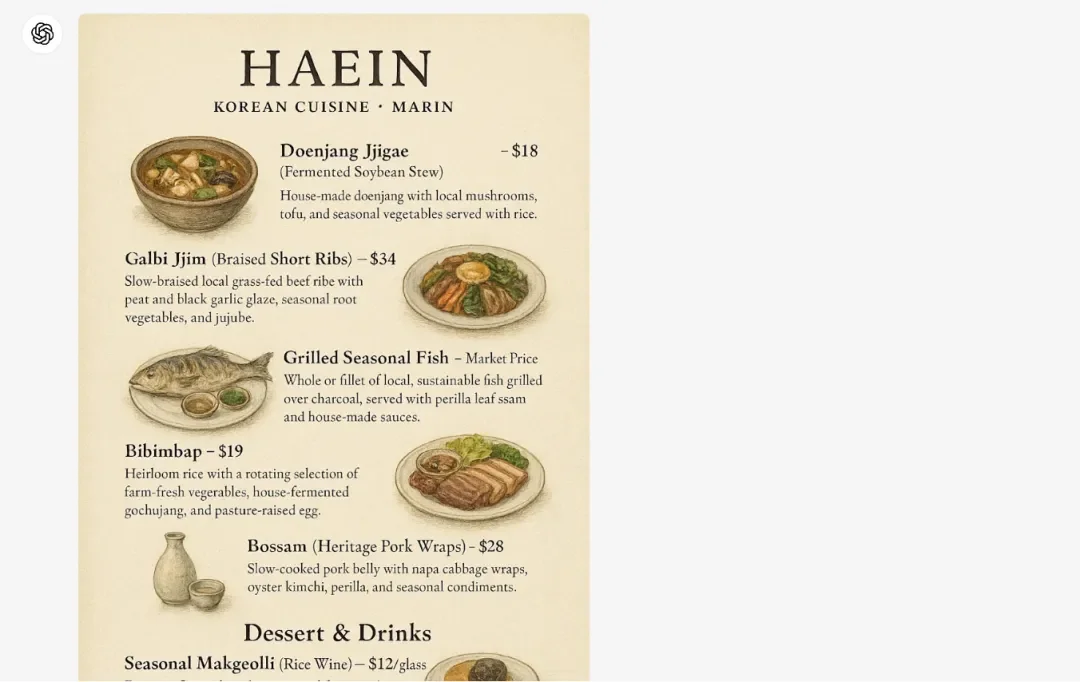

The GPT-4o image generation function can also become a practical productivity tool. For instance, to design a menu image for Restaurants, the user specifies the names of different dishes, prices, and key features in the prompt, and GPT-4o can generate a compliant, commercially usable menu image.

However, OpenAI also admits that the model is not perfect and still has multiple limitations in areas such as cropping, hallucination, and precise drawing. For example, in cases where there is little contextual information, the image generation function may fabricate information, and in more complex situations, it struggles to render the Palatino language and produce erroneous characters. OpenAI stated that it will address these issues through model improvements after the initial release.

On one hand, Alphabet-C has released the most intelligent reasoning model to date, challenging OpenAI's "o" series reasoning models; on the other hand, OpenAI has launched the GPT-4o image generation function to respond to the pressure from Alphabet-C's suite of multimodal capabilities. The competition between these two Silicon Valley tech giants to release new AI products reflects the ongoing escalation of Global AI competition. As AI competition intensifies, companies are accelerating their R&D efforts, whether in reasoning models, multimodal large models, or AI agents, and new technological advancements and breakthroughs are expected to continue.

Editor/Rocky

值得注意的是,就在谷歌发布Gemini 2.5大约一小时后,OpenAI就紧急发布了迄今为止最先进的图像生成器GPT-4o图像生成技术。据介绍,GPT-4o图像生成功能可精准文本渲染、严格遵循指令提示、深度调用4o知识库及对话上下文——包括对上传图像进行二次创作或将其转化为视觉灵感。OpenAI创始人兼CEO山姆·奥特曼在直播中还现场用GPT-4o自拍生成了一张漫画图片。

值得注意的是,就在谷歌发布Gemini 2.5大约一小时后,OpenAI就紧急发布了迄今为止最先进的图像生成器GPT-4o图像生成技术。据介绍,GPT-4o图像生成功能可精准文本渲染、严格遵循指令提示、深度调用4o知识库及对话上下文——包括对上传图像进行二次创作或将其转化为视觉灵感。OpenAI创始人兼CEO山姆·奥特曼在直播中还现场用GPT-4o自拍生成了一张漫画图片。

Comment(0)

Reason For Report