Source: Semiconductor Industry Watch.

At yesterday's Computex conference, Dr. Lisa Su released AMD's latest roadmap. Afterwards, the foreign media outlet More Than Moore published Lisa Su's post-conference interview, which we have translated and summarized as follows:

Q: How does AI help you personally in your work?
A: AI affects everyone's life. Personally, I am a loyal user of GPT and Copilot. I am very interested in the AI used internally at AMD. We often talk about AI for customers, but we also prioritize AI internally because it can make our company better, for example by helping us make better chips faster. We hope to integrate AI into the development process, as well as into marketing, sales, human resources, and every other field. AI will be ubiquitous.

Q: NVIDIA has explicitly told investors that it plans to shorten its development cycle to once a year, and now AMD plans to do the same. How and why do you do this?
A: This is what we see in the market. AI is our company's top priority. We are drawing on the development capabilities of the entire company and increasing investment. There will be something new every year, because the market needs updated products and more features. The product portfolio can address a variety of workloads. Not every customer will use every product, but there will be something new every year, and it will be the most competitive. This involves investment and ensuring that hardware and software systems are part of it, and we are committed to making AI our biggest strategic opportunity.

Q: In the PC world, the TOPS count of Strix Point (Ryzen AI 300) has increased significantly. TOPS cost money. How do you weigh TOPS against CPU and GPU?
A: Nothing is free, especially in designs where power and cost are limited. What we see is that AI will be ubiquitous. Copilot+ PCs and Strix currently offer more than 50 TOPS and will start at the top of the stack, but AI will run through our entire product stack. At the high end, we will expand TOPS because we believe that the more local TOPS there are, the more capable the AI PC becomes. Putting it on the chip increases its value and helps offload part of the computing from the cloud.

Q: Last week, you said that AMD will produce 3nm chips using GAA. Samsung foundry is the only one that produces 3nm GAA. Will AMD choose Samsung foundry for this?
A: Refer to last week's keynote address at imec. What we said is that AMD will always use the most advanced technology. We will use 3nm. We will use 2nm. We did not name a supplier for 3nm or GAA. Our cooperation with TSMC is currently very strong, and we talked about the 3nm products we are currently developing.

Q: On sustainability: AI means more power consumption. As a chip supplier, is it possible to optimize the power consumption of devices that use AI?
A: In everything we do, and especially in AI, energy efficiency is as important as performance. We are studying how to improve energy efficiency in every future product generation. We have said that we will improve energy efficiency by 30 times between 2020 and 2025, and we expect to exceed that goal. Our current goal is to increase energy efficiency by 100 times in the next 4-5 years. So yes, we can focus on energy efficiency, and we must, because it will become a limiting factor for future computing.

Q: We had CPUs before, then GPUs, and now we have NPUs. First, how do you see the scalability of NPUs? Second, what is the next big chip? A neuromorphic chip?
A: You need the right engine for each workload. CPUs are very well suited to traditional workloads. GPUs are very well suited to gaming and graphics tasks. NPUs provide AI-specific acceleration. As we move forward and research new acceleration technologies, we will see some of them evolve, but ultimately this is driven by applications.

Q: You initially broke Intel's status quo by increasing the number of cores. But the core count of recent generations of your consumer products has plateaued. Is this enough for consumers and the gaming market, or should we expect more cores in the future?
A: I think our strategy is to continuously improve performance. In games especially, software developers do not always use all the cores. There is no reason we could not go beyond 16 cores. The key is to develop at a pace at which software developers can actually make use of those cores.

Q: Regarding desktops, do you think more efficient NPU accelerators are needed?
A: We see that NPUs have an impact on desktops. We have been evaluating which product segments can use this capability. You will see desktop products with NPUs in the future as we expand our product portfolio.

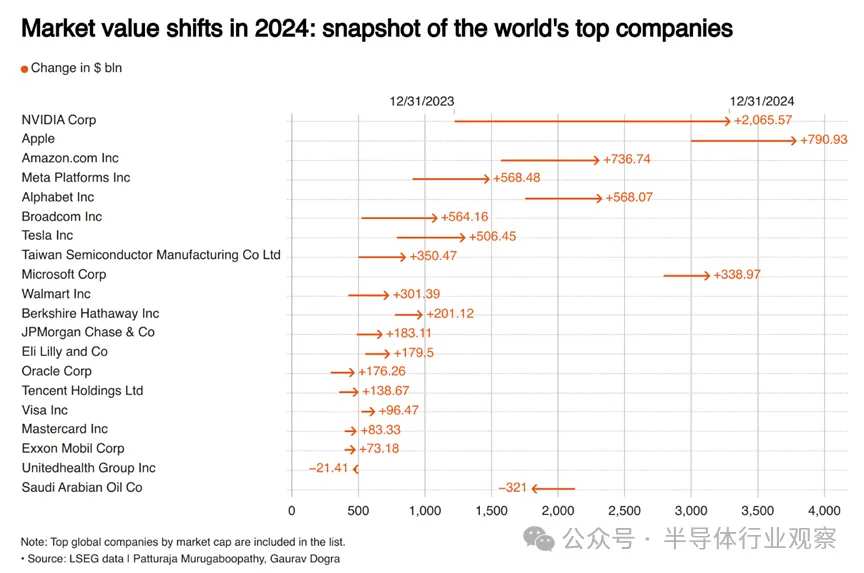

According to Reuters, driven by the surge in interest in AI and strong demand for AI-centric chips across industries, NVIDIA (NVDA.US) became the world's fastest-growing company by market cap in 2024.

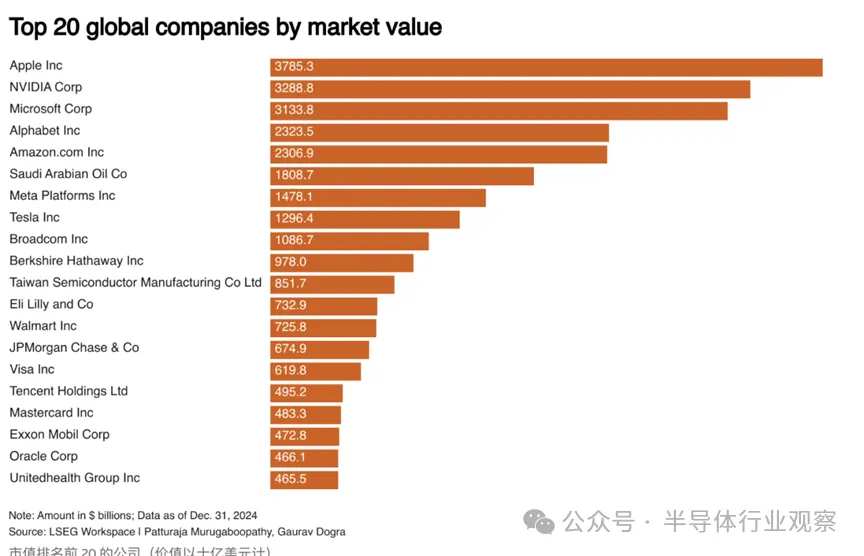

The chipmaker's market cap increased by over 2 trillion dollars last year, and its stock price rose by 171% in 2024, making it the third best-performing stock in the S&P 500 Index. Its market cap reached 3.28 trillion dollars at the end of 2024, making it the second most valuable publicly traded company in the world. At the end of 2023, by comparison, its market cap was 1.2 trillion dollars.
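The size of that increase can be checked from the two year-end figures quoted above. The following is a minimal sketch of the arithmetic; the function and variable names are illustrative, and the inputs are the rounded figures from the article rather than exact market data.

```python
# Year-end market caps quoted in the article, in trillions of US dollars.
CAP_END_2023 = 1.2
CAP_END_2024 = 3.28


def absolute_gain(start, end):
    """Increase in market cap, in the same unit as the inputs."""
    return end - start


def percent_gain(start, end):
    """Increase in market cap as a percentage of the starting value."""
    return (end - start) / start * 100.0


gain = absolute_gain(CAP_END_2023, CAP_END_2024)
pct = percent_gain(CAP_END_2023, CAP_END_2024)
print(f"Gain: {gain:.2f} trillion dollars ({pct:.0f}% growth)")
```

With these rounded inputs the gain comes to about 2.08 trillion dollars, or roughly 173%, which is close to the 171% rise in the stock price; the two need not match exactly, since market cap also depends on the number of shares outstanding.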

Meanwhile, Apple continues to lead in global company market cap, nearing a historic 4 trillion dollars. This surge is driven by investor enthusiasm for the company’s anticipated AI-enhanced features, aimed at reviving sluggish iPhone sales. At the end of 2024, Microsoft (MSFT.US) ranked third with a market cap of 3.1 trillion dollars, followed by Alphabet-C (GOOG.US) and Amazon (AMZN.US), each valued at approximately 2.3 trillion dollars.

It is worth mentioning that the 20 companies with the highest market cap growth last year also included Broadcom (AVGO.US) and Taiwan Semiconductor (TSM.US). Broadcom's market cap increased by 564.16 billion dollars, and Taiwan Semiconductor's increased by 350.47 billion dollars. This lifted the two companies to ninth and eleventh place globally by market cap, respectively.

As Dave Vellante, chief analyst at theCUBE Research, said: "The momentum of Nvidia's development is astonishing and has attracted the attention of everyone in the industry. The speed of innovation in the AI ecosystem is remarkable, and Nvidia's rate of innovation is especially impressive."

Looking back, it is this pace of innovation that has made Nvidia what it is today.

Building platforms around AI innovation

Many people might attribute Nvidia's rapid rise to the top of the technology food chain to perfect timing: being in the right place when AI suddenly took off.

However, there is more to the story behind the development of this 31-year-old company. Nvidia has followed a well-thought-out strategy, building a complex software stack around its processor product portfolio, enabling it to provide tailored tools and microservices required for AI applications.

"Nvidia bets that what they now call accelerated computing will become mainstream, and by mastering key components - very large and fast GPUs, proprietary networks, and specialized software - it will lead the market, and indeed it has," Vellante said.

In 2006, the company made a significant decision to launch the Compute Unified Device Architecture (CUDA). This parallel computing platform lets developers use the GPU for general-purpose computation through application programming interfaces, and it supports a range of workloads, including deep learning.

In 2012, the convolutional neural network AlexNet, designed by a team including OpenAI co-founder Ilya Sutskever, won the ImageNet challenge using NVIDIA's CUDA architecture. This demonstrated that NVIDIA's technology could reach beyond the gaming field where the company had previously made its name.

Tony Baer, head of dbInsight LLC, explained in an analysis for SiliconANGLE: "The AlexNet research paper was downloaded more than 100,000 times, making CUDA widely known."

Although in 2012, AI and deep learning were still seen as smaller, niche projects in the tech world, NVIDIA's Jensen Huang seized this opportunity. His annual speech at the company’s GTC conference began to include NVIDIA’s vision for creating new GPUs for machine learning and deep neural networks that could replace the expensive and time-consuming processes driven by central processing units or CPUs.

"Tasks that previously required 1,000 servers and around 5 million dollars can now be completed with just three GPU-accelerated servers," said Jensen Huang during the keynote speech at GTC 2014. "The computational density is completely different. The energy consumption and cost have been reduced by 100 times."

Two years later, Huang donated the company's first DGX supercomputer to Elon Musk, who was then the co-chair of a newly formed venture, OpenAI. During the development of the device, Musk had expressed interest in it to NVIDIA’s CEO. By the end of 2022, OpenAI launched ChatGPT to the world.

Establishing partnerships with major players

As NVIDIA grew into an AI-driven giant, it actively formed partnerships with a wide range of industry participants, including the world's largest cloud computing providers.

Customers of Amazon Web Services Inc. can reserve hundreds of NVIDIA H100 Tensor Core GPUs co-located in Amazon EC2 UltraClusters for machine learning workloads. In March, Google Cloud announced that it had adopted NVIDIA's Grace Blackwell computing platform, and the chipmaker's H100-powered DGX Cloud is now generally available to Google’s customers.

Last August, Microsoft announced that it would offer NVIDIA's most powerful GPUs through Azure as part of its v5 virtual machine series. Analysts believe this move is an extension of the cloud provider's significant investment in OpenAI.

The interest of the major cloud computing companies in NVIDIA's GPU platform stems from a simple reality: cloud customers want it. Reports suggest that at some point in 2023, the lead time for NVIDIA's H100 AI chip was about 40 weeks.

This is an ideal situation for any company in the fiercely competitive technology industry. Over the past 15 years, NVIDIA has successfully ridden the roller-coaster demand from cryptocurrency mining and online gaming, and it has capitalized on a wave of technology adoption that began in 2023 and continues to this day.

"Luck combined with vision, skill, and courage," Vellante said. "They were very lucky that the crypto boom not only fueled the growth of gaming but also bridged the gap between the world before and after ChatGPT. But they have the skills, expertise, and vision to embrace enormous challenges and create a market."

Shaping products according to the competitive landscape

Having established a clear lead in the processor market, NVIDIA holds about 80% of the market for AI chips used in data centers, which makes the company a primary target for competitors.

Advanced Micro Devices (AMD.US) has been competing intensely with NVIDIA (NVDA.US) in the GPU market. In April this year, AMD launched the Ryzen PRO 8040 series processors for AI PCs, which combine a CPU, a GPU, and an on-chip neural processing unit to provide dedicated AI processing capabilities. Nvidia launched the RTX A400 GPU to accelerate ray tracing and AI workloads. In June this year, AMD released the Instinct MI325X processor for generative AI workloads, taking aim at Nvidia's dominant position in the data center GPU sector.

The arms race between Nvidia and AMD is putting pressure on Intel to keep pace. Intel released its third-generation Gaudi AI chip in April, claiming that the chip delivers four times the performance of the previous generation in data processing for AI applications and outperforms Nvidia's H100.

Broadcom is increasingly seen as a major challenger to Nvidia's dominance. Recent reports indicate that major AI chip buyers, such as Microsoft, Meta, and Alphabet, are seeking to diversify their supply chains. Broadcom is collaborating with large technology companies to develop their own AI chips, which may begin challenging Nvidia's market power in 2025.

Smaller startups also have the potential to challenge Nvidia's dominance in the datacenter GPU market. Reports suggest that Cerebras Systems Inc., which has filed for an IPO, recently announced advancements in materials research that could train large language models faster and at a lower cost.

Observers will learn more about how Nvidia plans to respond to the changing competitive landscape at its annual GTC conference scheduled for mid-March in San Jose, California. Although the company traditionally follows an annual release schedule for next-generation AI chips, there is increasing speculation that the company will accelerate this cycle at GTC next spring.

This may involve the release of GB300 AI servers equipped with Blackwell GPUs and an earlier than expected launch of the Rubin architecture. Rubin is Nvidia's GPU microarchitecture, initially revealed by Jensen Huang at the Taipei International Computer Show in June. It uses a new type of high-bandwidth memory and NVLink 6 Switch, with reports suggesting that Nvidia will move the Rubin timeline up from 2026 to next year.

Leading industry transformation and establishing a leading position

The factors that could challenge NVIDIA's current dominance may have less to do with competition than with speed and scalability. Bandwidth and latency matter a great deal when processing AI workloads, and the current debate over data center switching technology, InfiniBand versus Ethernet, may affect the GPU giant.

The reason is that NVIDIA has focused heavily on InfiniBand because of its high throughput and low latency. However, some analysts predict that as technology advances and computing clusters scale beyond 100 terabits per second, the use of Ethernet in AI training will accelerate in the coming years. NVIDIA's latest InfiniBand switch offers an aggregate speed of 51.2 terabits per second, but as a more open, AI-driven computing world evolves, the company's proprietary switching protocol may ultimately put it at a disadvantage.

John Furrier of theCUBE Research stated: "One problem NVIDIA faces is that they may be seen as proprietary technology, and technology-centric. The impact of generative AI is changing computing, storage, and networking, with a new 'computing paradigm' emerging rapidly around cluster systems. I expect NVIDIA to face challenges, not in the GPU and software stack, but in the industry trend towards open, distributed, and heterogeneous computing."

The competitive challenges faced by NVIDIA have not prevented stock market investors from pushing the company's valuation to an all-time high. In June of this year, the company briefly surpassed Microsoft to become the world's most valuable company, and Goldman Sachs's trading division referred to NVIDIA as the "most important stock on the planet" this year.

In the company's latest earnings report, NVIDIA announced a quarterly net income of $19.3 billion, surpassing the income of the previous fiscal year's first nine months.

Holger Mueller of Constellation Research Inc. commented: "This is unprecedented growth and acceleration for such an established company."

Despite the strong demand for NVIDIA's AI processors, the leather-jacketed CEO seems to have no illusions about the competitive world he is in. This year is crucial for NVIDIA to scale up and demonstrate its ability to collaborate within a broad technology ecosystem.

"I don't think people are trying to put me out of business; I probably know they are trying to, so that's different," Huang said in an interview at the end of last year. "I live in an environment where we are desperate on one hand and ambitious on the other."

This combination has allowed NVIDIA to build what industry observers call a "moat" in the chip market, protecting the company against invaders attempting to take away its business.

In today's computing era, this moat is characterized by breakthroughs in large language models and AI. NVIDIA has positioned itself as a comprehensive platform provider, offering end-to-end solutions for the AI data center market, achieving significant advances in teraflops performance (trillions of floating-point operations per second), and committing to more innovations in the processor space. It has become the AI factory, and if anyone wants to cross the moat to storm the castle, they had better start rowing now.

"One key aspect of NVIDIA's moat is that it builds entire AI systems and then breaks them down and sells them in chunks," said Vellante. "Therefore, when it sells AI products, whether chips, networks, software, or other products, it knows where the bottlenecks are and can help customers fine-tune their architectures. From our perspective, NVIDIA's moat is deep and wide. It has an ecosystem and is aggressively driving innovation."

What is the outlook for NVIDIA?

A Wall Street investment bank stated that NVIDIA's leading position in the AI market will continue in the coming years. NVIDIA's stock price rose on Thursday. Loop Capital Markets analysts Ananda Baruah and John Donovan stated in a report on Wednesday that NVIDIA's "longevity potential" is undervalued.

Previously, some analysts had worried that custom chips designed by hyperscale cloud computing providers in collaboration with fabless chipmakers Broadcom (AVGO.US) and Marvell Technology (MRVL.US) would pose a competitive threat.

At the same time, Nvidia executives believe they have over $2 trillion in revenue opportunities in accelerated computing, generative AI, and inference, and "they can achieve substantial leadership in each area," according to Loop Capital.

Baruah and Donovan compared Nvidia's impact on the computing field to that of the musical group Nirvana, which had a 'transformational impact' on the music industry and achieved sustainable success.

The analysts rate Nvidia stock a Buy, with a target price of $175. In today's trading, Nvidia's stock price rose by 3%, closing at $138.31. The key to Nvidia's leading position in AI lies in its expertise across the entire technology stack, as noted by Loop Capital. This includes semiconductors, systems, and software. The firm stated that this technology stack and its installed user base constitute a competitive advantage.

Baruah and Donovan wrote: 'We believe that people underestimate the extent of the key 'stack-top' capabilities Nvidia has already created, which may give it characteristics similar to a moat in training and inference.'

The first wave of the trend in generative AI computing is training, and the next wave is inference. AI training is the process of teaching AI models to recognize patterns and make predictions, while AI inference is the process of the model using that training to make predictions on new data.
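The distinction between the two phases can be illustrated with a deliberately tiny model. The sketch below is an illustration only, not how production AI systems are built: it assumes a one-variable linear model and made-up data, and the names `train` and `infer` are hypothetical. Training estimates the model's parameters from known examples; inference applies those fixed parameters to an input the model has not seen.

```python
def train(xs, ys):
    """Training: estimate slope w and intercept b of y = w*x + b by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def infer(model, x):
    """Inference: apply the already-trained parameters to a new input."""
    w, b = model
    return w * x + b


# Made-up training examples that follow the pattern y = 2x + 1.
train_x = [1.0, 2.0, 3.0, 4.0]
train_y = [3.0, 5.0, 7.0, 9.0]

model = train(train_x, train_y)   # done once, computationally expensive at scale
prediction = infer(model, 10.0)   # repeated for every new request
print(model, prediction)
```

Training happens once per model, whereas inference runs every time the model is used, which is one way to read the analysts' view that inference is the next wave of computing demand.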

Analysts stated: 'Nvidia may have already locked in the inference field, while the game is just beginning. Nvidia has positioned itself as the 'preferred next-generation AI inference platform'... but it has not yet been widely recognized.'

Baruah and Donovan stated that this position is reflected in its AI ecosystem, with 5 million developers, thousands of applications, and collaborations with all the relevant cloud computing providers and hardware suppliers.

The following are Wall Street's expectations for this chip manufacturer in 2025:

The stock will still lead in the AI sector.

Wedbush stated that NVIDIA will continue to be a major attraction in the AI field and listed the company as the top winner in AI technology for 2025.

Analysts expect that AI spending will also "increase significantly" in the coming year, estimating that capital expenditure in the field may increase by another 2 trillion dollars over the next three years.

In a recent report, they stated: "This spending of over 2 trillion dollars on AI began with AI pioneer Jensen and NVIDIA, as they remain the only game in town, and their chips are the new gold and oil."

In another report, they added: "Despite some nervous moments in 2025 due to worries about the Federal Reserve, the tariff poker game with China, and overvaluation, this will create opportunities in technology themes and key names, which has been the core of our investment strategy in the technology sector over the past two years."

Blackwell will become the focus.

Morgan Stanley stated that investors will be discussing Blackwell's success in 2025. If NVIDIA's next-generation GPU launches smoothly, it could ease investors' concerns about demand for NVIDIA chips and about competitors in the field.

"We tend to be most bullish on NVIDIA when recent data appears mixed but the underlying dynamics are very strong. We believe we are approaching that point now," the bank's analysts said in a report. "There is transitional pressure, but we believe that by 2H25, the only topic will be the strength of Blackwell."

Blackwell has already generated considerable hype among NVIDIA bulls. Last year, CEO Jensen Huang stated that the demand for Blackwell is "crazy," which boosted profit growth expectations, leading to a surge in the company's stock price.

The bank reiterated its "Overweight" rating on the stock, stating that NVIDIA is its "preferred" choice for next year. The analysts' target price is $166 per share, implying a 21% upside from the current stock price.

A significant catalyst for the stock may emerge in January.

Citi analysts noted in a report at the end of last year that after Jensen Huang's keynote speech at the Consumer Electronics Show, the chipmaker's stock price could rise as soon as January.

The Consumer Electronics Show will be held from January 7 to January 10. Jensen Huang's opening speech is scheduled for the evening of January 6. Following the speech, Huang will hold a Q&A session with analysts the next day.

Citi analyst Atif Malik stated that Huang's speech could elevate expectations for Blackwell's sales, potentially driving NVIDIA's stock price to double-digit growth.

Malik wrote: "We... have actively observed catalysts for CES in January, and we expect Blackwell's sales forecast to rise, with management discussing changes in business and robotics industry demand driven by inference."

The bank reiterated its "Buy" rating on the stock in its report and raised its target price to $175 per share, indicating a 27% increase from the current price.

Strong chip demand must match high expectations.

Analysts at Bank of America state that Blackwell's demand may exceed Nvidia's production for several quarters.

At the same time, demand for its Hopper GPU may also remain strong.

Bank of America strategists mentioned in a report at the end of last year: "As investors digest the lack of a 'sell point', we expect the stock price to fluctuate in the short term, but we remain bullish on the stock's 'substance'."

However, overly high expectations for the stock may pose risks. Despite Nvidia's earnings easily exceeding expectations, there is still a slight gap compared to the highest expectations, leaving investors disappointed over the past few quarters. In the past two quarters, the stock experienced sell-offs after earnings announcements, but they did not last long.

Analysts stated: "Bullish investors' expectations have always been 10-20% higher than the analyst consensus, which has muted the surprise factor."

The bank reaffirmed its "Buy" rating on the stock and a target price of $190, indicating a 39% upside potential for the stock.
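The upside percentages quoted with each target price follow from a simple ratio of target price to share price. As a rough sketch (the function names are illustrative, and the inputs are the rounded figures quoted in this article, which come from reports written on different dates), the calculation and its inverse look like this:

```python
def implied_upside(target, price):
    """Percentage gain needed for the share price to reach the target."""
    return (target / price - 1.0) * 100.0


def implied_reference_price(target, upside_pct):
    """Share price a report must have assumed, given its target and stated upside."""
    return target / (1.0 + upside_pct / 100.0)


# (target price in USD, stated upside in percent), as quoted in the article.
quotes = {
    "Morgan Stanley": (166.0, 21.0),
    "Citi": (175.0, 27.0),
    "Bank of America": (190.0, 39.0),
}

reference_prices = {
    bank: implied_reference_price(target, upside)
    for bank, (target, upside) in quotes.items()
}
for bank, price in reference_prices.items():
    print(f"{bank}: implied share price of about ${price:.2f}")

# Upside of the $175 target relative to the $138.31 close quoted earlier.
loop_upside = implied_upside(175.0, 138.31)
print(f"$175 target vs. $138.31 close: about {loop_upside:.1f}% upside")
```

Under these inputs, all three reports imply a share price of roughly $137 at the time of writing, which is consistent with the $138.31 close mentioned above.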

Editor/Rocky
