ChatGPT's Advanced Voice Mode can now respond in real time to video and screen-sharing content. With Christmas approaching, OpenAI has also added a Santa Claus voice to the feature.

ChatGPT's Advanced Voice Mode (AVM) now supports video and screen sharing! The features begin rolling out to paid ChatGPT Plus and Pro subscribers on Thursday, and Enterprise and Edu customers will get access in January.

On the sixth day of its "12 Days of OpenAI" event, the AI startup announced that ChatGPT can recognize objects captured by the camera or displayed on the device's screen and respond to them through Advanced Voice Mode. Users can chat with ChatGPT through their phone's camera, and the model will "see" what they see.

OpenAI first previewed this feature when it launched the GPT-4o model in May. The startup said AVM is powered by its natively multimodal GPT-4o model, which means it can take audio input and respond in a natural, conversational way.

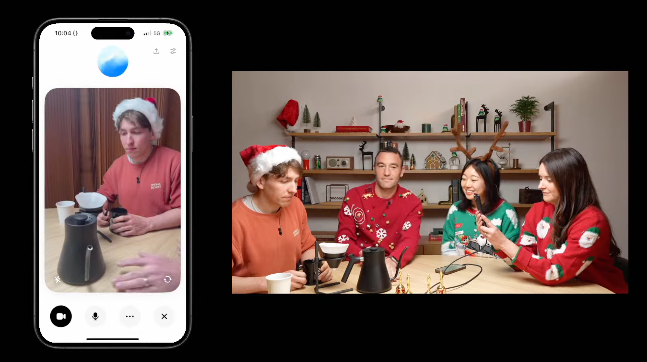

OpenAI's video mode feels like a video call: ChatGPT responds in real time to whatever the user shows it. It can see the user's surroundings, identify objects, and even remember a person who has introduced themselves. During a livestream, Chief Product Officer (CPO) Kevin Weil and other team members demonstrated ChatGPT helping to make pour-over coffee. With the camera pointed at the coffee-making, AVM guided the team through the brewing process, showing that it understood how the pour-over setup works.

ChatGPT can also recognize on-screen content. In the demo, OpenAI researchers turned on screen sharing, opened a messaging app, and asked ChatGPT to help reply to a photo received by text message.

This long-awaited announcement came the day after Google launched its next-generation flagship model, Gemini 2.0. Gemini 2.0 can also process visual and audio input and has stronger agentic capabilities, meaning it can carry out multi-step tasks on users' behalf. These agent capabilities currently take the form of three named research prototypes: Project Astra, a general AI assistant; Project Mariner, for specific AI tasks; and Project Jules, for developers.

Last week, Microsoft also released a preview of Copilot Vision, which lets Copilot Pro subscribers open a Copilot chat while browsing the web. Copilot Vision can view photos on the screen and can even help with map-guessing games. Google's Project Astra can read the browser in the same way.

Not to be outdone, OpenAI used its demonstration to show ChatGPT's visual mode accurately recognizing objects and handling interruptions mid-response. The company also added a holiday Santa Claus voice option to voice mode, featuring a deep, cheerful voice and plenty of "ho-ho-hos." Users can chat with OpenAI's version of Santa Claus by tapping the snowflake icon in ChatGPT. Media outlets joked that it remains unclear whether the real Santa Claus lent his voice for AI training or whether OpenAI used it without his consent.

Advanced Voice Mode with visual capabilities had been postponed several times. Reports suggest this was partly because OpenAI announced the feature before it was ready. In April of this year, OpenAI promised to roll out Advanced Voice Mode to users "within a few weeks." Months later, the company was still saying it needed more time.