Source: Semiconductor Industry Watch. At yesterday's Computex conference, Dr. Lisa Su released AMD's latest roadmap. Afterwards, the foreign outlet More Than Moore published her post-conference interview, which we have translated and summarized as follows:

Q: How does AI help you personally in your work?

A: AI affects everyone's life. Personally, I am a loyal user of GPT and Copilot. I am also very interested in the AI that AMD uses internally. We often talk about AI for customers, but we also prioritize AI because it can make our own company better. For example, to make better chips faster, we hope to integrate AI into the development process, as well as into marketing, sales, human resources, and every other function. AI will be ubiquitous.

Q: NVIDIA has explicitly told investors that it plans to shorten its product cycle to once a year, and now AMD plans to do the same. How and why are you doing this?

A: This is what we see in the market. AI is our company's top priority. We are fully using the development capabilities of the entire company and increasing investment. The market needs updated products with more features, so there will be something new every year. Our product portfolio can address a variety of workloads. Not every customer will use every product, but there will be a new cadence every year, and each generation will be as competitive as possible. This involves investment and making sure hardware and software systems are part of it, and we are committed to making AI our biggest strategic opportunity.

Q: In the PC world, the number of TOPS in Strix Point (Ryzen AI 300) has increased significantly. TOPS cost money. How do you weigh TOPS against CPU and GPU resources?

A: Nothing is free! Especially in designs where power and cost are constrained. What we see is that AI will be ubiquitous. Currently, Copilot+ PCs and Strix offer more than 50 TOPS, starting at the top of the stack. But AI will run through our entire product stack. At the high end, we will keep scaling TOPS, because we believe that the more TOPS are available locally, the stronger the AI PC's capabilities. Putting them on the chip increases its value and helps offload part of the computing from the cloud.

Q: Last week, you said that AMD will produce 3nm chips using GAA. Samsung Foundry is the only one producing 3nm GAA. Will AMD choose Samsung Foundry for this?

A: Refer to last week's keynote at imec. What we said is that AMD will always use the most advanced technology. We will use 3nm. We will use 2nm. We did not name a supplier for 3nm or GAA. Our cooperation with TSMC is currently very strong; we talked about the 3nm products we are developing now.

Q: On sustainability: AI means more power consumption. As a chip supplier, can you optimize the power consumption of devices that use AI?

A: For everything we do, especially AI, energy efficiency is as important as performance. We are studying how to improve energy efficiency in every future product generation. We said we would improve energy efficiency 30 times between 2020 and 2025, and we expect to exceed that goal. Our current goal is a 100-fold increase in energy efficiency over the next four to five years. So yes, we can focus on energy efficiency, and we must, because it will become a limiting factor for future computing.

Q: We had CPUs, then GPUs, and now we have NPUs. First, how do you see the scalability of NPUs? Second, what is the next big chip? Neuromorphic chips?

A: You need the right engine for each workload. CPUs are well suited to traditional workloads. GPUs are well suited to gaming and graphics. NPUs provide AI-specific acceleration. As we move forward and research new, specific acceleration technologies, we will see some of them evolve, but ultimately it is driven by applications.

Q: You initially broke Intel's status quo by increasing core counts. But core counts in your consumer products have plateaued over several generations. Is this enough for consumers and the gaming market, or should we expect more cores in the future?

A: I think our strategy is to keep improving performance. Especially for games, developers do not always use all the cores. There is no reason we can't go beyond 16 cores. The key is to move at a pace where software developers can actually make use of those cores.

Q: For desktops, do you think more efficient NPU accelerators are needed?

A: We see NPUs having an impact on desktops. We have been evaluating which product segments can use this capability. You will see desktop products with NPUs in the future, expanding our product portfolio.
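To put Su's efficiency targets in perspective: if a total gain G is spread evenly over n years, the implied per-year improvement factor is r = G^(1/n). The Python sketch below applies that formula to the two figures she cites. It assumes smooth compounding, whereas real gains arrive in discrete product generations, and the helper name `annual_factor` is our own.

```python
def annual_factor(total_gain: float, years: float) -> float:
    """Per-year improvement factor that compounds to `total_gain` over `years` years."""
    if total_gain <= 0 or years <= 0:
        raise ValueError("total_gain and years must be positive")
    return total_gain ** (1.0 / years)


# 30x energy-efficiency goal for 2020-2025 (five years)
print(f"30x over 5 years:  {annual_factor(30, 5):.2f}x per year")

# 100x goal over "the next 4-5 years"
for years in (4, 5):
    print(f"100x over {years} years: {annual_factor(100, years):.2f}x per year")
```

Roughly, the 30x goal works out to about 2x per year, while 100x in four to five years implies about 2.5x to 3.2x per year, which shows how much more aggressive the new target is.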

Recently, Tenstorrent, led by renowned industry figure Jim Keller as CEO, announced the completion of a $693 million Series D financing round led by Samsung Securities and AFW Partners. Following this round, the AI chip startup is valued at approximately $2.6 billion.

Jim Keller, Tenstorrent's CEO and a semiconductor pioneer, said in an interview that the company hopes to develop chips that break Nvidia's monopoly in the AI business. The round was led by South Korea's AFW Partners and Samsung Securities. Bezos Expeditions joined LG Electronics Inc. and Fidelity in participating, betting on Keller's track record and on the booming opportunities in AI technology.

It is worth mentioning that Bezos Expeditions is controlled by Amazon founder Jeff Bezos. Given how many Nvidia chips AWS buys, the investment takes on added significance.

Besides the lead investors, many well-known investors took part in this round, including XTX Markets, Corner Capital, MESH, Export Development Canada, the Healthcare of Ontario Pension Plan, LG Electronics, Hyundai Motor Group, Fidelity Management & Research, Baillie Gifford, and Bezos Expeditions.

Tenstorrent said the round was oversubscribed due to strong investor demand.

Who is Tenstorrent?

Jim Keller has been covered extensively in the media, so there is no need to elaborate here; his remarkable resume is described in 'The Legendary Journey of Jim Keller's Chip Development.' As for Tenstorrent, it is the company Jim Keller leads as CEO.

Tenstorrent is headquartered in Santa Clara, California, and develops and sells computing systems designed specifically for AI workloads, all built around the company's Tensix core. Its goal is to break Nvidia's monopoly in the AI chip market and develop more affordable hardware for AI training and deployment by avoiding expensive components such as the high-bandwidth memory (HBM) that Nvidia uses.

"If you use HBM, you cannot beat Nvidia, because Nvidia buys the most HBM and has a cost advantage," Jim Keller said during an interview with Bloomberg. "But they can never lower the price like HBM built into their products and slots."

It is well known that Nvidia offers developers a full stack of proprietary technology spanning chips, interconnects, and even datacenter layouts, promising that all the components work better together because they are co-designed. In contrast, competitors such as AMD and Tenstorrent focus on interoperability with other technology providers, whether through shared industry standards or open designs that others can use.

To attract more potential customers, the company focuses on hardware designs that interoperate with other vendors. It uses the open-standard RISC-V processor architecture, aiming to give engineers and developers a more open ecosystem for integrating its processors and systems into their datacenter and server setups. "In the past, I used proprietary technology, and it was really difficult," Jim Keller said. "Open source helps you build a larger platform. It attracts engineers. Yes, it's a passion project."

To that end, Tenstorrent will license AI and RISC-V intellectual property to customers who want to own and customize their own dedicated chips. RISC-V is an open instruction set architecture (ISA) for building custom processors for different applications. Based on a so-called 'reduced instruction set', it is easy to use, customize, and optimize for power, performance, and functionality.

Like RISC-V itself and its Japanese partner Rapidus, Tenstorrent still has a lot to prove. So far, the young company has signed contracts worth nearly $150 million, which pales in comparison to Nvidia's quarterly data center revenue of billions of dollars.

The company said it will use the new funds to build an open-source AI software stack and hire developers to expand its global development and design centers. This will let it build systems and a cloud where AI developers can use and test models on its hardware.

Tenstorrent said its first chips were manufactured by GlobalFoundries, while Taiwan Semiconductor Manufacturing Company (TSMC) and Samsung Electronics will produce its next generation of chips. The company has also begun design work for cutting-edge 2-nanometer manufacturing. TSMC and Samsung will begin 2-nanometer mass production next year, and Tenstorrent is in talks with both of them as well as Japan's Rapidus, which aims to start 2-nanometer output by 2027.

Joshua Leahy, Chief Technology Officer of XTX Markets, said: "We find Tenstorrent's open-source-driven approach refreshing, especially in the proprietary and often secretive field of AI accelerators."

As the company starts using the new funding to scale up, it will face resistance in a market dominated by Nvidia. Still, Jim Keller believes that by offering more affordable AI chips that can be customized to business needs, and by releasing a new processor every two years, the company can keep its products commercially viable in the AI chip industry.

During a media interview, Jim Keller summarized:

Tenstorrent is a design company. We design CPUs, we design AI engines, we design AI software stacks.

So whether it's soft IP, hard IP, chiplets, or complete chips, all of these are possible. We are very flexible in this regard. For example, we will license our CPU multiple times before our own chiplet tapes out. We are talking with six companies that want to build things like custom memory chips or NPU accelerators. I believe that for our next generation, we will build both CPU and AI chiplets, while others will build other chiplets. Then we will integrate them into the system.

Why challenge Nvidia?

With Tenstorrent's vision covered above, let's turn to the company's products and roadmap.

In March 2023, Tenstorrent's chief CPU architect Wei-Han Lien said in a media interview that because Tenstorrent aims to address a wide range of AI applications, it needs not only different systems-on-chip and system-in-package designs but also a variety of CPU microarchitectures and system-level architectures to hit different power and performance targets.

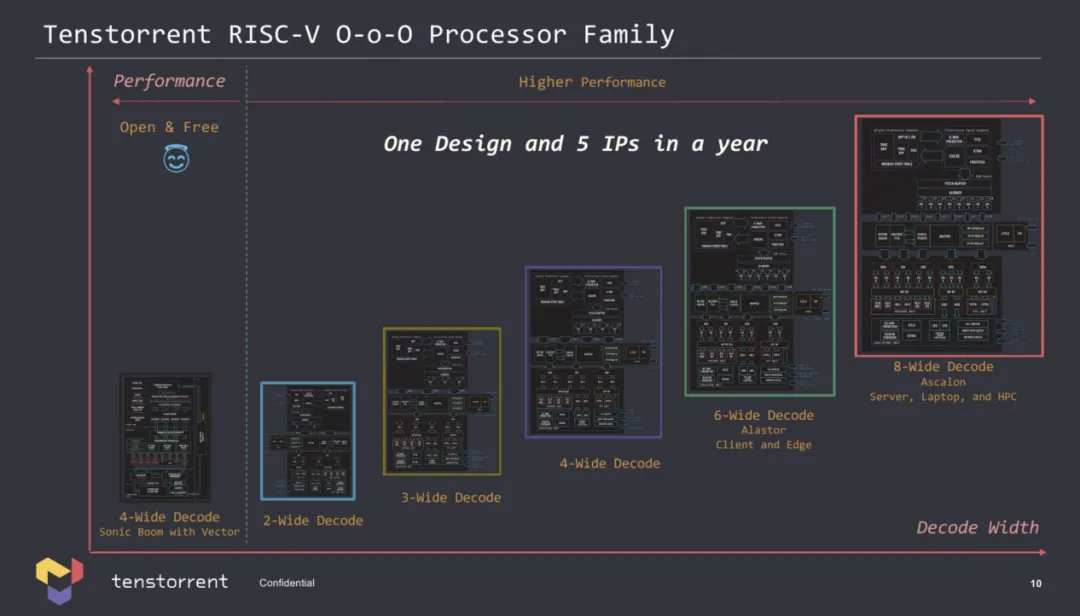

Tenstorrent said its CPU team has developed an out-of-order RISC-V microarchitecture and implemented it in five different configurations to meet the needs of various applications.

Tenstorrent now has five RISC-V CPU core IPs, with two-wide, three-wide, four-wide, six-wide, and eight-wide decode, which can be used in its own processors or licensed to interested parties. For customers who need a very basic CPU, the company can offer small two-wide cores, while for those needing higher performance in edge devices, client PCs, and high-performance computing, it offers the six-wide Alastor and eight-wide Ascalon cores.

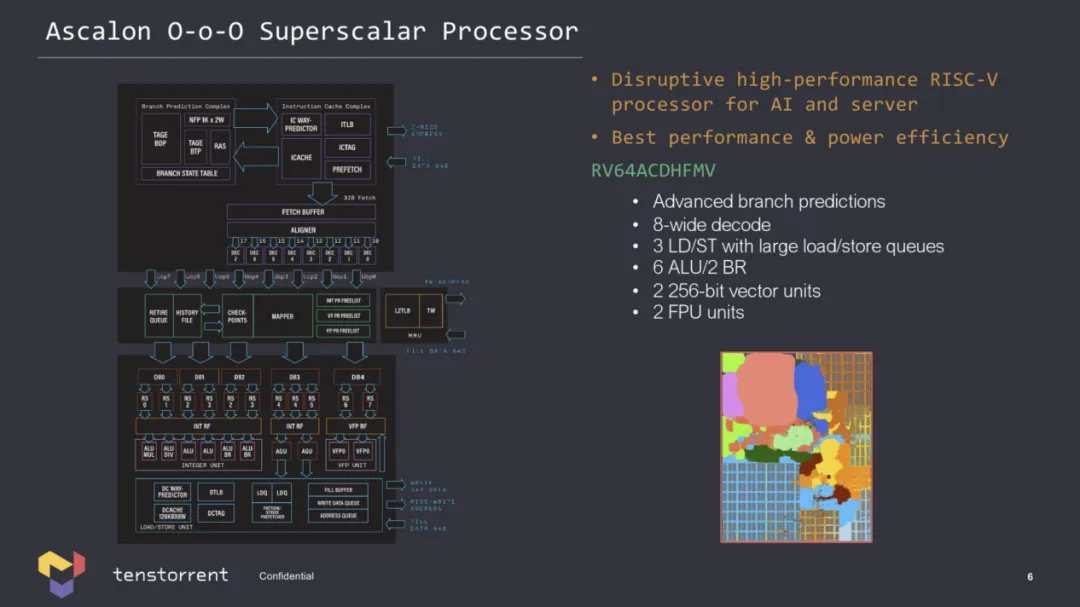

Each out-of-order, eight-wide Ascalon core (RV64ACDHFMV) features six ALUs, two FPUs, and two 256-bit vector units. Considering that modern x86 designs use four-wide (Zen 4) or six-wide (Golden Cove) decoders, this is a very powerful core.
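The string RV64ACDHFMV is a RISC-V ISA name: RV64 is the 64-bit base, and each following letter is a standard extension. A small Python sketch that decodes such single-letter strings; the letter meanings follow the RISC-V specification, while the helper name `parse_isa` is our own. It ignores multi-letter `Z*`/`S*` extensions and assumes the base integer set (I) is implied, since the string quoted above omits it.

```python
from __future__ import annotations

import re

# Meanings of the single-letter RISC-V standard extensions.
EXTENSIONS = {
    "I": "base integer instructions",
    "M": "integer multiply/divide",
    "A": "atomic memory operations",
    "F": "single-precision floating point",
    "D": "double-precision floating point",
    "C": "compressed (16-bit) instructions",
    "V": "vector instructions",
    "H": "hypervisor support",
}


def parse_isa(isa: str) -> tuple[int, list[str]]:
    """Split a single-letter ISA string such as 'RV64ACDHFMV' into XLEN and extension names."""
    match = re.fullmatch(r"RV(32|64|128)([A-Z]+)", isa.upper())
    if match is None:
        raise ValueError(f"not a single-letter RISC-V ISA string: {isa!r}")
    xlen = int(match.group(1))
    letters = match.group(2)
    unknown = [c for c in letters if c not in EXTENSIONS]
    if unknown:
        raise ValueError(f"unhandled extension letters: {unknown}")
    return xlen, [EXTENSIONS[c] for c in letters]


xlen, exts = parse_isa("RV64ACDHFMV")
print(f"XLEN = {xlen} bits")
for name in exts:
    print(" -", name)
```

The H and V letters are the notable ones here: hypervisor support and a vector unit put Ascalon in application-processor territory rather than among microcontroller-class RISC-V cores.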

In addition to various RISC-V general cores, Tenstorrent also has proprietary Tensix cores specifically tailored for neural network inference and training. Each Tensix core consists of five RISC cores, an array math unit for tensor operations, a SIMD unit for vector operations, 1MB or 2MB of SRAM, and fixed-function hardware for accelerating network packet operations and compression/decompression. Tensix cores support various data formats including BF4, BF8, INT8, FP16, BF16, and even FP64.
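BF16 (bfloat16), one of the Tensix formats listed above, keeps FP32's sign bit and 8-bit exponent but only 7 mantissa bits, so it has FP32's range at much lower precision. A minimal Python sketch of FP32-to-BF16 conversion, assuming round-to-nearest-even; the source does not say which rounding mode Tensix hardware applies, and NaN inputs are not handled.

```python
import struct


def fp32_to_bf16_bits(x: float) -> int:
    """Round an FP32 value to BF16 (round-to-nearest-even) and return the 16-bit pattern.

    NaN handling is omitted for brevity.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add half of the discarded range, breaking ties toward an even result.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_fp32(b: int) -> float:
    """Widen a BF16 bit pattern back to FP32 by appending 16 zero bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


for value in (1.0, 3.14159265, 1e30):
    b = fp32_to_bf16_bits(value)
    print(f"{value!r:>12} -> 0x{b:04X} -> {bf16_bits_to_fp32(b)!r}")
```

Because the exponent field is untouched, even 1e30 survives the conversion with only its low mantissa bits lost, which is why BF16 is popular for training where dynamic range matters more than precision.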

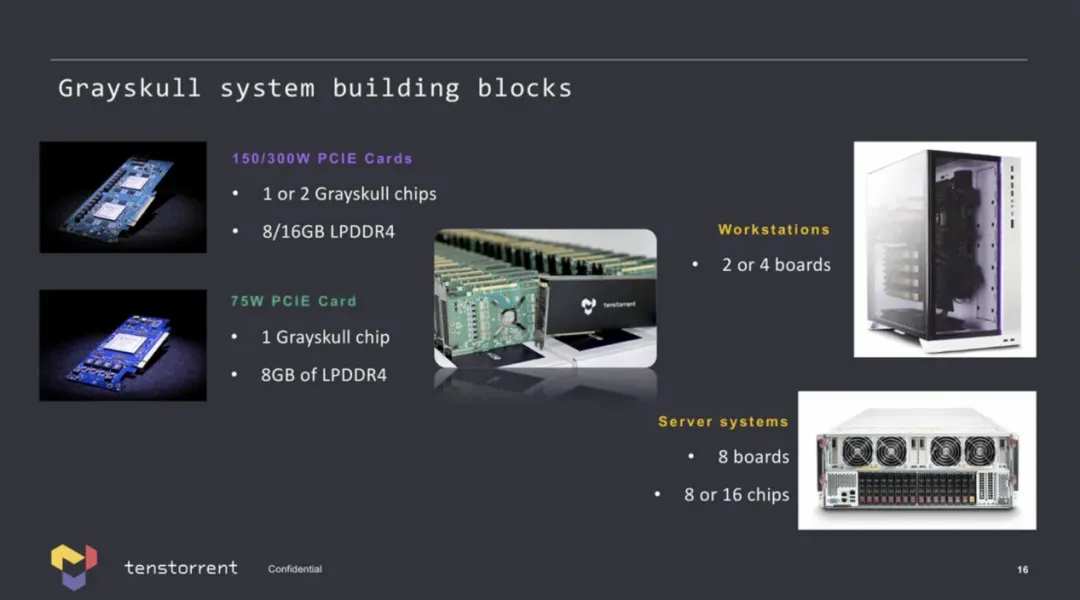

As of March 2023, Tenstorrent had two products: Grayskull, a machine learning processor offering about 315 INT8 TOPS that plugs into a PCIe Gen4 slot; and the networked Wormhole ML processor, offering about 350 INT8 TOPS, with a GDDR6 memory subsystem, a PCIe Gen4 x16 interface, and 400GbE links to other machines.

Both devices require a host CPU and can be used as add-in boards or built into preconfigured Tenstorrent servers. A 4U Nebula server contains 32 Wormhole ML cards, providing around 12 INT8 POPS of performance at a power draw of 6kW.
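The Nebula figures can be cross-checked with simple arithmetic. The sketch below multiplies the approximate per-card Wormhole figure by 32; it treats the quoted 6kW as whole-server power, which is an assumption, so the TOPS-per-watt number is only a rough system-level estimate.

```python
WORMHOLE_INT8_TOPS = 350   # per card, approximate figure quoted above
CARDS_PER_NEBULA = 32
NEBULA_POWER_KW = 6.0      # assumed to be whole-server power

total_tops = WORMHOLE_INT8_TOPS * CARDS_PER_NEBULA   # TOPS for the whole server
total_pops = total_tops / 1000                       # 1 POPS = 1,000 TOPS
tops_per_watt = total_tops / (NEBULA_POWER_KW * 1000)

print(f"{total_pops:.1f} INT8 POPS, {tops_per_watt:.2f} INT8 TOPS/W")
```

32 cards at about 350 TOPS each come to roughly 11.2 POPS, in line with the "around 12 POPS" quoted, given that the per-card figure is itself approximate.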

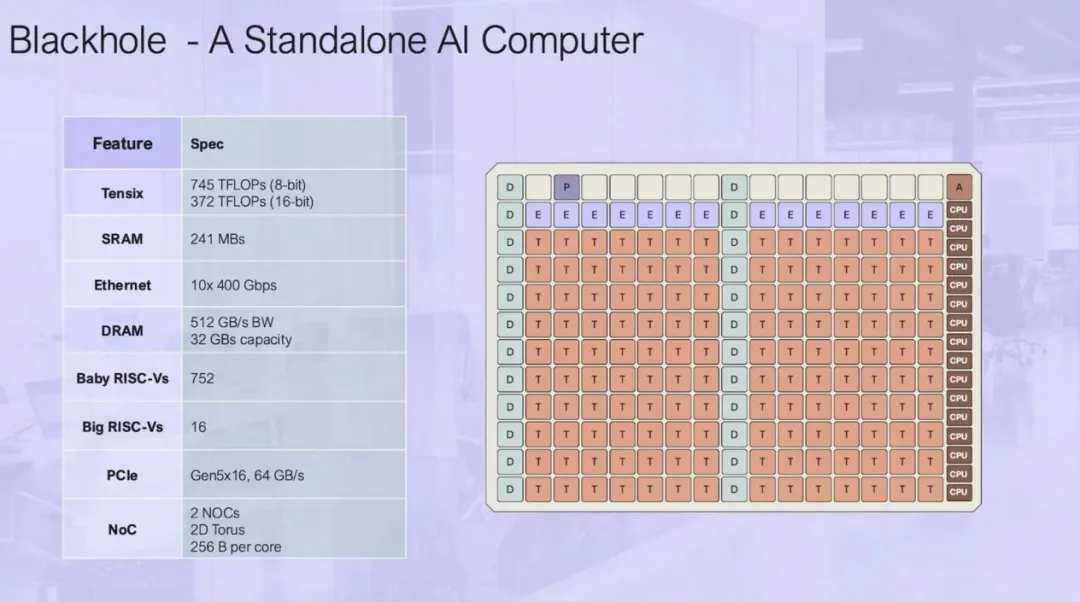

At Hot Chips this August, Tenstorrent unveiled the Blackhole AI accelerator. Unlike its predecessors Grayskull and Wormhole, which were deployed as PCIe-based accelerators, Blackhole is designed to operate as a standalone AI computer.

Tenstorrent claims the accelerator can outperform the Nvidia A100 in raw compute and scalability. Each Blackhole chip reportedly delivers 745 teraFLOPS of FP8 performance (372 teraFLOPS at FP16), carries 32GB of GDDR6 memory, and uses an Ethernet-based interconnect with a total bandwidth of 1TBps across its ten 400Gbps links.
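The 1TBps interconnect figure follows from the link count if both directions are counted. A quick Python check, assuming each 400Gbps Ethernet link is full duplex and that the quoted total sums both directions; the source does not say which convention it uses.

```python
LINKS = 10
LINK_GBITS = 400  # gigabits per second per link, per direction

aggregate_gbits = LINKS * LINK_GBITS                    # 4,000 Gb/s in one direction
per_direction_gbytes = aggregate_gbits / 8              # bits to bytes: GB/s each way
bidirectional_tbytes = 2 * per_direction_gbytes / 1000  # TB/s counting both directions

print(f"{per_direction_gbytes:.0f} GB/s each way, "
      f"{bidirectional_tbytes:.1f} TB/s counting both directions")
```

If the 1TBps figure were one-directional instead, it would require twice the per-link rate, so the bidirectional reading is the one consistent with ten 400Gbps links.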

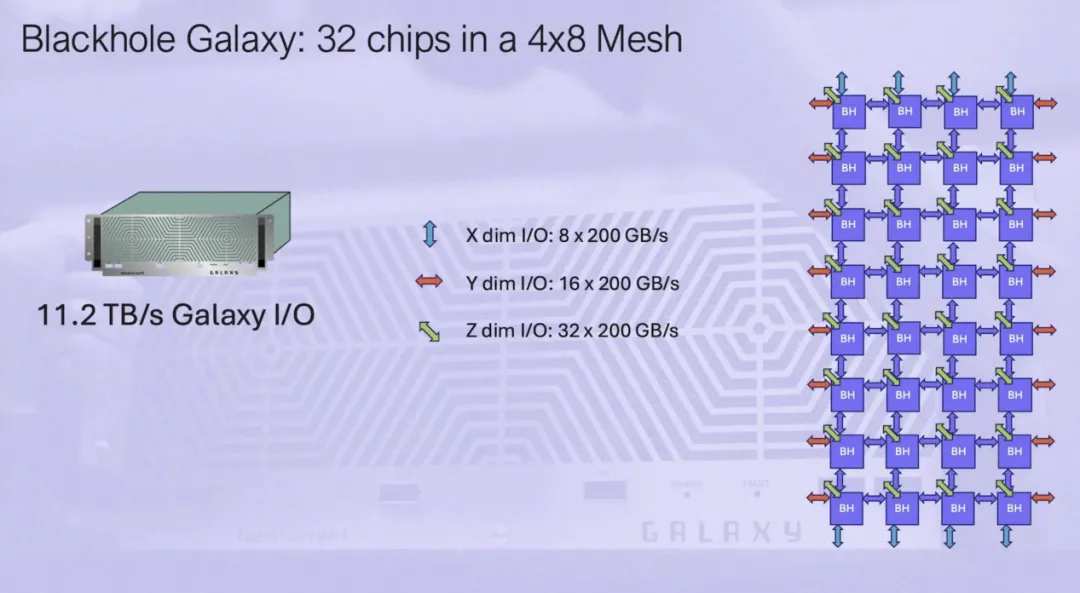

Tenstorrent showed that its latest chip holds a slight performance advantage over the Nvidia A100 GPU, although it trails in memory capacity and bandwidth. Like the A100, however, Blackhole is designed to be deployed as part of a scale-out system. The startup plans to connect 32 Blackhole accelerators in a 4x8 grid within a single node, which it calls the Blackhole Galaxy.

Overall, a single Blackhole Galaxy promises 23.8 petaFLOPS at FP8 or 11.9 petaFLOPS at FP16, along with 1TB of memory providing 16 TBps of raw bandwidth. Tenstorrent also said the chip's core-dense architecture (covered below) lets each of these systems act as a compute node, a memory node, or a high-bandwidth AI switch with 11.2 TBps.
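Multiplying the per-chip Blackhole figures by the 32 chips in a 4x8 Galaxy reproduces the quoted node totals. The implied per-chip memory bandwidth (16 TBps divided by 32) is our own derivation, not a figure stated by Tenstorrent.

```python
CHIPS = 4 * 8          # 4x8 grid of Blackhole chips
FP8_TFLOPS = 745       # per chip
FP16_TFLOPS = 372      # per chip
GDDR6_GB = 32          # per chip
GALAXY_BW_TBYTES = 16  # aggregate memory bandwidth quoted for the node

fp8_pflops = CHIPS * FP8_TFLOPS / 1000                   # node FP8 petaFLOPS
fp16_pflops = CHIPS * FP16_TFLOPS / 1000                 # node FP16 petaFLOPS
memory_gb = CHIPS * GDDR6_GB                             # node memory, ~1TB
per_chip_bw_gbytes = GALAXY_BW_TBYTES * 1000 / CHIPS     # implied GB/s per chip

print(f"FP8: {fp8_pflops:.2f} PF, FP16: {fp16_pflops:.2f} PF, "
      f"memory: {memory_gb} GB, implied bandwidth: {per_chip_bw_gbytes:.0f} GB/s per chip")
```

The 23.84 and 11.90 petaFLOPS results round to the 23.8 and 11.9 quoted, and 32 x 32GB = 1,024GB matches the "1TB" figure.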

Tenstorrent AI software and architecture senior researcher Davor Capalija stated, 'You can build an entire training cluster with it like Lego blocks.'

Notably, Tenstorrent uses on-chip Ethernet, which spares it the challenge of juggling multiple interconnect technologies for chip-to-chip and node-to-node networking, whereas Nvidia has to use NVLink alongside InfiniBand or Ethernet. In this respect, Tenstorrent's scale-out strategy is quite similar to Intel's Gaudi platform, which also uses Ethernet as its primary interconnect. Considering how many Blackhole accelerators Tenstorrent plans to fit into a single box, let alone a training cluster, it will be interesting to see how it handles hardware failures.

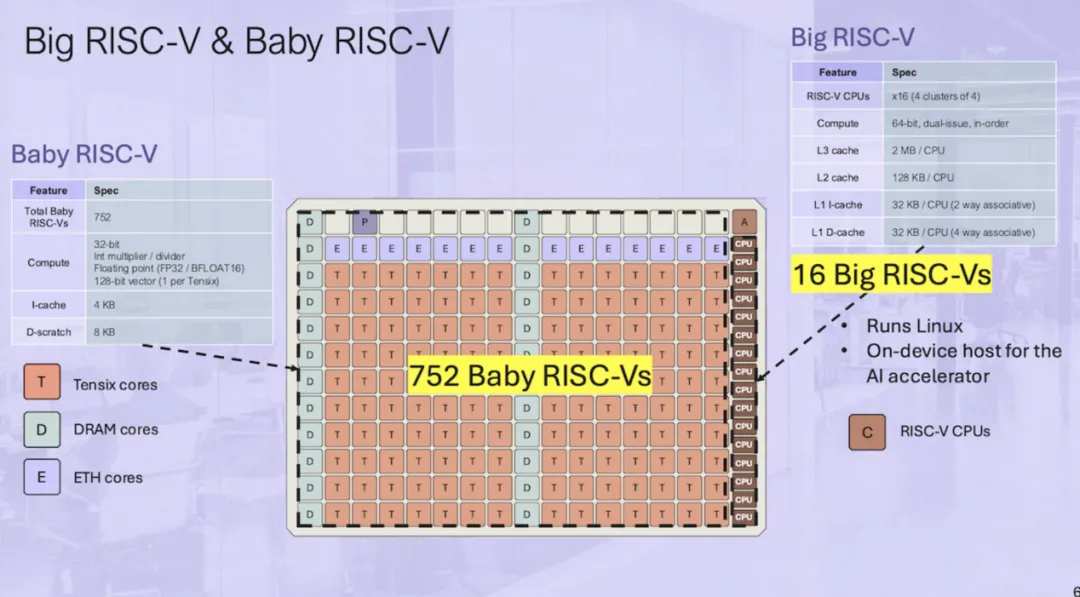

Tenstorrent stated that the Blackhole's ability to function as an independent AI computer is mainly thanks to 16 'Big RISC-V' 64-bit, dual-issue, in-order CPU cores arranged in four clusters. Crucially, these cores are powerful enough to serve as the device host running Linux. These CPU cores are paired with 752 'Baby RISC-V' cores, which are responsible for memory management, off-chip communication, and data processing.

However, the actual computations are handled by Tenstorrent's 140 Tensix cores, each consisting of five 'Baby RISC-V' cores, a pair of routers, a compute complex, and some L1 cache.

The compute complex consists of a tile math engine for accelerating matrix workloads and a vector math engine. The former will support Int8, TF32, BF/FP16, FP8, as well as block floating-point data types ranging from 2 to 8 bits, while the vector engine targets FP32, Int16, and Int32.
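Block floating point stores one shared exponent per block of values plus a small signed integer per element, which is how formats as narrow as 2 to 8 bits stay usable. A generic Python sketch of the idea, assuming a sign-inclusive mantissa width and round-to-nearest; Tenstorrent's actual block size, bit layout, and rounding are not described in the source, so this illustrates the technique rather than their format.

```python
import math


def bfp_quantize(block: list[float], mantissa_bits: int) -> tuple[int, list[int]]:
    """Quantize a block to one shared exponent plus signed integer mantissas.

    mantissa_bits includes the sign bit, so mantissas lie in [-limit, limit]
    with limit = 2**(mantissa_bits - 1) - 1.
    """
    if mantissa_bits < 2:
        raise ValueError("need at least 2 bits (sign plus magnitude)")
    if not block:
        raise ValueError("block must not be empty")
    max_abs = max(abs(v) for v in block)
    if max_abs == 0.0:
        return 0, [0] * len(block)
    limit = 2 ** (mantissa_bits - 1) - 1
    # Smallest shared exponent for which the largest value still fits within `limit`.
    shared_exp = math.ceil(math.log2(max_abs / limit))
    scale = 2.0 ** shared_exp
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in block]
    return shared_exp, mantissas


def bfp_dequantize(shared_exp: int, mantissas: list[int]) -> list[float]:
    """Reconstruct approximate values from the shared exponent and mantissas."""
    scale = 2.0 ** shared_exp
    return [m * scale for m in mantissas]


block = [0.5, -1.25, 3.0, 0.1]
shared_exp, mantissas = bfp_quantize(block, mantissa_bits=8)
restored = bfp_dequantize(shared_exp, mantissas)
print(shared_exp, mantissas, restored)
```

The small value 0.1 loses the most precision because it shares an exponent sized for 3.0; that trade-off between block size and accuracy is the central design choice in any block floating-point format.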

According to Tenstorrent, this configuration lets the chip support a variety of data patterns common in AI and HPC applications, including matrix multiplication, convolution, and chunked (blocked) data layouts.

Of the 752 so-called "Baby RISC-V" cores on Blackhole, the Tensix cores account for 700. The rest handle memory management ("D" stands for DRAM), off-chip communication ("E" stands for Ethernet), system management ("A"), and PCIe ("P").
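The core counts quoted above are consistent with one another. A short tally in Python; the breakdown of the remaining 52 cores across the D, E, A, and P functions is not given in the source, so the sketch stops at the total.

```python
TENSIX_CORES = 140
BABY_CORES_PER_TENSIX = 5
BABY_RISCV_TOTAL = 752
BIG_RISCV_CORES = 16

tensix_baby = TENSIX_CORES * BABY_CORES_PER_TENSIX  # Baby cores inside Tensix cores
other_baby = BABY_RISCV_TOTAL - tensix_baby         # DRAM, Ethernet, management, PCIe
all_riscv = BABY_RISCV_TOTAL + BIG_RISCV_CORES      # every RISC-V core on the chip

print(f"Tensix: {tensix_baby}, other Baby: {other_baby}, all RISC-V: {all_riscv}")
```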

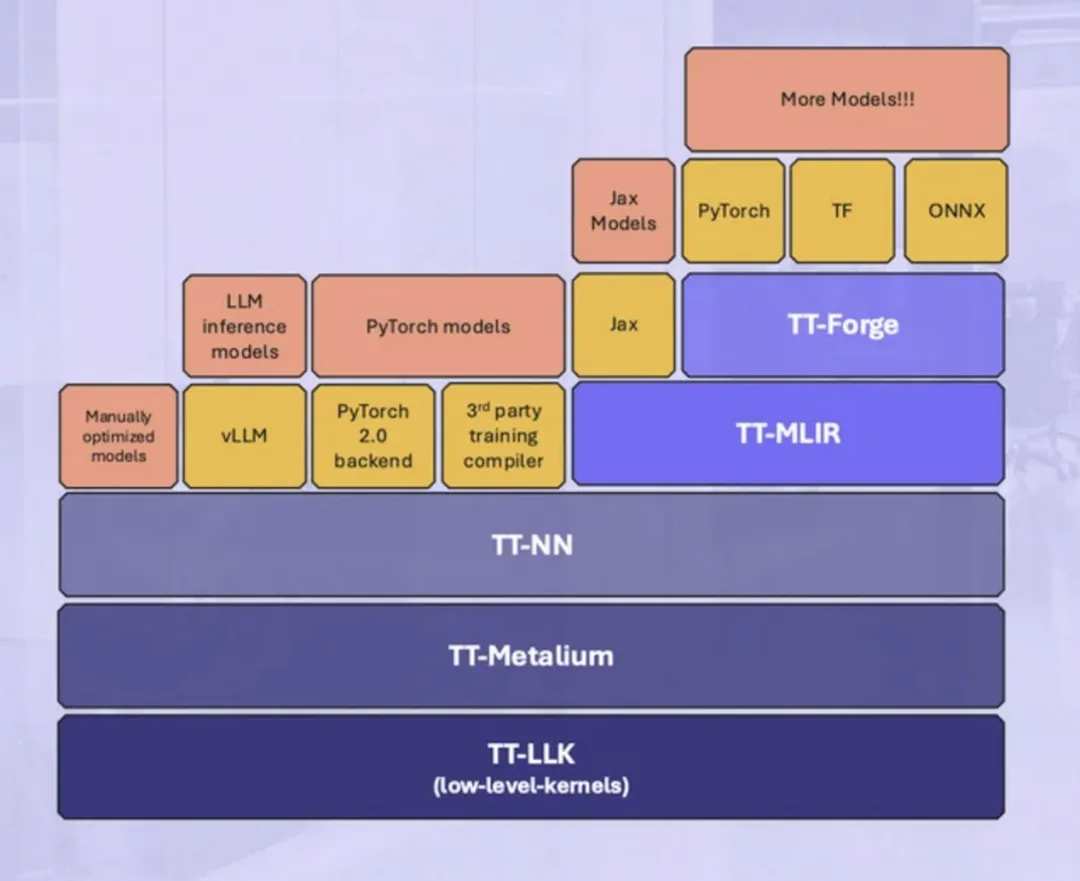

In addition to the new chip, Tenstorrent also unveiled its TT-Metalium low-level programming model for its accelerators.

Anyone familiar with Nvidia's CUDA platform knows that software can make or break even the highest-performing hardware. TT-Metalium somewhat resembles GPU programming models such as CUDA or OpenCL in that it is heterogeneous, but it differs in being built for "AI and scale-out" computing, Capalija explained.

One distinction is that the kernels themselves are plain C++ with APIs. "We believe there is no need for a special kernel language," he explained.

Combined with other software libraries such as TT-NN, TT-MLIR, and TT-Forge, Tenstorrent aims to support running any AI model on its accelerators through popular frameworks and runtimes such as PyTorch, ONNX, JAX, TensorFlow, and vLLM.

In conclusion

Many people have thought about replacing Nvidia, but it appears to be a goal that no one can easily achieve. It is well known that Nvidia maintains its lead not only through advanced hardware but also through its software, including CUDA, which remains the foundation of its monopoly today.

However, Jim Keller once said, "CUDA is not a moat, but a swamp." He also believes that GPUs are not the only way to run AI.

"I hope to help clients build their own products, which is a really cool thing. You can own and control it without paying someone else a gross margin of 60% or 80%. So when people tell us that Nvidia has already won and ask why Tenstorrent is competing, it's because as long as there is a monopoly with extremely high profit margins, it will create business opportunities," said Jim Keller.
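Gross margin is (price - cost) / price, so a given margin implies price = cost / (1 - margin). A small Python sketch with a hypothetical $1,000 unit cost shows what Keller's 60% and 80% figures mean in practice; the cost figure and the helper name `price_at_gross_margin` are illustrative assumptions, not data from the source.

```python
def price_at_gross_margin(unit_cost: float, margin: float) -> float:
    """Selling price that yields the given gross margin: margin = (price - cost) / price."""
    if not 0.0 <= margin < 1.0:
        raise ValueError("gross margin must be in [0, 1)")
    return unit_cost / (1.0 - margin)


UNIT_COST = 1_000.0  # hypothetical cost to build one accelerator, in dollars

for margin in (0.60, 0.80):
    price = price_at_gross_margin(UNIT_COST, margin)
    print(f"{margin:.0%} gross margin: ${price:,.0f} ({price / UNIT_COST:.1f}x cost)")
```

A 60% margin means paying 2.5 times what the hardware costs to build, and 80% means paying 5 times, which is the gap Keller argues customers can close by owning their own designs.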

In my view, how Amazon goes on to compete with Nvidia will also be an interesting topic to watch.

Editor/Rocky
