As the autonomous driving industry heats up, good news is coming from "Master Lou" (Lou Tiancheng, Pony.ai's co-founder and CTO).

Having filed its prospectus for a Nasdaq listing, Pony.ai is now counting down to its bell-ringing ceremony.

Over eight years, a team of prodigies has built Pony.ai into a star unicorn of autonomous driving on the strength of solid business capabilities and leading technology.

Pony.ai's prospectus also doubles as a thorough, detailed primer on autonomous driving technology.


According to the prospectus, Pony.ai's autonomous driving system can be broken down into several parts. Let's go through them one by one.

Sensors

To drive like a human, an autonomous vehicle must perceive road conditions as clearly as a human driver does, and that is the job of its sensors:

Lidar

Lidar, as the name suggests, uses laser beams to detect objects around the vehicle, providing high-resolution distance measurements under all lighting conditions.

Mounting multiple lidars at different positions lets the vehicle observe other vehicles, pedestrians, traffic lights, and more, producing a real-time three-dimensional image of its surroundings.
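To make the ranging idea concrete, here is a minimal Python sketch, not Pony.ai's implementation, of how a generic time-of-flight lidar turns one laser return into a 3D point. It assumes the sensor reports the pulse's round-trip time plus the beam's azimuth and elevation; the function names and the axis convention are hypothetical.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def tof_to_range(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def return_to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range, azimuth, elevation) to x, y, z in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth measured from x toward y.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


if __name__ == "__main__":
    r = tof_to_range(200e-9)  # a 200 ns round trip
    print(f"range: {r:.2f} m")  # range: 29.98 m
    print(return_to_point(r, 30.0, -2.0))
```

Repeating this for every beam and firing yields the point cloud. Merging the clouds of several lidars additionally requires each sensor's mounting pose (extrinsic calibration), which the sketch leaves out.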

Cameras

Multiple high-resolution cameras give the vehicle a comprehensive, all-around view of its surroundings, eliminating major blind spots, helping it identify obstacles, and extending how far it can see traffic conditions.

Radar

Radar measures the distance and speed of vehicles by emitting radio waves, and it outperforms lidar and cameras in harsh weather such as rain, snow, and fog.
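The speed measurement relies on the Doppler effect: a target moving toward the radar shifts the frequency of the reflected wave. The sketch below shows the textbook relation between Doppler shift and radial speed; the 77 GHz carrier is an assumption (a common automotive radar band), not a figure from the prospectus, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0
DEFAULT_CARRIER_HZ = 77e9  # assumed automotive radar band


def radial_speed_from_doppler(doppler_hz: float, carrier_hz: float = DEFAULT_CARRIER_HZ) -> float:
    """Radial speed of the target; positive means it is closing in on the radar."""
    wavelength_m = SPEED_OF_LIGHT_MPS / carrier_hz
    # The signal covers the radar-target distance twice (out and back), hence the factor 2.
    return doppler_hz * wavelength_m / 2.0


def doppler_from_radial_speed(speed_mps: float, carrier_hz: float = DEFAULT_CARRIER_HZ) -> float:
    """Inverse relation: the Doppler shift a target at this radial speed would produce."""
    wavelength_m = SPEED_OF_LIGHT_MPS / carrier_hz
    return 2.0 * speed_mps / wavelength_m


if __name__ == "__main__":
    shift = doppler_from_radial_speed(20.0)  # a car closing at 20 m/s (72 km/h)
    print(f"Doppler shift: {shift / 1e3:.1f} kHz")
    print(f"recovered speed: {radial_speed_from_doppler(shift):.1f} m/s")  # 20.0 m/s
```

Note that only the radial component of the target's velocity (along the line of sight) is observed; motion across the beam produces no Doppler shift.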

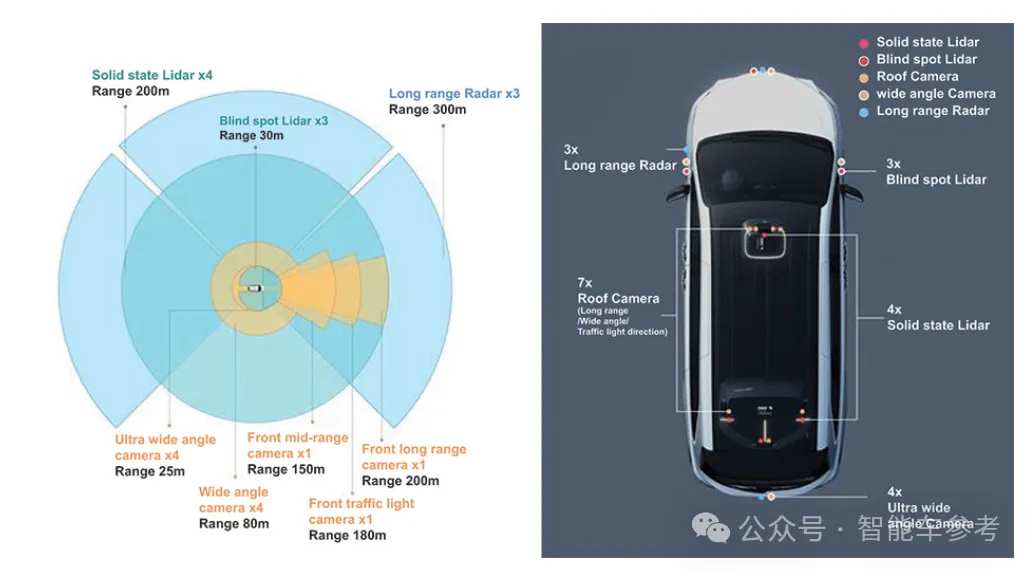

Pony.ai's latest, sixth-generation autonomous driving system combines all three sensor types, with 7 lidars, 11 high-resolution cameras, and 3 long-range radars, so that the strengths of each offset the weaknesses of the others for more effective environmental perception.
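One standard way to let sensors complement each other is inverse-variance weighting: each sensor's estimate counts in proportion to how confident it is. The prospectus does not say Pony.ai fuses data this way (its perception stack is a learned model), so treat this as a generic illustration; the function name and the sensor readings and noise figures are invented.

```python
from math import sqrt


def fuse_estimates(estimates):
    """Inverse-variance weighted fusion.

    estimates: list of (value, std_dev) pairs for the same quantity.
    Returns (fused_value, fused_std_dev).
    """
    weights = [1.0 / (std * std) for _, std in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, sqrt(1.0 / total)


if __name__ == "__main__":
    # Hypothetical distance-to-obstacle readings (metres) in fog:
    # the camera is badly degraded, so its std_dev is large.
    readings = [
        (40.2, 0.1),  # lidar
        (43.0, 3.0),  # camera (fog)
        (40.5, 0.5),  # radar
    ]
    value, std = fuse_estimates(readings)
    print(f"fused distance: {value:.2f} m +/- {std:.2f} m")
```

The fused uncertainty is never larger than the best single sensor's, and a degraded sensor is automatically down-weighted rather than switched off.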

But what should be done if a single sensor in the sensor assembly fails?

Besides sensing the environment, the vehicle must also know precisely where it is, so Pony.ai also uses GNSS antenna modules, inertial measurement units (IMUs), and other equipment.

GNSS / IMU

A high-precision Global Navigation Satellite System (GNSS) receiver and an inertial measurement unit (IMU) work together with high-definition maps and the localization module to determine the vehicle's exact position.
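GNSS provides absolute but noisy, low-rate position fixes, while an IMU provides smooth, high-rate motion data that drifts over time; a Kalman filter is the classic way to combine the two. The one-dimensional sketch below is a textbook illustration, not Pony.ai's localization module: it assumes the IMU data has already been integrated into a velocity, and the class name and noise values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PositionFilter1D:
    """Minimal 1D Kalman filter: IMU-derived velocity drives prediction, GNSS corrects it."""
    x: float  # position estimate along the road (m)
    p: float  # variance of the estimate (m^2)
    q: float  # process noise added per prediction step (m^2), models IMU drift

    def predict(self, velocity_mps: float, dt_s: float) -> None:
        # Dead reckoning: move by velocity * dt; uncertainty grows.
        self.x += velocity_mps * dt_s
        self.p += self.q

    def update(self, gnss_position_m: float, gnss_variance_m2: float) -> None:
        # Kalman gain: how much to trust the GNSS fix relative to the prediction.
        k = self.p / (self.p + gnss_variance_m2)
        self.x += k * (gnss_position_m - self.x)
        self.p *= 1.0 - k


if __name__ == "__main__":
    f = PositionFilter1D(x=0.0, p=1.0, q=0.01)
    for _ in range(10):  # ten 10 ms IMU steps at 15 m/s
        f.predict(velocity_mps=15.0, dt_s=0.01)
    f.update(gnss_position_m=1.8, gnss_variance_m2=2.0)  # one noisy GNSS fix
    print(f"position {f.x:.3f} m, variance {f.p:.3f} m^2")
```

A production system would track full 3D position, velocity, and orientation (typically with an extended or error-state Kalman filter) and, as the text notes, also match against high-definition maps.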

End-to-end software technology stack

In the prospectus, Pony.ai stresses that its autonomous driving technology stack can be thought of as an "intellect" that controls the vehicle through a complete set of software modules and algorithms. This intellect, or AI driver, is not tied to any particular vehicle platform and integrates multiple types of sensors and algorithm modules.

Pony.ai also emphasizes that the end-to-end process must be explainable, so it adopts a modular design that still includes perception, prediction, planning and control, simulation, and other modules, each explained in detail below:

End-to-end driving closed-loop evolution

First, consider Pony.ai's end-to-end model, which simulates how the vehicle behaves in the real world by integrating a learnable metric space. Through intelligent labeling and feature extraction, knowledge from large language models (LLMs) is transferred into the end-to-end model, expanding the originally limited training resources and helping the system handle complex driving conditions.

Unlabeled data continues to train the world model and the end-to-end model through a self-supervised interpretation model, which explains the end-to-end inference results: perception outputs, prediction outputs, the specific decisions made, detailed scene descriptions, and more.

This comprehensive interpretability allows for a deeper understanding of the functionality and decision-making processes of autonomous systems.

To ensure adaptability and accuracy, Pony.ai has also incorporated a learnable optimization model that combines model-based and optimization methods, making the system data-driven while retaining controllability.

Moreover, reaching a true L4 system takes more than predicting the future from current and past data. The real challenge is matching the frequency of events in the real world and simulating long-tail scenarios and behaviors so that development can iterate continuously.

This requires a high-fidelity environment for simulating real-world scenarios, which is the role of Pony.ai's closed-loop simulation engine, PonyWorld.

PonyWorld faithfully reproduces real-world conditions in both visual detail and dynamic response, letting the system push past its functional boundaries by simulating critical scenarios such as a child suddenly appearing, an uncovered manhole, or debris falling from a vehicle ahead.

The system uses records of past events, together with what is already known to have happened next, to make reasonable inferences about various future scenarios.

When the autonomous vehicle's future actions match these records, the ground-truth-conditioned generation model reproduces the recorded future accurately; when its actions deviate from the records, the model reconstructs the key behaviors that differ from the records so that the simulation remains credible.
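The switch between replaying a record and reconstructing behavior can be illustrated with a toy example. In the hypothetical sketch below, a car following the ego vehicle is replayed from a log while the simulated ego stays close to its logged path; once the ego deviates (here, by braking harder than the human driver did), the follower switches to a simple reactive rule that keeps a minimum gap. PonyWorld's actual generative model is far richer; the function name, tolerance, and gap rule are all assumptions.

```python
def simulate_follower(logged_ego, logged_follower, planned_ego,
                      tolerance_m=0.5, min_gap_m=8.0):
    """Positions (m along the lane) of a car following the ego vehicle.

    logged_ego / logged_follower: recorded positions per time step.
    planned_ego: positions the autonomous system actually chose in simulation.
    """
    follower = []
    reactive = False
    for t, ego_s in enumerate(planned_ego):
        # Once the ego leaves its recorded path, the log no longer describes
        # a consistent future, so stop replaying it for the rest of the run.
        if abs(ego_s - logged_ego[t]) > tolerance_m:
            reactive = True
        if reactive:
            # Hypothetical reactive rule: follow the log, but never get closer
            # than min_gap_m to the ego (a toy stand-in for a behavior model).
            follower.append(min(logged_follower[t], ego_s - min_gap_m))
        else:
            follower.append(logged_follower[t])
    return follower


if __name__ == "__main__":
    logged_ego = [0.0, 10.0, 20.0, 30.0]
    logged_follower = [-10.0, 0.0, 10.0, 20.0]
    planned_ego = [0.0, 10.0, 15.0, 18.0]  # the planner brakes harder than the human did
    print(simulate_follower(logged_ego, logged_follower, planned_ego))  # [-10.0, 0.0, 7.0, 10.0]
```

Without the switch, replaying the log verbatim would put the follower at 20 m while the braking ego sits at 18 m, an impossible overlap that would make the simulation useless for judging that decision.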

Perception and Prediction

Autonomous driving requires a "virtual driver" that can "see" the environment around the vehicle and respond in time, which calls for the ability to perceive and predict.

Pony.ai's perception and prediction module uses a large, multimodal, multitask Transformer framework that can be adapted quickly.

For perception, fast-learning techniques fuse multiple input modalities, such as point clouds, images, and electromagnetic responses, so that a single model can accurately detect many kinds of objects while substantially reducing latency.

By processing data collected from sensor components, the perception module automatically completes object segmentation, detection, classification, tracking, and scene understanding.

Even in extreme weather with poor visibility, this capability lets the vehicle perceive its surroundings without obstruction while driving, allowing it to outperform human drivers.

To further improve the perception module, deep learning is combined with heuristic methods: human knowledge and common sense are used to add deterministic mathematical formulas and rules at the decision layer, bridging the gap between simulation and reality that deep learning alone leaves.

For prediction, the module uses a multimodal deep learning model that integrates perception observations with human common sense.

This common-sense knowledge is extracted from traffic rules and human-designed prompts and represented as a knowledge graph, while the Transformer architecture captures the correlations between modalities.

The prediction module makes its judgment from several data sources: primarily sensor data, combined with the perception module's outputs and historical decision experience from similar road agents.

To account for unexpected situations, the dataset goes beyond routine records and adds extra learnable, targeted prompts to the prediction module for each situation.

Like the perception module, the prediction module combines deep learning with heuristic methods. It produces a predicted trajectory for each observed road agent together with its probability of occurring, which other modules use as a reference.
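A "trajectory plus probability" output is usually multimodal: several candidate futures per agent, each with a likelihood. The sketch below mimics only that output format. In a real system a learned model would produce both the trajectories and the scores; here the mode scores are hypothetical inputs, the trajectories come from a simple constant-speed, constant-yaw-rate rollout, and a softmax turns the scores into probabilities.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rollout(x, y, speed, heading_rad, yaw_rate, horizon_s=3.0, dt_s=0.5):
    """Constant speed and yaw-rate motion, sampled every dt_s seconds."""
    points = []
    for _ in range(round(horizon_s / dt_s)):
        heading_rad += yaw_rate * dt_s
        x += speed * math.cos(heading_rad) * dt_s
        y += speed * math.sin(heading_rad) * dt_s
        points.append((x, y))
    return points


def predict_modes(x, y, speed, heading_rad, modes):
    """modes: {name: (yaw_rate, score)}; scores would come from a learned model."""
    names = list(modes)
    probs = softmax([modes[n][1] for n in names])
    return {
        n: (p, rollout(x, y, speed, heading_rad, modes[n][0]))
        for n, p in zip(names, probs)
    }


if __name__ == "__main__":
    # Hypothetical scores for a car approaching a junction at 10 m/s.
    modes = {"straight": (0.0, 2.0), "turn_left": (0.3, 1.0), "turn_right": (-0.3, 0.0)}
    for name, (prob, path) in predict_modes(0.0, 0.0, 10.0, 0.0, modes).items():
        print(f"{name}: p={prob:.2f}, end point=({path[-1][0]:.1f}, {path[-1][1]:.1f})")
```

Keeping several weighted futures, rather than a single best guess, lets the planner hedge against a less likely but dangerous maneuver.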

Planning and control

Once perception and prediction are complete, the system must plan and execute maneuvers based on that input.

Pony.ai builds its planning and control module with AI, drawing on methods from game theory.

Game theory is used to simulate and analyze interactions between the vehicle and other road users. For example, when an autonomous vehicle and a human-driven vehicle approach an intersection at the same time, game theory helps the autonomous vehicle choose the best route, accelerate and decelerate smoothly, or change lanes appropriately, arriving at an optimal action. This is especially useful at rush hour and on congested roads.
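The intersection example can be framed as a two-player game. The sketch below, a textbook illustration rather than Pony.ai's planner, encodes "go" and "yield" for the autonomous vehicle (AV) and the human driver with invented payoffs, then finds the pure-strategy Nash equilibria: outcomes where neither party gains by changing only its own action. The names `PAYOFFS` and `pure_nash_equilibria` are hypothetical.

```python
from itertools import product

ACTIONS = ("go", "yield")

# PAYOFFS[(av_action, human_action)] = (av_payoff, human_payoff); values are invented.
PAYOFFS = {
    ("go", "go"): (-100, -100),   # collision
    ("go", "yield"): (10, -1),    # AV passes, human waits briefly
    ("yield", "go"): (-1, 10),
    ("yield", "yield"): (-2, -2), # both hesitate
}


def pure_nash_equilibria(payoffs, actions=ACTIONS):
    """Action pairs where neither player can do better by deviating alone."""
    equilibria = []
    for av_act, hu_act in product(actions, repeat=2):
        av_pay, hu_pay = payoffs[(av_act, hu_act)]
        av_best = all(payoffs[(alt, hu_act)][0] <= av_pay for alt in actions)
        hu_best = all(payoffs[(av_act, alt)][1] <= hu_pay for alt in actions)
        if av_best and hu_best:
            equilibria.append((av_act, hu_act))
    return equilibria


if __name__ == "__main__":
    print(pure_nash_equilibria(PAYOFFS))  # [('go', 'yield'), ('yield', 'go')]
```

Both "AV goes, human yields" and the reverse are equilibria, which is why a planner needs more information (right of way, the other driver's observed behavior, predicted intent) to choose between them; a joint "go" is never stable with these payoffs because the collision cost dominates.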

At the same time, to make its driving more humanlike, the decision-maker is tuned using reinforcement learning from human feedback (RLHF).

Human labelers rate the system's safety, comfort, and efficiency across a variety of situations. This feedback is used to train reward functions, which in turn tune the deep learning decisions on larger datasets.
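A common way to turn pairwise human judgments into a reward function, also used in RLHF for language models, is the Bradley-Terry preference model: the probability that labelers prefer trajectory A over B is modeled as sigmoid(r(A) - r(B)). The sketch below fits a linear reward by gradient ascent on that likelihood. The prospectus does not specify Pony.ai's reward model; the three features, the preference pairs, and the function names are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def reward(weights, features):
    """Linear reward: a weighted sum of trajectory features."""
    return sum(w * f for w, f in zip(weights, features))


def train_reward(pairs, n_features, lr=0.1, epochs=200):
    """Fit weights so that labeler-preferred trajectories score higher.

    pairs: list of (preferred_features, rejected_features).
    Maximizes sum(log sigmoid(r(preferred) - r(rejected))) (Bradley-Terry).
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(w, preferred) - reward(w, rejected)
            scale = 1.0 - sigmoid(margin)  # derivative of log sigmoid(margin)
            for i in range(n_features):
                w[i] += lr * scale * (preferred[i] - rejected[i])
    return w


if __name__ == "__main__":
    # Hypothetical features: [min gap to others (m), peak jerk (m/s^3), extra trip time (min)]
    pairs = [
        ([2.0, 0.5, 1.0], [0.5, 0.5, 0.8]),  # safer preferred, even if slightly slower
        ([1.5, 0.2, 1.0], [1.5, 2.0, 1.0]),  # smoother preferred
        ([2.0, 0.5, 0.2], [2.0, 0.5, 1.0]),  # faster preferred when otherwise equal
    ]
    weights = train_reward(pairs, n_features=3)
    print("learned weights:", [round(w, 2) for w in weights])
```

Once fitted, the reward can score any trajectory, so a comparatively small set of human comparisons can guide the decision-maker across far larger datasets, as the text describes.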

Hardware and vehicle integration

Having covered the software, we now turn to the hardware of autonomous vehicles and how the parts are integrated.

Computing system

The data collected by the sensors is processed by the onboard computing system, which runs the driving algorithms in real time.

Pony.ai's autonomous driving computing unit (ADCU) uses a heterogeneous computing architecture that combines a central processing unit (CPU), graphics processing unit (GPU), field-programmable gate array (FPGA), and microcontroller unit (MCU). It is a fully automotive-grade computing platform on which a computing architecture fully suited to autonomous driving applications can be readily defined.

With the ADCU platform, Pony.ai can fine-tune the balance between performance and resource consumption. As new technologies emerge, the ADCU can also be adjusted and upgraded more easily, giving it strong flexibility and scalability.

Vehicle integration

The final link in autonomous driving is to integrate each part of the system into the vehicle.

Pony.ai's solution is built on automotive-grade hardware and software toolchains and includes regulatory systems.

Through reliable interfaces between the autonomous driving software stack and the vehicle platform, the vehicle platform can accurately receive and execute control commands.

Across modules, the onboard system provides a unified application programming interface (API) so that data flows stably and smoothly along the entire transmission path, while an onboard monitoring system promptly detects potential failures in each module.
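Timely failure detection in a monitoring system is often built on heartbeats: each module reports in periodically, and one that stays silent too long is flagged. The sketch below is a generic, hypothetical illustration (the class name, module names, and 100 ms timeout are assumptions); timestamps are passed in explicitly so the logic is deterministic and easy to test.

```python
class HeartbeatMonitor:
    """Flags modules whose last heartbeat is older than timeout_s."""

    def __init__(self, timeout_s: float):
        self.timeout_s = timeout_s
        self.last_seen: dict[str, float] = {}

    def beat(self, module: str, now_s: float) -> None:
        """Record that `module` reported in at time now_s."""
        self.last_seen[module] = now_s

    def stale_modules(self, now_s: float) -> list[str]:
        """Modules that have been silent for longer than the timeout."""
        return sorted(m for m, t in self.last_seen.items() if now_s - t > self.timeout_s)


if __name__ == "__main__":
    monitor = HeartbeatMonitor(timeout_s=0.1)
    monitor.beat("perception", now_s=0.00)
    monitor.beat("planning", now_s=0.05)
    print(monitor.stale_modules(now_s=0.12))  # ['perception']
```

A module the monitor has never heard from is not flagged by this sketch; a fuller design would register the expected modules up front.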

The final and most important layer of protection is safety redundancy.

In Pony.ai's vehicles, the redundant platform ensures safety through redundant sensors, computing systems, power supplies, and actuators, eliminating single points of failure.

In the computing system, for example, different processors cross-check one another and serve as mutual backups; if an error occurs, certain algorithms running on the GPU fall back to the CPU.

Likewise, if the main power system fails, the backup power system takes over seamlessly, keeping the computing system powered and the vehicle running normally.
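The processor cross-check with GPU-to-CPU fallback described above can be sketched as a wrapper that runs two independent implementations of the same algorithm and falls back when the primary one fails or disagrees. This is a hypothetical illustration: the function names, the tolerance, and the policy of trusting the backup on disagreement are assumptions (with only two channels, a mismatch shows that something is wrong but not which side).

```python
def run_with_cross_check(primary, backup, inputs, tolerance=1e-6):
    """Run the same computation on two independent paths and compare.

    primary: e.g. the GPU implementation; backup: e.g. a CPU implementation.
    Returns (result, source) where source is "primary" or "backup".
    """
    backup_out = backup(inputs)
    try:
        primary_out = primary(inputs)
    except Exception:
        # The primary path crashed: fall back to the backup result.
        return backup_out, "backup"
    mismatch = len(primary_out) != len(backup_out) or any(
        abs(a - b) > tolerance for a, b in zip(primary_out, backup_out)
    )
    # Assumed policy: on disagreement, trust the backup and flag the primary.
    return (backup_out, "backup") if mismatch else (primary_out, "primary")


if __name__ == "__main__":
    def cpu_scale(xs):
        return [2.0 * x for x in xs]

    def faulty_gpu_scale(xs):
        # Simulated corruption in the second element.
        return [2.0 * x + (1.0 if i == 1 else 0.0) for i, x in enumerate(xs)]

    print(run_with_cross_check(cpu_scale, cpu_scale, [1.0, 2.0]))         # ([2.0, 4.0], 'primary')
    print(run_with_cross_check(faulty_gpu_scale, cpu_scale, [1.0, 2.0]))  # ([2.0, 4.0], 'backup')
```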

Redundancy is organized into three modes: normal running mode, degraded safe mode, and minimal risk condition mode.

The degraded mode and minimal risk condition mode operate on physically independent redundant platforms, which include redundant sensors and computing.

If a fault occurs during normal running, the platform detects it and switches the system to degraded safe mode, allowing the vehicle to drive to a safe location.

If a serious fault occurs that cannot be handled even in degraded safe mode, the system triggers minimal risk condition mode and brings the vehicle to a stop in its lane without a collision.
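The escalation between the three modes behaves like a small state machine. The hypothetical sketch below encodes the transitions described above; the enum, the function, and the assumption that the system never returns to normal mode on its own while driving are illustrative choices, not details from the prospectus.

```python
from enum import Enum


class Mode(Enum):
    NORMAL = "normal running"
    DEGRADED_SAFE = "degraded safe: drive to a safe location"
    MINIMAL_RISK = "minimal risk condition: stop in lane"


def next_mode(current: Mode, fault_detected: bool, degraded_can_handle: bool) -> Mode:
    """One step of the escalation logic.

    degraded_can_handle: whether the independent redundant platform can still
    drive the vehicle despite the detected fault.
    """
    # Minimal risk is terminal, and with no new fault the mode is kept
    # (assumption: no automatic recovery back to NORMAL while driving).
    if current is Mode.MINIMAL_RISK or not fault_detected:
        return current
    return Mode.DEGRADED_SAFE if degraded_can_handle else Mode.MINIMAL_RISK


if __name__ == "__main__":
    mode = Mode.NORMAL
    # (fault_detected, degraded_can_handle) per monitoring cycle; hypothetical events
    for fault, handled in [(False, True), (True, True), (False, True), (True, False)]:
        mode = next_mode(mode, fault, handled)
        print(mode.name)  # NORMAL, DEGRADED_SAFE, DEGRADED_SAFE, MINIMAL_RISK
```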

Finally, for the vehicles that carry all of this, Pony.ai has chosen to partner with automakers to jointly design and test the vehicles and to set up an integrated, streamlined assembly line.

Pony.ai's current autonomous vehicles, developed in cooperation with Toyota, are now in their sixth generation and began deployment in a public robotaxi service in July 2023.

Most recently, the seventh-generation autonomous driving hardware and software system has entered the R&D validation phase. On November 2, Pony.ai signed an agreement with BAIC New Energy to fit the seventh-generation system to the ARCFOX Alpha T5, with the first batch of ARCFOX Alpha T5 robotaxis expected to be completed and launched in 2025.

Today, autonomous driving companies are racing to commercialize. Who will get there first?
