Source: Semiconductor Industry Watch

At yesterday's Computex conference, Dr. Lisa Su released AMD's latest roadmap. Afterwards, foreign media outlet morethanmoore published the content of Lisa Su's post-conference interview, which we have translated and summarized as follows:

Q: How does AI help you personally in your work?

A: AI affects everyone's life. Personally, I am a loyal user of GPT and Copilot. I am very interested in the AI used internally at AMD. We often talk about customer AI, but we also prioritize AI internally because it can make our company better, for example by helping us make better chips faster. We hope to integrate AI into the development process, as well as marketing, sales, human resources, and every other area. AI will be ubiquitous.

Q: NVIDIA has explicitly told investors that it plans to shorten its development cycle to once a year, and now AMD plans to do the same. How and why are you doing this?

A: This is what we see in the market. AI is our company's top priority. We are drawing on the development capabilities of the entire company and increasing investment. There will be something new every year, because the market needs updated products and more features. The product portfolio can address a variety of workloads. Not every customer will use every product, but there will be something new every year, and it will be the most competitive. This takes investment and making sure that hardware and software systems are part of it, and we are committed to making AI our biggest strategic opportunity.

Q: In the PC world, the TOPS count of Strix Point (Ryzen AI 300) has increased significantly. TOPS cost money. How do you weigh TOPS against CPU/GPU?

A: Nothing is free, especially in designs where power and cost are limited. What we see is that AI will be ubiquitous. Currently, Copilot+ PCs and Strix have more than 50 TOPS and will start at the top of the stack, but AI will run through our entire product stack. At the high end, we will expand TOPS because we believe that the more local TOPS there are, the more capable the AI PC becomes. Putting it on the chip increases its value and helps offload part of the computing from the cloud.

Q: Last week, you said that AMD will produce 3nm chips using GAA. Samsung foundry is the only one that produces 3nm GAA. Will AMD choose Samsung foundry for this?

A: Refer to last week's keynote address at imec. What we said is that AMD will always use the most advanced technology. We will use 3nm. We will use 2nm. We did not name a supplier for 3nm or GAA. Our cooperation with TSMC is currently very strong; we talked about the 3nm products we are developing now.

Q: On sustainability: AI means more power consumption. As a chip supplier, is it possible to optimize the power consumption of devices that use AI?

A: In everything we do, and especially in AI, energy efficiency is as important as performance. We are studying how to improve energy efficiency in every future generation of products. We have said that we will improve energy efficiency by 30 times between 2020 and 2025, and we expect to exceed this goal. Our current goal is to increase energy efficiency by 100 times in the next 4-5 years. So yes, we can focus on energy efficiency, and we must, because it will become a limiting factor for future computing.

Q: We had CPUs before, then GPUs, and now we have NPUs. First, how do you see the scalability of NPUs? Second, what is the next big chip? A neuromorphic chip?

A: You need the right engine for each workload. CPUs are very well suited to traditional workloads. GPUs are very well suited to gaming and graphics tasks. NPUs provide AI-specific acceleration. As we move forward and research new acceleration technologies, we will see some of these technologies evolve, but ultimately it is driven by applications.

Q: You initially broke Intel's status quo by increasing the number of cores. But the core count of your recent generations of products (on the consumer side) has plateaued. Is this enough for consumers and the gaming market, or should we expect more cores in the future?

A: I think our strategy is to continuously improve performance. In games especially, software developers do not always use all the cores. There is no reason we could not go beyond 16 cores. The key is to develop at a pace at which software developers can actually make use of those cores.

Q: Regarding desktops, do you think more efficient NPU accelerators are needed?

A: We see that NPUs have an impact on desktops. We have been evaluating the product segments that can use this function. You will see desktop products with NPUs in the future as we expand our product portfolio.

Author: Li Shoupeng

Recently, Dr. Lisa Su, the Chairman and CEO of AMD, posted a message on social media, saying: '10 years ago, I was fortunate to be appointed as the CEO of AMD. It has been an incredible journey with many proud moments.' Indeed, looking back at AMD's development over the past decade, it can truly be considered a miracle.

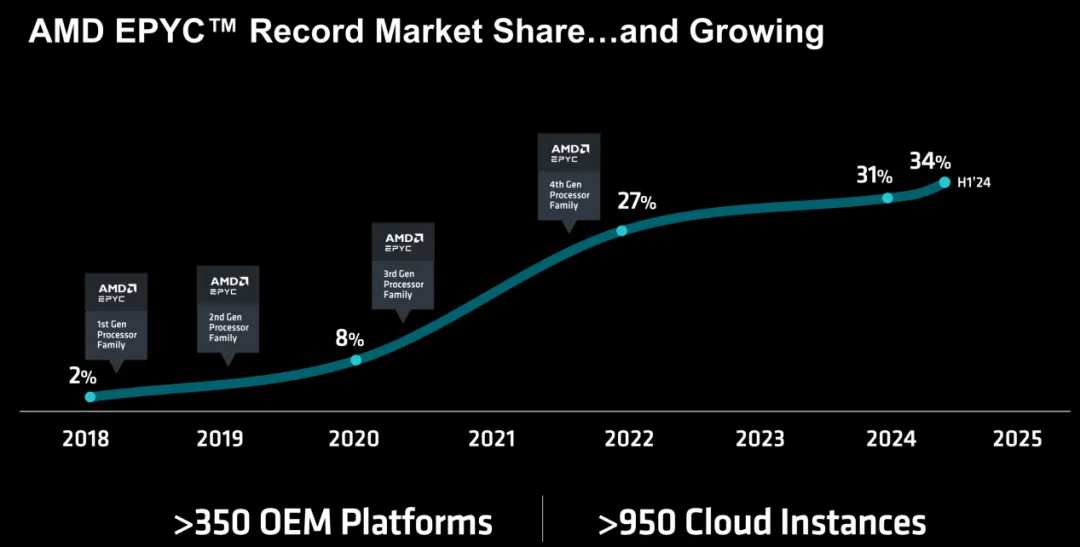

Taking the server CPU as an example, after Lisa Su took over as CEO, AMD increased its investment in this market and launched the EPYC series for the datacenter market in 2017. After seven years of development, AMD not only regained lost ground in the server CPU market but also set new highs. As shown in the following figure, in the first half of this year, the company's EPYC CPU market share reached as high as 34%, which is enough to illustrate the success of EPYC.

Besides server CPUs, AMD has also made leaps and bounds in PC CPUs, GPUs, data center GPUs, and DPUs. At the 'AMD Advancing AI 2024' summit held today in San Francisco, AMD unveiled major updates for AI PCs, data centers, and AI CPUs, GPUs, and DPUs.

Focus on the AI market, continually investing in GPUs

Among the products AMD has released in recent years, the Instinct GPU series targeted at the AI market is undoubtedly the most notable. With the popularity of GenAI represented by ChatGPT, there is a growing demand for GPUs in the market. As one of the few companies globally that can compete with NVIDIA, AMD's Instinct series is also growing rapidly.

According to Lisa Su's remarks on the earnings call in July this year, the AMD Instinct MI300X GPU brought in more than $1 billion in data center revenue in the second quarter. She also said that these accelerators are expected to generate more than $4.5 billion in revenue for AMD in fiscal 2024, exceeding the $4 billion target set in April.

To further seize the opportunity in the GenAI market, AMD detailed its updated accelerator, the Instinct MI325X, at the summit.

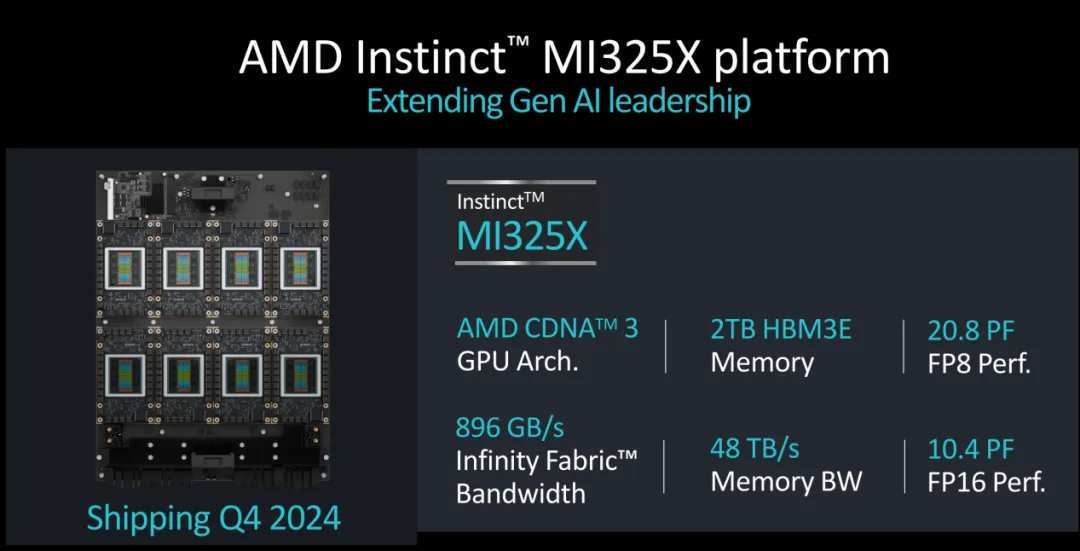

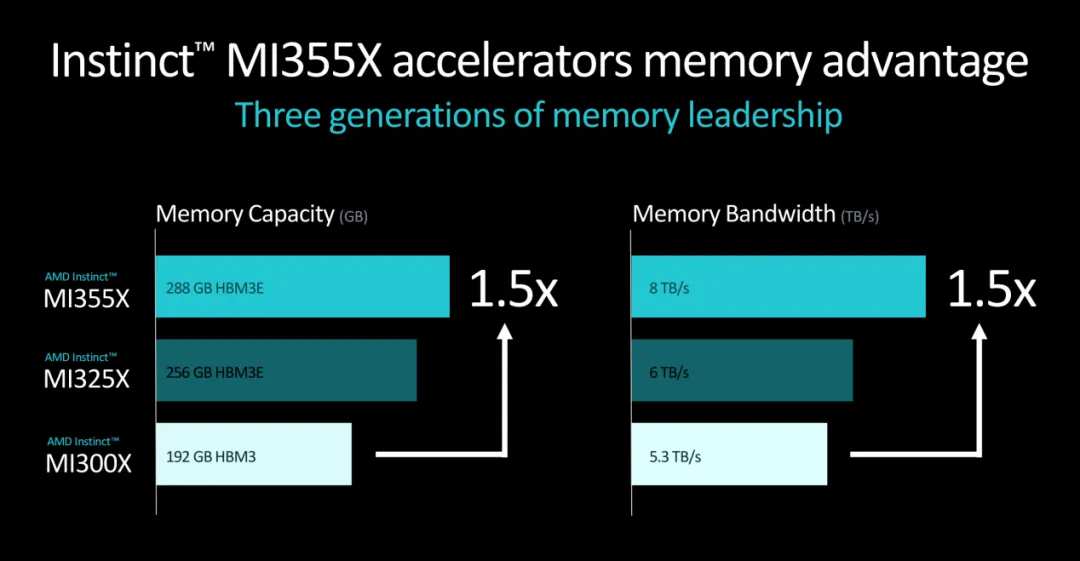

Readers who follow AMD will know that this accelerator was disclosed as early as June at Computex Taipei. As shown in the picture, the new AMD Instinct MI325X accelerator is an upgraded version of the MI300X GPU and matches it in most of its configuration, even sharing the same server board design. The difference is that the MI325X will be equipped with 288GB of HBM3E memory and 6TB/sec of memory bandwidth. In comparison, the MI300X used 192GB of HBM3 and had a memory bandwidth of only 5.2 TB/sec.

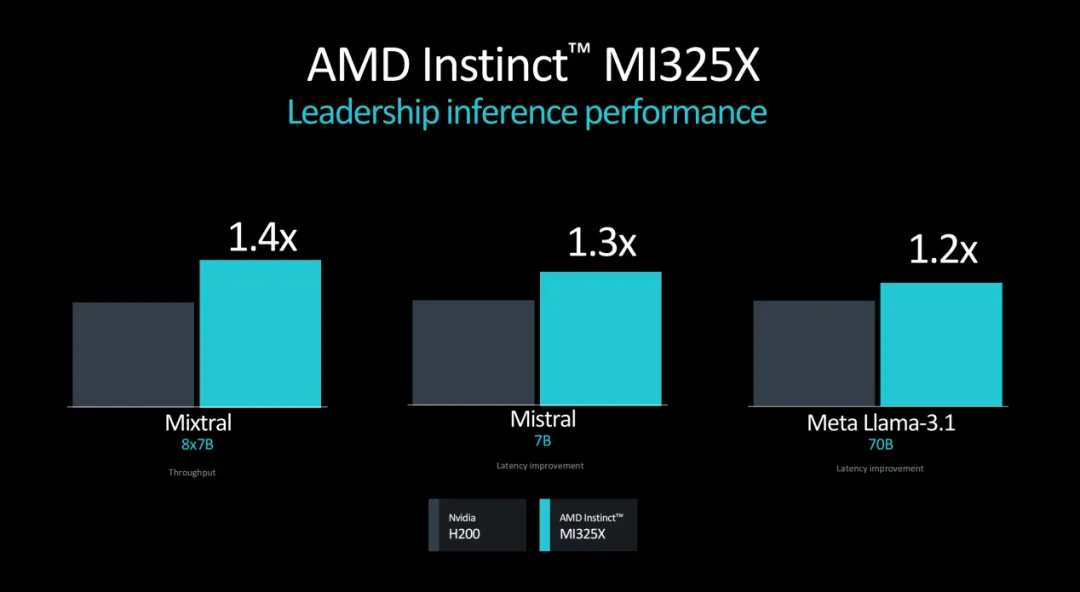

Thanks to this update, as shown in the picture above, the AMD Instinct MI325X outperforms the NVIDIA H200 in inference on multiple models. AMD also revealed that its 8-GPU OAM platform based on the MI325X will ship in Q4 this year. As shown in the picture, compared to the NVIDIA H200 HGX, the new platform leads in memory capacity (1.8 times), memory bandwidth (1.3 times), and FP16 and FP8 FLOPS (1.3 times), and reaches 1.4 times its inference performance.

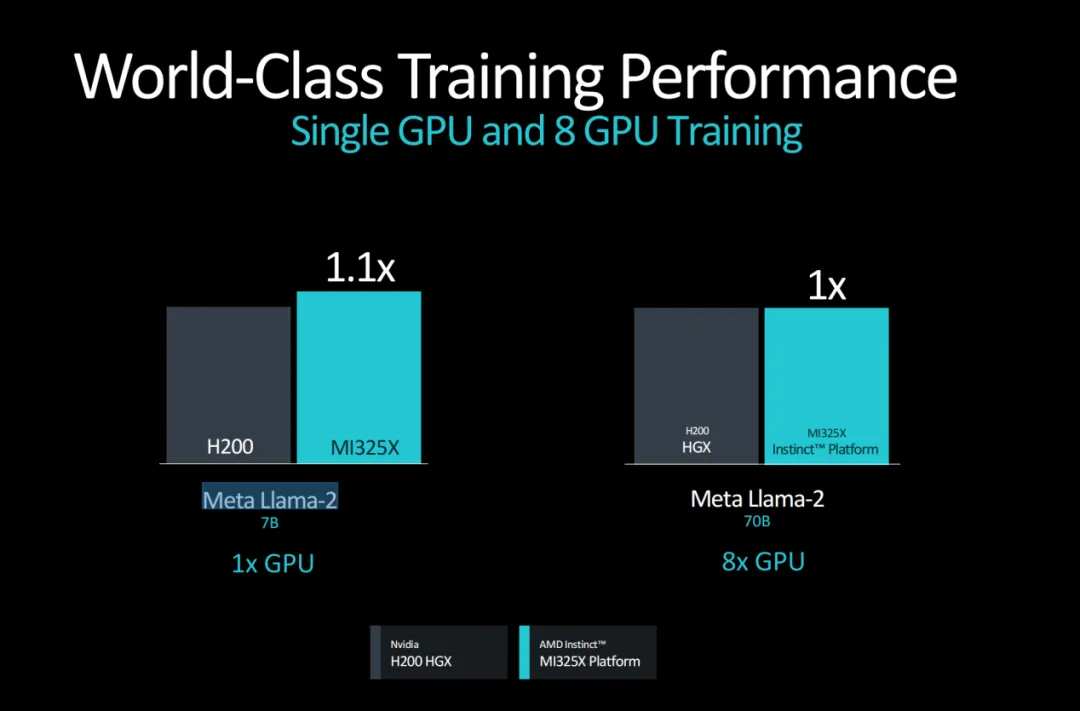

In training, the AMD Instinct MI325X platform is just as competitive with the NVIDIA H200 HGX. As shown in the picture, in both single-GPU and 8-GPU Meta Llama-2 training scenarios, its performance is on par with the NVIDIA platform, giving the company more leverage in this market.

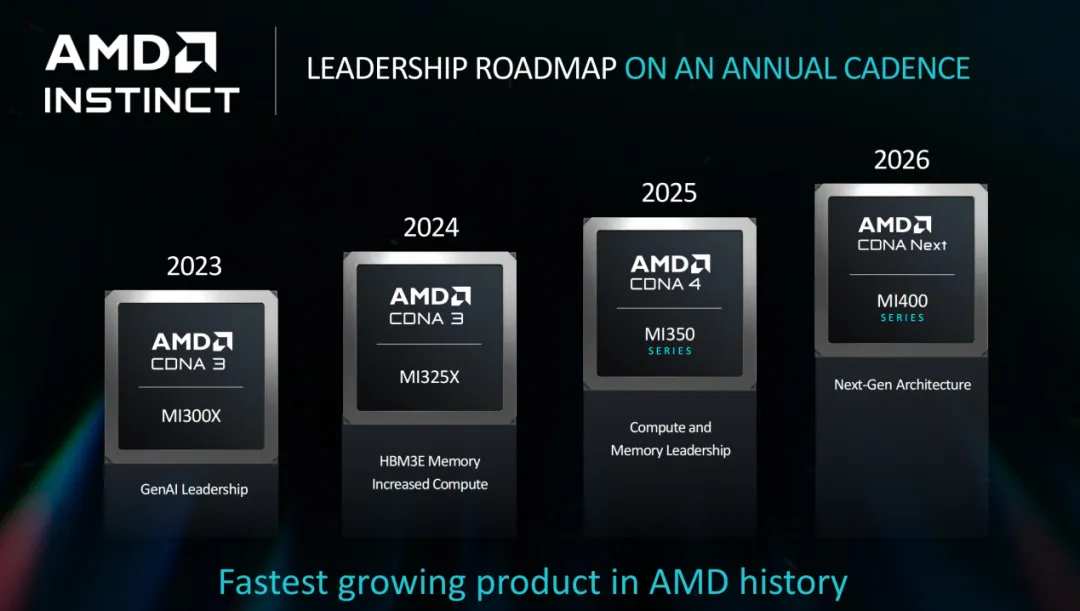

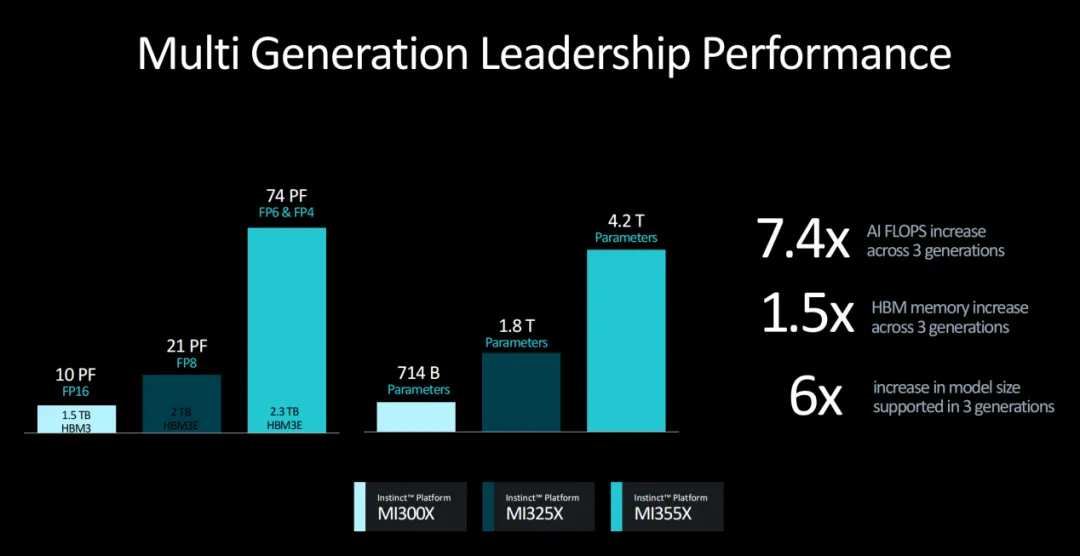

To meet the growing demand for AI computing, AMD emphasized that its Instinct accelerators will follow an annual update cadence. This means that the next generation in this series, the AMD Instinct MI350 series, may launch in 2025.

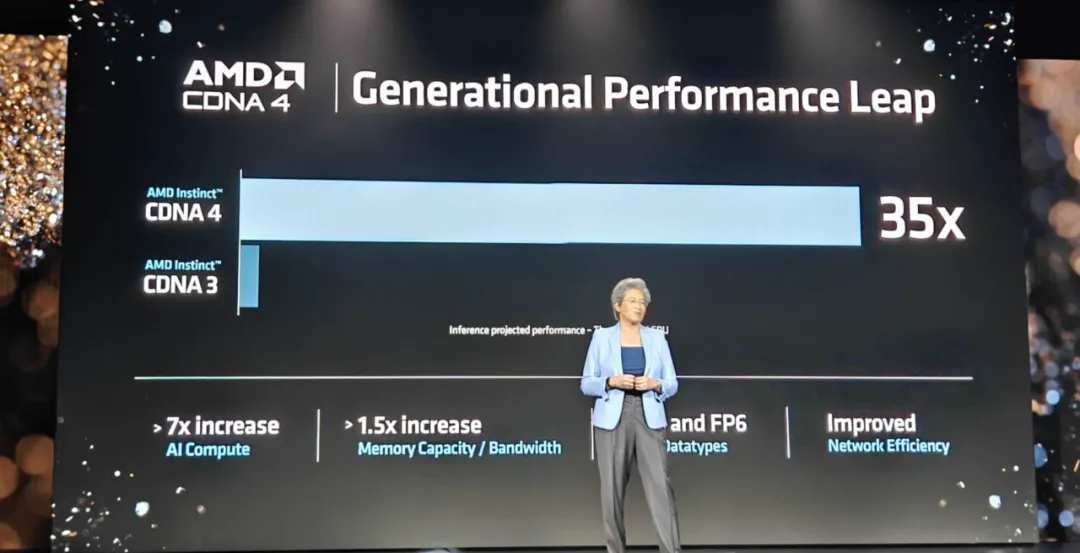

It is reported that the first product of the upcoming AMD Instinct MI350 series, the AMD Instinct MI350X accelerator, is expected to be based on the AMD CDNA 4 architecture. As shown in the picture, the new GPU architecture is expected to bring a significant performance increase over the previous generation.

Beyond the architectural gains, the new generation of Instinct GPUs uses advanced 3nm process technology, carries up to 288GB of HBM3E memory, and supports the FP4 and FP6 AI data types, further improving overall performance. In addition, because it adopts the same industry-standard baseboard server design as other MI300 series accelerators, the new GPU makes upgrades easier for customers.
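
To see why narrower data types such as FP4 and FP6 matter on a memory-limited accelerator, it helps to estimate the weights-only footprint of a model at each precision. The following is a minimal sketch, not an AMD tool: the 70-billion-parameter model size is a hypothetical example, the function names are illustrative, and the calculation ignores activations, KV cache, and any scaling metadata that real low-precision formats carry.

```python
# Weights-only memory estimate at different numeric precisions.
# Bit widths follow the data type names. The 70e9 parameter count used in
# the demo is a hypothetical model size, not a figure from AMD.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Storage needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * BITS_PER_PARAM[dtype] / 8 / 1e9


def max_params_billion(capacity_gb: float, dtype: str) -> float:
    """How many billion parameters fit in capacity_gb, counting weights only."""
    return capacity_gb * 8 / BITS_PER_PARAM[dtype]


if __name__ == "__main__":
    for dtype in BITS_PER_PARAM:
        footprint = weight_footprint_gb(70e9, dtype)
        ceiling = max_params_billion(288, dtype)  # 288GB as quoted above
        print(f"{dtype}: 70B model needs {footprint:.1f} GB; "
              f"288 GB holds about {ceiling:.0f}B parameters")
```

Halving the bit width halves the weight storage, so the same 288GB of HBM3E can in principle hold a model with twice as many parameters, or leave more room for everything else that competes for memory during inference.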

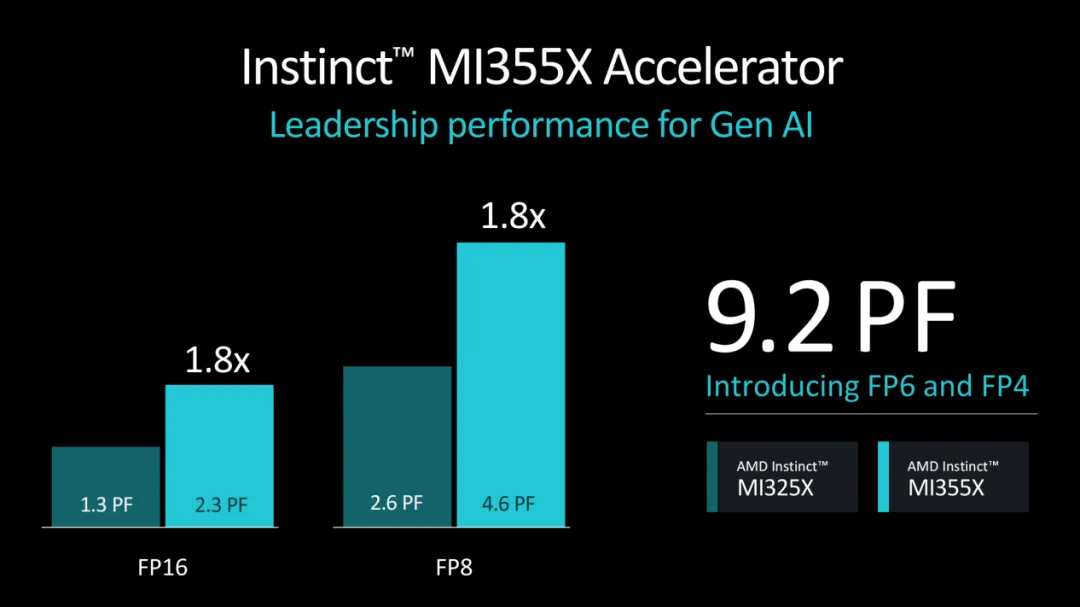

Compared to the AMD Instinct MI325X, as shown in the picture above, the new accelerator has achieved good results in many aspects. Of course, the platform built on the MI350X also performs equally well. AMD disclosed that this product is planned to be officially ready in the second quarter of next year.

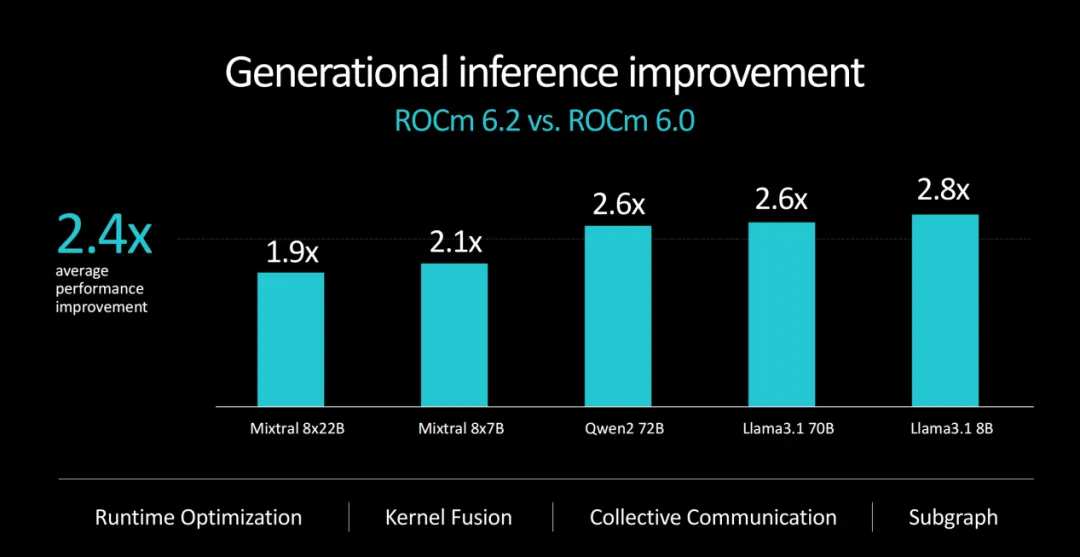

To make it easier for developers to make better use of the company's GPUs, AMD not only continues to update its ROCm series, but also closely collaborates with industry ecosystem partners.

According to AMD, the new ROCm 6.2 release now supports key AI features including the FP8 data type, Flash Attention 3, and Kernel Fusion. With these features, ROCm 6.2 delivers up to 2.4 times the inference performance of ROCm 6.0 and up to 1.8 times its performance on various LLM training tasks.

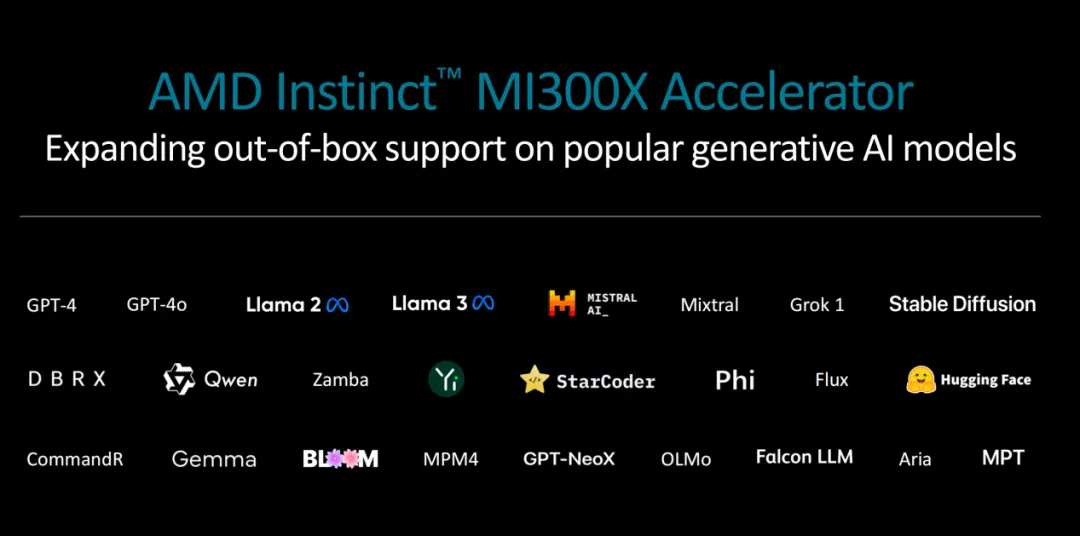

At the same time, AMD is also promoting the support of the most widely used AI frameworks, libraries, and models (including PyTorch, Triton, Hugging Face, etc.) for AMD's computing engine. This effort translates to out-of-the-box performance and support for AMD Instinct accelerators in popular generative AI models (such as Stable Diffusion, Meta Llama 3, 3.1 and 3.2, and over a million models on Hugging Face).
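
In practice, "out-of-the-box" support in PyTorch means that ROCm builds reuse the familiar `torch.cuda` namespace, so existing GPU code usually runs unchanged. The sketch below shows one way a developer might check which backend a PyTorch installation targets. It assumes a standard PyTorch build; `describe_backend` is an illustrative helper name, not part of any AMD or PyTorch API.

```python
def describe_backend() -> str:
    """Report whether the installed PyTorch build targets ROCm (HIP) or not."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip_version}" if hip_version else "non-ROCm"

    # On ROCm builds, AMD GPUs are exposed through the torch.cuda namespace.
    if torch.cuda.is_available():
        return f"{backend} build, device 0: {torch.cuda.get_device_name(0)}"
    return f"{backend} build, no GPU visible"


if __name__ == "__main__":
    print(describe_backend())
```

Because the device API is shared, model code that calls `.to("cuda")` does not need a separate AMD code path, which is a large part of what framework-level support means for developers.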

Furthermore, AMD also revealed that its recent $665 million acquisition of Silo AI will help solve the last-mile problem of AI for customers. As Vamsi Boppana, Senior Vice President of AMD's Artificial Intelligence Group (AIG), said: "The Silo AI team has developed state-of-the-art language models, which have been extensively trained on AMD Instinct accelerators. They have rich experience in developing and integrating AI models to address key customer issues. We expect their expertise and software capabilities to directly enhance the customer experience of providing the best performance AI solutions on the AMD platform."

It is worth mentioning that with this update pace, the AMD Instinct MI400 series may debut in 2026.

CPUs are not to be outdone: AMD continues to innovate

As mentioned at the beginning of the article, EPYC CPUs are the products AMD is proudest of in the datacenter market, and we outlined the series' recent development above. In addition, Ryzen CPUs for the consumer PC market have been another pillar of AMD's resurgence in the CPU market in recent years.

At today's summit, AMD also introduced CPU updates - the fifth-generation EPYC processors for datacenters, and the Ryzen AI PRO 300 series for AI PCs.

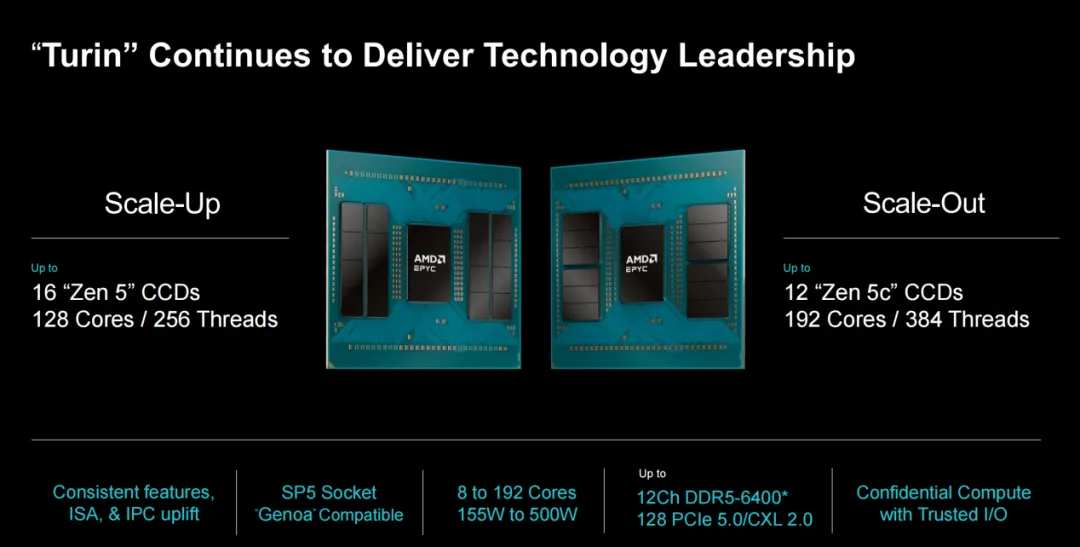

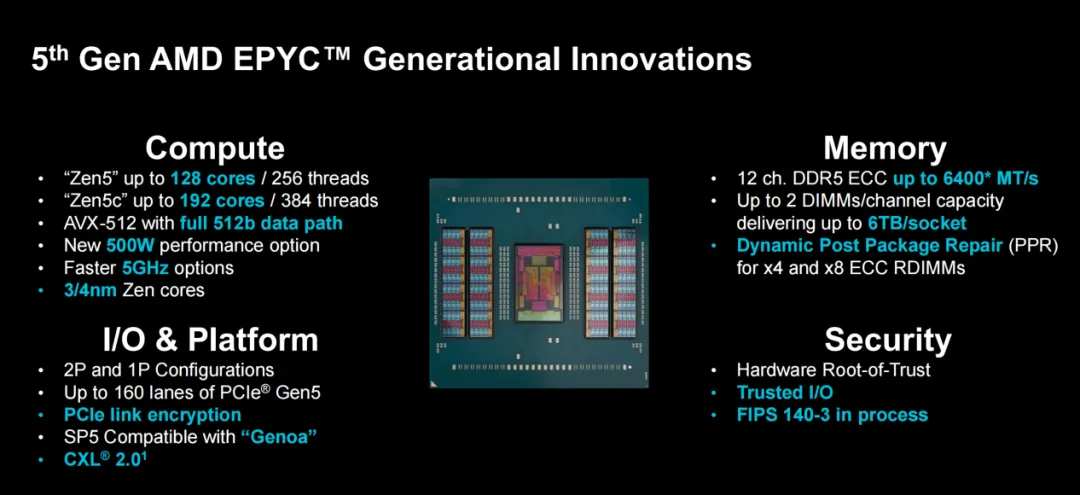

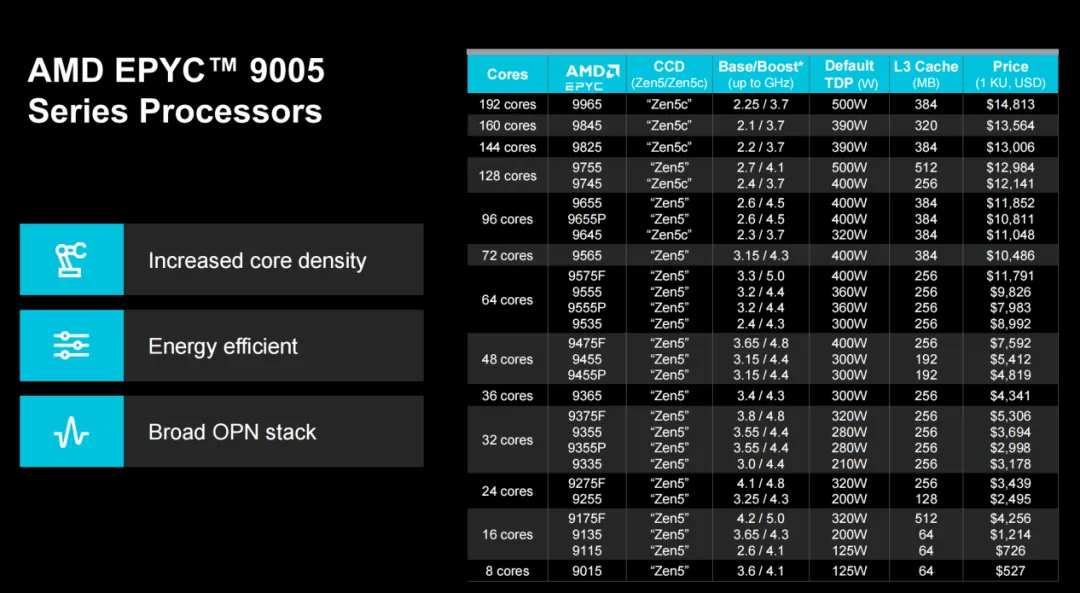

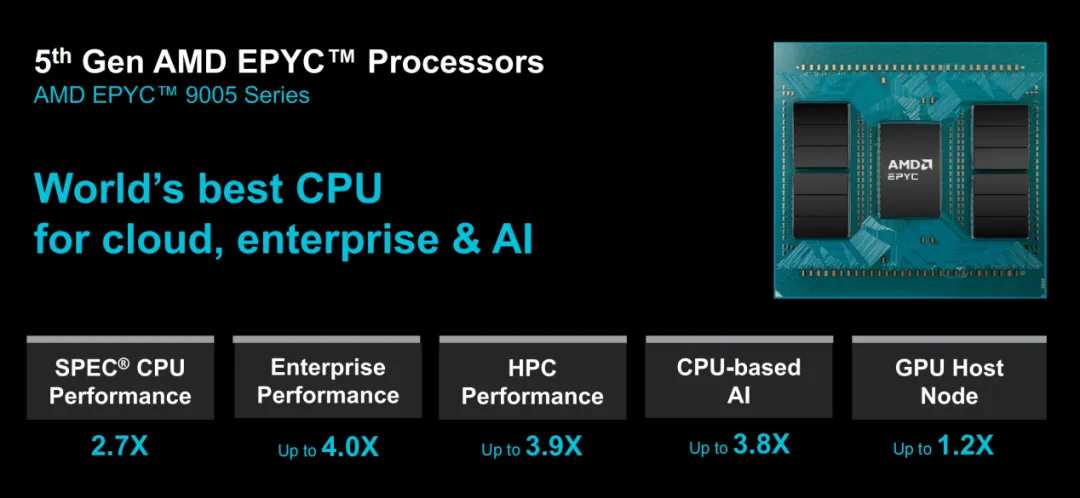

First, let's look at the latest generation of EPYC processors. As shown in the diagram below, AMD's Zen 5-based EPYC server processor is codenamed 'Turin', is manufactured on TSMC's 3nm/4nm process technology, and reaches a maximum frequency of 5 GHz. Specifically, Turin comes in two versions: one equipped with Zen 5 cores (up to 128 cores, 256 threads), and another equipped with Zen 5c cores.

In particular, the version with density-optimized Zen 5c cores offers up to 192 cores and 384 threads. It combines 12 compute chiplets manufactured on 3nm technology with a single central 6nm I/O die (IOD), for 13 chiplets in total. Models with the standard full-performance Zen 5 cores combine 16 compute chiplets on the N4P process node with a central 6nm IOD, for 17 chiplets in total.

In terms of basic memory and I/O, this series offers 12 DDR5 memory channels and up to 160 PCIe 5.0 lanes. Building on these two core types, AMD designed multiple SKUs for the Turin series to meet the requirements of different scenarios.

According to data provided by AMD, the fifth-generation EPYC excels in several respects. For example, the Zen 5 core's IPC has increased by 17% in enterprise and cloud applications and by 37% in HPC and AI applications. The fifth-generation EPYC also stands out in other areas, such as world-class SPEC CPU 2017 integer throughput, leading single-core performance, and outstanding workload performance.

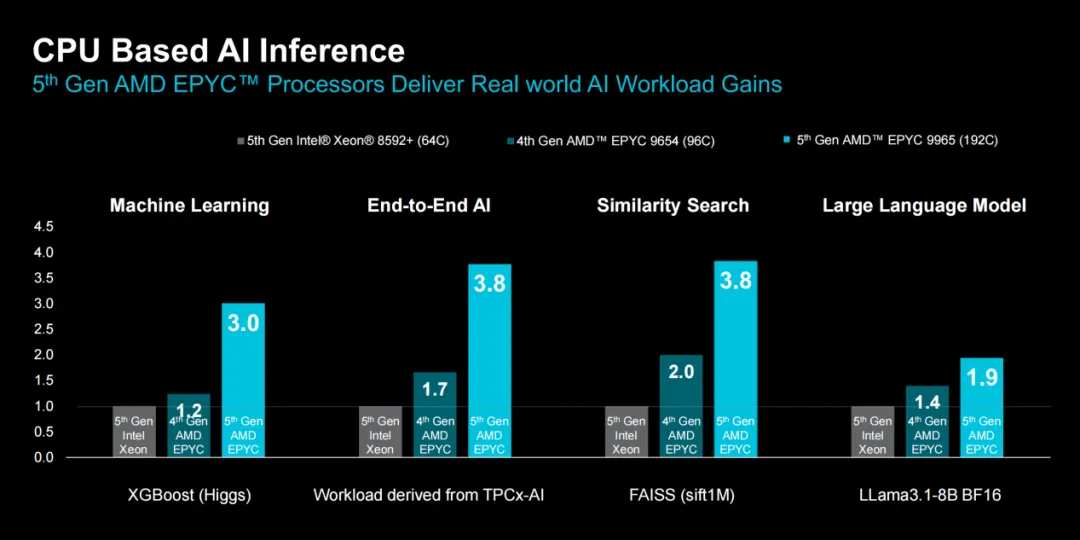

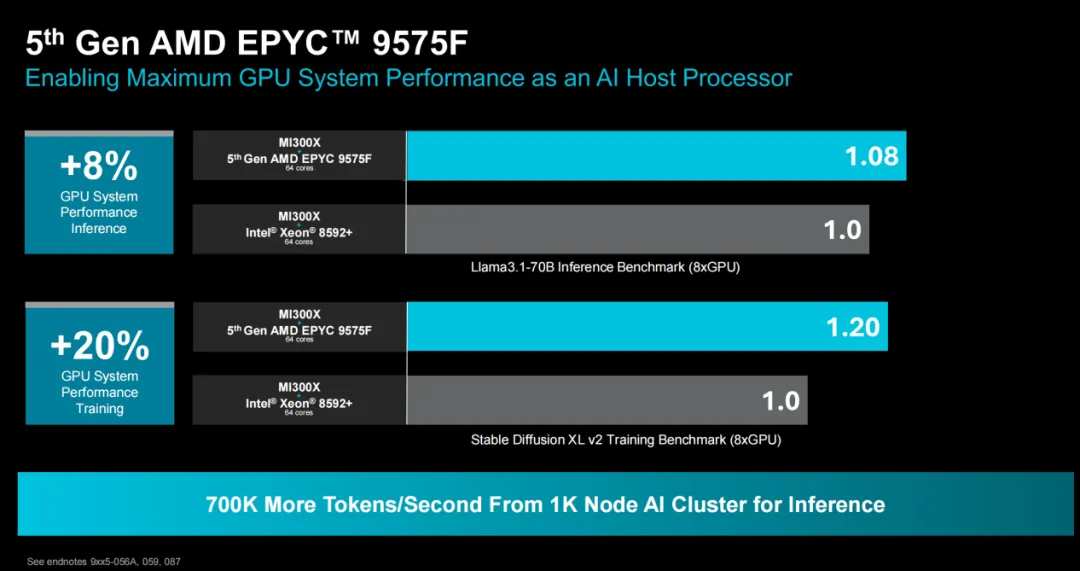

When serving as an AI host processor, the 5th Gen AMD EPYC 9575F CPU brings noticeable improvements to GPU systems.

AMD states that with the optimized CPU + GPU solution, the fifth-generation EPYC can power traditional computing, hybrid AI, and large-scale AI. After years of development, this CPU series has also become a leader in secure computing. Importantly, thanks to its x86 architecture and mature ecosystem, this processor series lets developers migrate easily from the Intel platform, modernize data centers, and increase capacity to meet customer computing needs.

On the strength of this performance, AMD positions the fifth-generation EPYC processor as the world's best CPU for cloud, enterprise, and AI.

Next, moving on to the Ryzen AI CPU, this is another focal point for AMD in recent years, and it is also a product of the rise of GenAI.

According to IDC's report, with the push from chip manufacturers and ODMs, 2024 has become the first year of AI PC development. Although AI PCs account for only 3% of the entire PC market, their momentum is unstoppable: shipments in 2028 are estimated to reach 60 times this year's volume. AMD's Ryzen AI 300 series CPU is designed for this market.
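
To put the 60-times estimate in perspective, it can be converted into an implied compound annual growth rate. The sketch below is a back-of-the-envelope calculation, assuming the base year is 2024 and growth compounds over four annual steps to 2028; the function name is illustrative, and the result is only as reliable as the quoted multiple.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Constant yearly growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1 / years) - 1


# 60x over 2024 -> 2028, assuming four compounding periods.
growth = implied_annual_growth(60, 2028 - 2024)

if __name__ == "__main__":
    print(f"Implied annual shipment growth: {growth:.0%}")
```

Under these assumptions, shipments would have to nearly triple every year, growing by roughly 180% annually, which shows how aggressive the forecast is.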

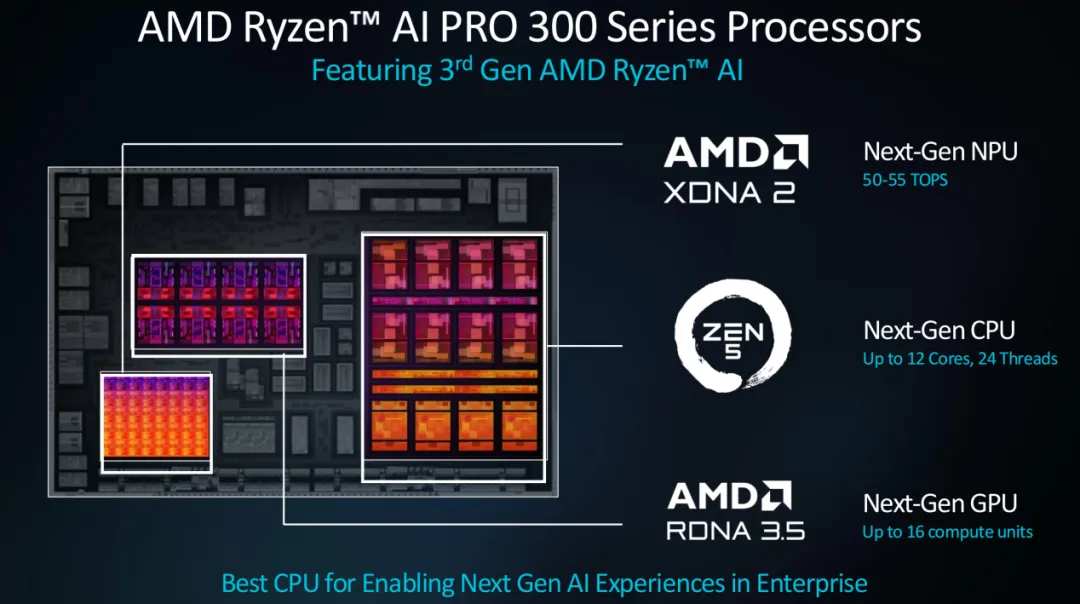

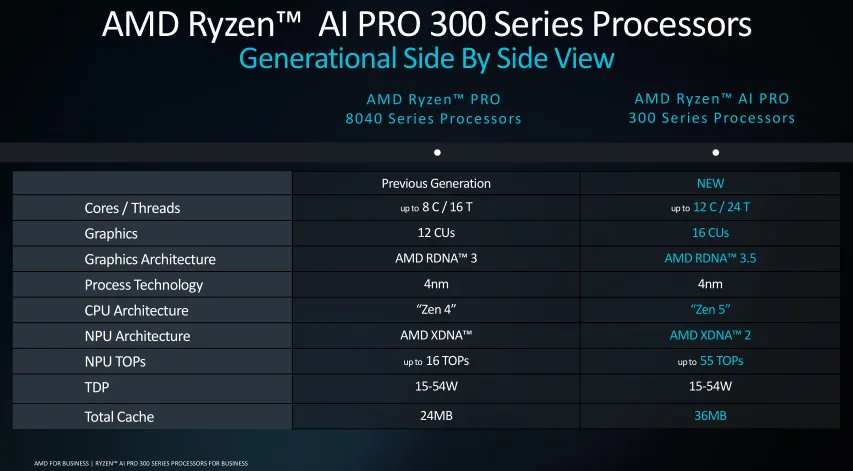

The newly released Ryzen AI PRO 300 series is AMD's latest product for this market.

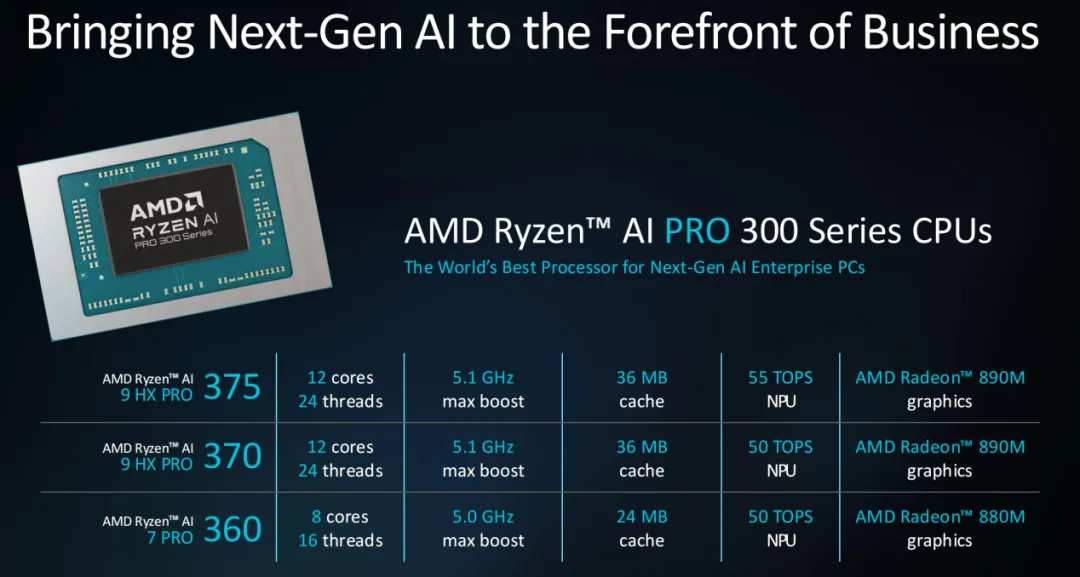

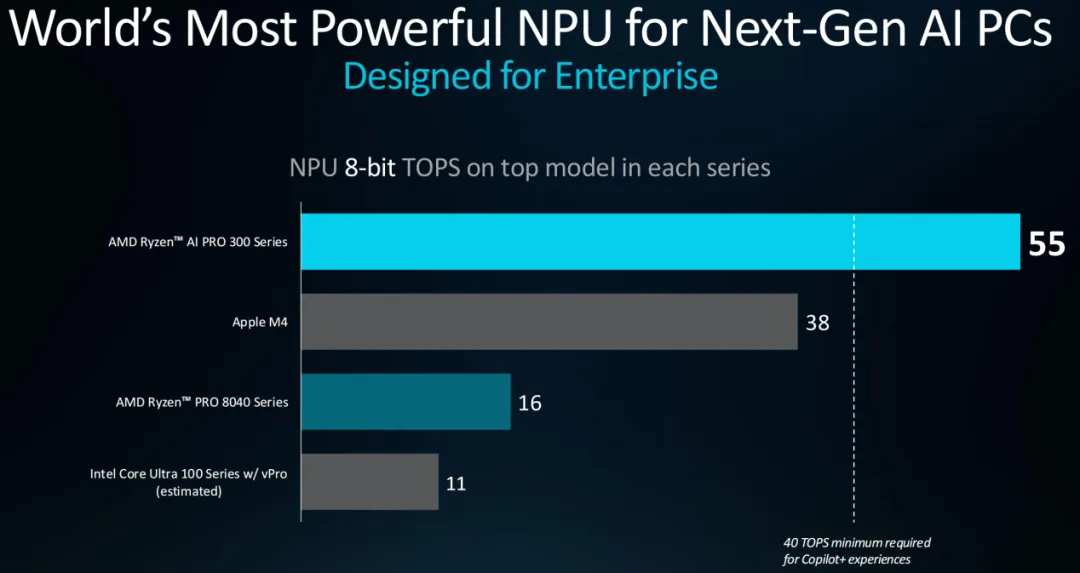

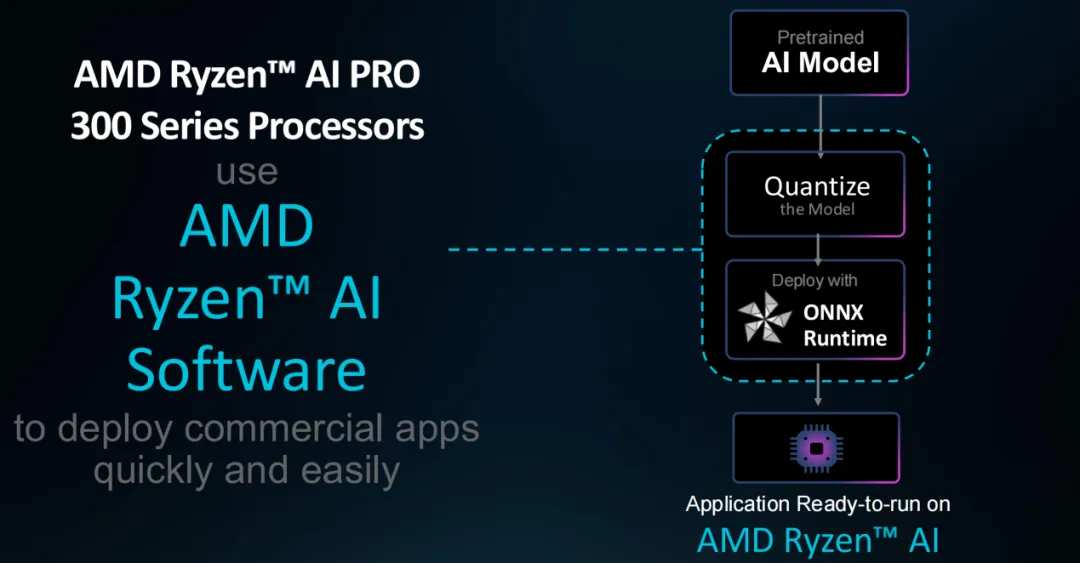

As shown in the figure, this series of processors combines the latest-generation Zen 5 CPU, RDNA 3.5 GPU, and XDNA NPU. The CPU has up to 12 cores and 24 threads, the GPU has 16 compute units, and the NPU delivers 50 to 55 TOPS of computing power.

It is reported that the new series of processors have shown significant improvements compared to the previous generation. In order to meet the needs of multiple application scenarios, AMD Ryzen AI PRO 300 provides three SKUs to choose from.

With the support of these leading CPUs, GPUs, and NPUs, the Ryzen AI PRO 300 series outperforms similar products from competitors across the board. For example, compared to the Intel Core Ultra 7 w/ vPro 165U, the CPU performance of the AMD Ryzen AI 7 PRO 360 is 30% ahead; compared to the Intel Core Ultra 7 165H, the CPU performance of Ryzen AI 9 HX PRO 375 is even 40% ahead.

In terms of NPU, the Ryzen AI PRO 300 series is far ahead of its competitors, which lets it handle AI tasks with ease. To make things easier for developers, AMD has also joined forces with software and ecosystem partners to speed up the adoption of AI PCs.

The network, an indispensable component.

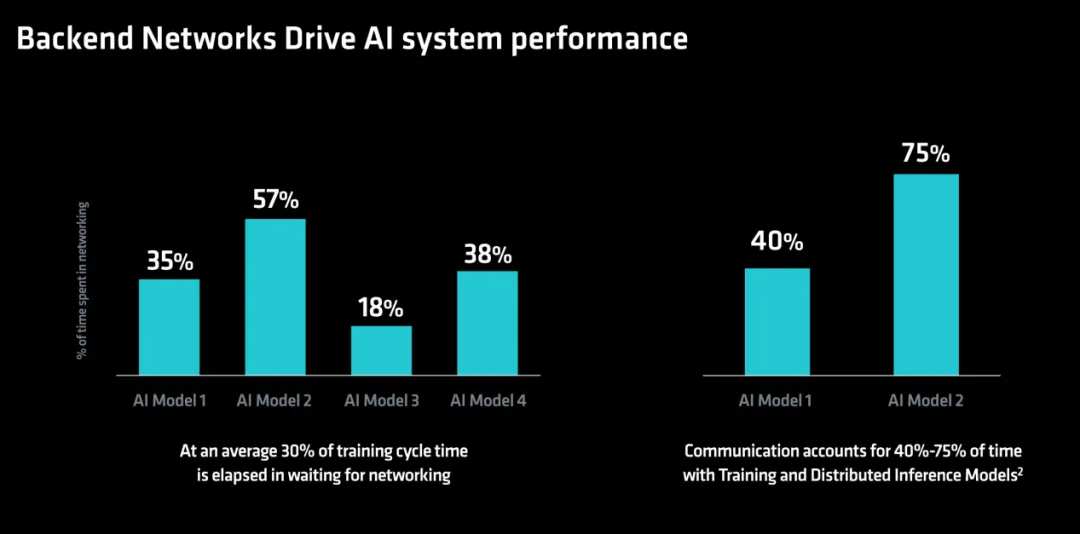

When we talk about AI server systems, we usually focus on the GPU or CPU, or even storage and power consumption. For current AI systems, however, the network is a critical component that cannot be ignored.

At the chip level, with Moore's Law slowing and reticle size limiting die area, it is becoming increasingly difficult to put more computing power on a single chip. This is why AMD is vigorously promoting chiplets, and connecting these chiplets requires better interconnects. At the system level, because the computing power of a single server rack is always limited, how to connect more nodes in a datacenter into a cluster has become a major concern for the entire industry. At the same time, as systems have to process more and more data, networking has become more important than ever.

According to AMD, with the growth of artificial intelligence applications and the use of GPUs in servers, the traditional datacenter network of Ethernet switches and server network interface cards (NICs) has become the 'front-end network'. This network connects the front-end compute and storage servers, which typically handle data ingestion and let many users and devices access AI services. It carries two types of traffic: north-south (NS) traffic to and from the outside world (the internet or other data centers), and east-west (EW) traffic between endpoints within the same data center network. The two have different requirements.
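
The north-south versus east-west distinction comes down to whether both ends of a flow sit inside the same data center network. The sketch below illustrates that rule with Python's standard `ipaddress` module. The `10.0.0.0/8` prefix and the function names are hypothetical placeholders, and a real deployment would classify traffic from its own address plan or switch telemetry.

```python
import ipaddress

# Hypothetical address plan: everything inside this prefix is treated as
# part of the same data center network.
DATACENTER_PREFIXES = [ipaddress.ip_network("10.0.0.0/8")]


def is_internal(address: str) -> bool:
    """True if the address falls inside one of the data center prefixes."""
    ip = ipaddress.ip_address(address)
    return any(ip in prefix for prefix in DATACENTER_PREFIXES)


def classify_flow(src: str, dst: str) -> str:
    """East-west if both endpoints are internal, otherwise north-south."""
    if is_internal(src) and is_internal(dst):
        return "east-west"
    return "north-south"


if __name__ == "__main__":
    print(classify_flow("10.1.2.3", "10.9.8.7"))     # server to server
    print(classify_flow("10.1.2.3", "203.0.113.5"))  # server to outside world
```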

At the same time, a new network known as the 'back-end network' has emerged. Its main role is to interconnect AI nodes for distributed computing. In AMD's view, the back-end network requires high performance and low latency to support the high-speed communication needs of AI workloads. The machines involved are typically referred to as GPU nodes (each containing one or more CPUs and GPUs). GPU nodes have multiple network interfaces on both networks, and nowadays each GPU in a GPU node has its own RDMA NIC. Nodes are grouped to form a pod (nodes that are connected together and used for parallel GPU processing). A group of interconnected nodes that collectively provides accelerated computing for a specific task forms a cluster.
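
The node, pod, and cluster hierarchy described here can be sketched as a small data model, which makes it easy to see how quickly the number of back-end NICs grows with cluster size. All class names, field names, and the example sizes (2 pods, 4 nodes per pod, 8 GPUs per node) are hypothetical; the only rule taken from the text is one RDMA NIC per GPU.

```python
from dataclasses import dataclass, field


@dataclass
class GPUNode:
    name: str
    num_cpus: int
    num_gpus: int

    @property
    def backend_rdma_nics(self) -> int:
        # One RDMA NIC per GPU on the back-end network.
        return self.num_gpus


@dataclass
class Pod:
    name: str
    nodes: list[GPUNode] = field(default_factory=list)


@dataclass
class Cluster:
    pods: list[Pod] = field(default_factory=list)

    def total_gpus(self) -> int:
        return sum(n.num_gpus for p in self.pods for n in p.nodes)

    def total_backend_nics(self) -> int:
        return sum(n.backend_rdma_nics for p in self.pods for n in p.nodes)


def build_example_cluster(pods: int = 2, nodes_per_pod: int = 4,
                          gpus_per_node: int = 8) -> Cluster:
    """Build a cluster with hypothetical, uniform pod and node sizes."""
    return Cluster(pods=[
        Pod(name=f"pod{p}", nodes=[
            GPUNode(name=f"pod{p}-node{n}", num_cpus=2, num_gpus=gpus_per_node)
            for n in range(nodes_per_pod)
        ])
        for p in range(pods)
    ])


if __name__ == "__main__":
    cluster = build_example_cluster()
    print(cluster.total_gpus(), cluster.total_backend_nics())
```

Even this small example needs 64 back-end NICs for 64 GPUs, which is why the cost and scalability of the back-end fabric matter so much.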

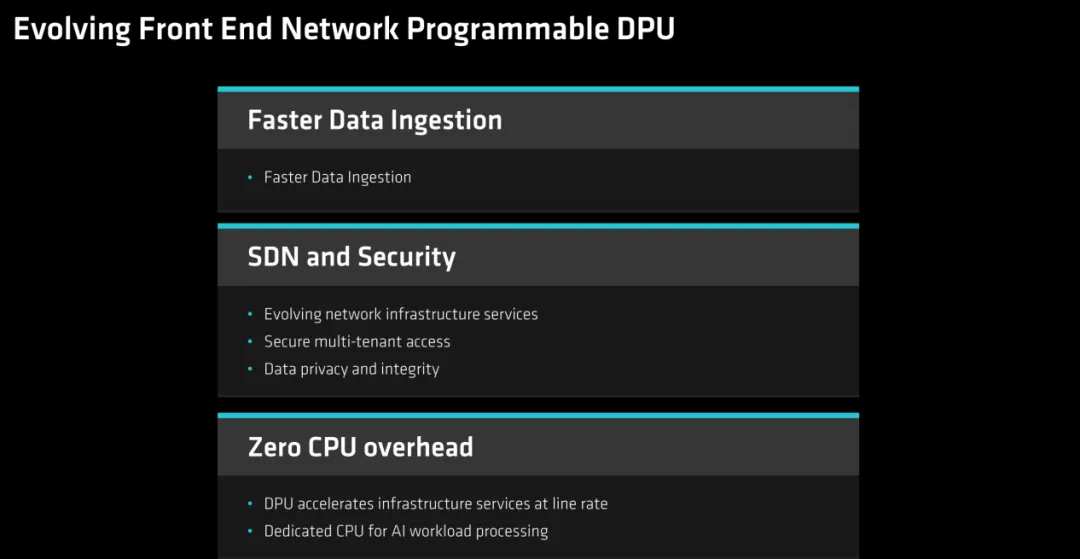

Different manufacturers are adopting different solutions to address these issues. AMD believes that DPU and Ethernet will be one solution.

Based on these considerations, AMD first acquired the datacenter optimization startup Pensando for approximately $1.9 billion. The company's products include programmable data processing units (DPUs), which manage how workloads move through the hardware infrastructure and take as much work as possible off the CPUs to improve performance. At the same time, AMD is also helping to drive the development of Ultra Ethernet.

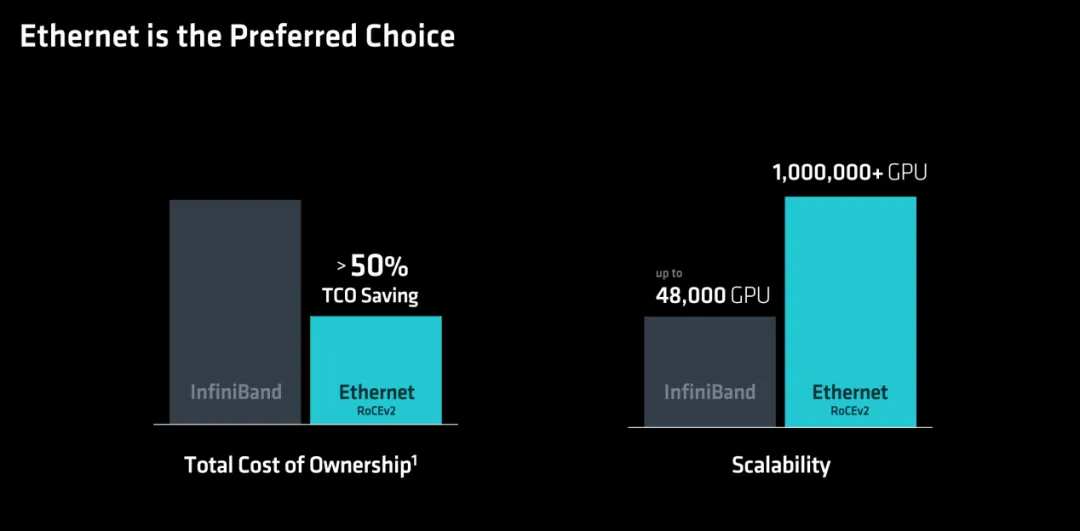

"For the back-end network, Ultra Ethernet is the preferred choice in terms of cost and scalability," AMD emphasized.

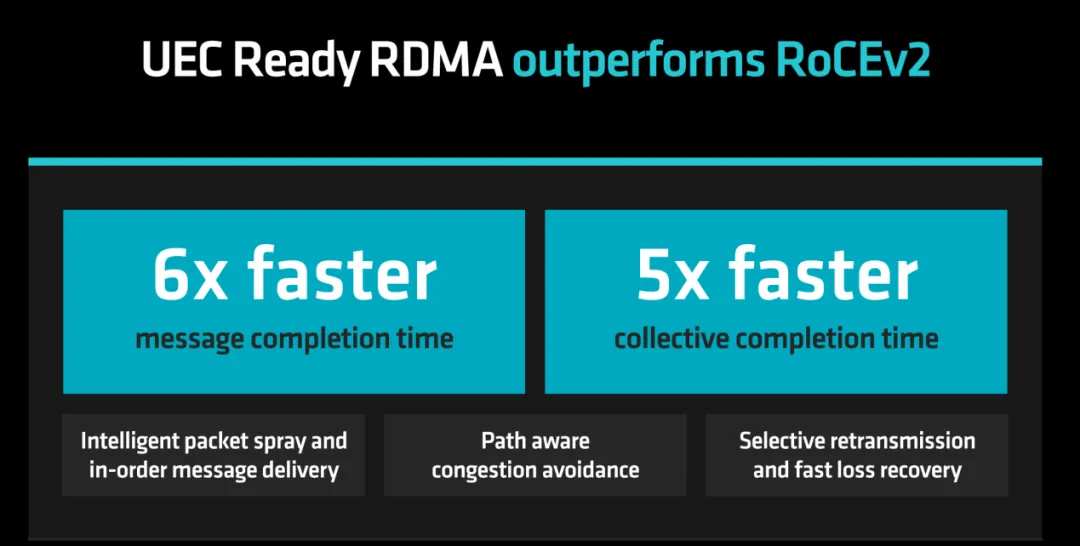

With AMD and others promoting it through the Ultra Ethernet Consortium (UEC), Ultra Ethernet's protocols perform very well. At today's summit, AMD also announced its third-generation P4 engine along with the Pensando Salina 400 and Pensando Pollara 400.

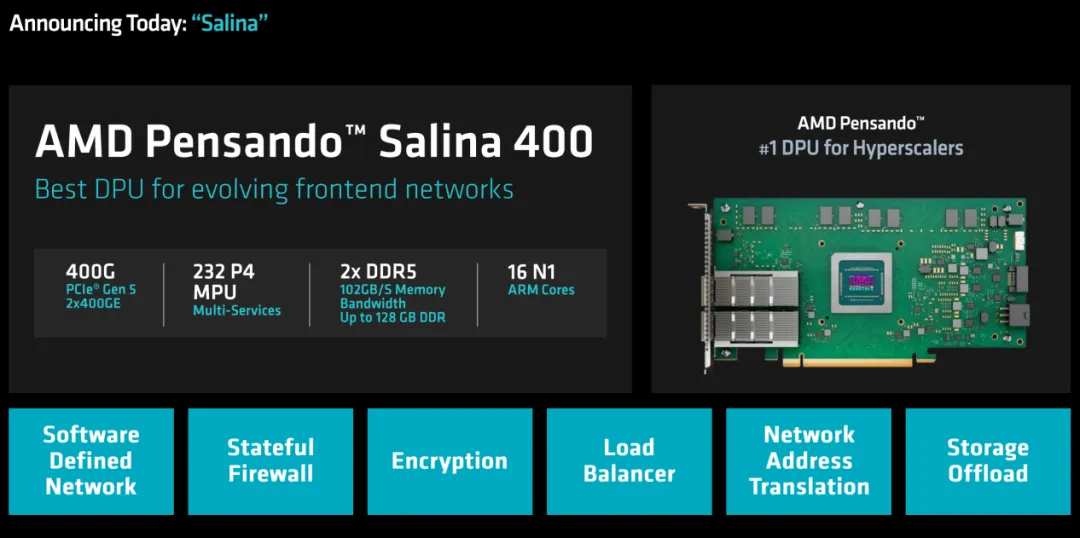

According to reports, the Pensando Salina 400 is a DPU for the front-end network and a third-generation product that AMD describes as the highest-performance and most programmable DPU in the world. Compared to the previous generation, it doubles performance, bandwidth, and scale. The DPU also supports 400G throughput for fast data transfer rates, making the AMD Pensando Salina DPU a key component of AI front-end network clusters that optimizes performance, efficiency, security, and scalability for data-driven AI applications.

As for the Pensando Pollara 400, which is powered by the AMD P4 programmable engine, it is the industry's first UEC-ready AI NIC. It supports next-generation RDMA software and is backed by an open networking ecosystem. In AMD's view, the new Pensando Pollara 400 is crucial for providing leading performance, scalability, and efficiency in accelerator-to-accelerator communication in the back-end network.

With these leading products, AMD can also claim a share of the huge networking market. AMD also revealed that the Pensando Salina DPU and Pensando Pollara 400 will sample with customers in the fourth quarter of 2024 and are expected to launch in the first half of 2025.

In conclusion,

Since Lisa Su took over as CEO, AMD's market cap and revenue have grown many times over, making the company one of the few all-around players in the AI chip market. On the one hand, this is due to the strategic planning of the management team led by Lisa Su; on the other hand, AMD's team has been able to execute the company's strategy firmly, which is also key to AMD's current success.

Now, as LLMs grow in scale, building clusters with more powerful CPUs and GPUs has become a goal shared across the LLM industry. With abundant computing power, a full networking product line, and extensive investment in the software ecosystem, AMD has become a pivotal player in the computing market.

As Lisa Su said on social media: "While the past 10 years have been amazing, the best is yet to come."

Editor/Rocky
