South Korean tech giant Samsung Electronics said on Tuesday that it expects third-quarter profit to come in below expectations. In its earnings guidance, the world's largest memory chipmaker said it expects operating profit of around 9.1 trillion Korean won, a significant increase from 2.43 trillion won a year earlier but below analysts' expectations of 11.456 trillion won (approximately $7.7 billion).

According to the Financial Intelligence APP, Samsung Electronics' latest guidance shows third-quarter profit falling short of the market's expectations. In its preliminary earnings release on Tuesday, the company estimated operating profit for the quarter ended September at approximately 9.1 trillion Korean won, up 274% from 2.43 trillion won a year earlier. That figure, however, fell short of the consensus of analysts compiled by LSEG. Coming at a time when South Korean chip exports have surged and chip inventories have dropped sharply on the back of the global AI boom, the forecast disappointed both analysts and chip stock investors.

Analysts polled by LSEG had expected Samsung Electronics' operating profit for the quarter ended September 30 to be approximately 11.456 trillion Korean won (around $7.7 billion). Because Samsung has gone several quarters without the significant profit growth in HBM, data center DRAM, and NAND that rivals SK Hynix and Micron have delivered, some analysts doubt whether Samsung has kept pace with this unprecedented AI wave. LSEG's analyst consensus put Samsung's revenue for the quarter at approximately 81.96 trillion Korean won (approximately $61 billion).
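As a quick check of the arithmetic in the figures above, the sketch below recomputes the year-on-year growth and the shortfall against the LSEG consensus from the won amounts quoted in this article. It is a back-of-the-envelope illustration only; the constant and function names are our own.

```python
# Figures quoted in the article, in trillion Korean won.
GUIDANCE_Q3 = 9.1         # Samsung's preliminary Q3 operating profit estimate
PRIOR_YEAR_Q3 = 2.43      # operating profit in the same quarter a year earlier
LSEG_CONSENSUS = 11.456   # LSEG analyst consensus for the quarter


def pct_change(new: float, old: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


yoy_growth = pct_change(GUIDANCE_Q3, PRIOR_YEAR_Q3)
consensus_gap = pct_change(GUIDANCE_Q3, LSEG_CONSENSUS)

print(f"Year-on-year growth: {yoy_growth:.1f}%")
print(f"Versus LSEG consensus: {consensus_gap:.1f}%")
```

Run as written, it reports growth of roughly 274.5%, consistent with the 274% cited, and a gap of roughly -20.6% relative to the consensus estimate.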

Jun Young-hyun, Samsung's vice chairman and newly appointed head of its Device Solutions Division, made a rare apology after the forecast was released, saying the company is working to address significant issues with HBM and other AI chip products. Samsung said in a statement: "The performance of Samsung's memory chip business, including HBM and enterprise SSDs, declined due to 'one-off costs and significant negative impact,' including inventory adjustments by mobile customers and increased supply of conventional memory products from other Asian memory chipmakers."

South Korea's chip exports and inventory levels point to surging global memory demand.

South Korea is home to the world's two largest memory chipmakers, Samsung and SK Hynix. Samsung is the world's largest producer of memory chips, which are used in devices such as laptops and servers, and it is also the world's second-largest player in the smartphone market. In addition, Samsung is striving to become a major supplier of its latest-generation HBM3E for NVIDIA's demanding Hopper-architecture and newest Blackwell-architecture AI GPUs.

Since 2023, the AI boom sweeping through enterprises worldwide has driven a surge in demand for AI servers. Leading data center server makers such as Dell and Super Micro Computer typically use Samsung and Micron data center DDR products in their enterprise AI servers, and Samsung and Micron SSDs, one of the main applications of NAND flash, are widely used as primary storage in server systems. SK Hynix's HBM, meanwhile, is tightly integrated with NVIDIA's AI GPUs. This is a key reason behind the surge in demand for HBM, as well as for DRAM and NAND as a whole.

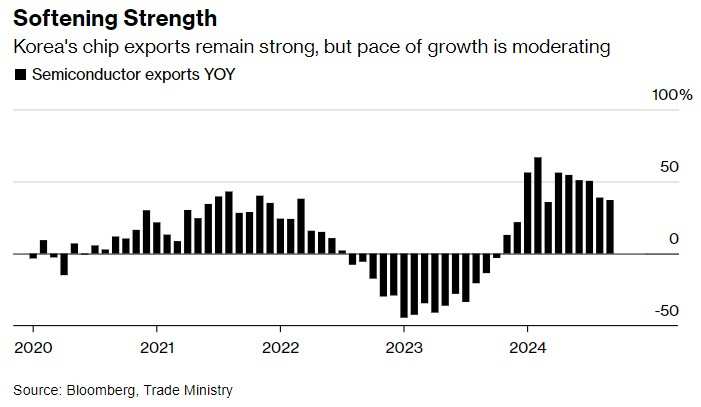

South Korea's chip export and inventory data make the strength of memory chip demand even clearer. Government data show that, although growth slowed, semiconductor exports still rose 37% year-on-year in September, the 11th consecutive month of growth and slightly below August's 38.8% increase.

In August, South Korea's chip inventories fell at the fastest pace since 2009, reflecting sustained demand for the high-performance memory chips used in artificial intelligence development. Inventories dropped 42.6% year-on-year, a steeper decline than the 34.3% drop reported for July. Production and shipments rose 10.3% and 16.1%, respectively, further evidence that the global chip industry remained buoyant through most of the third quarter.

Over the past year, the construction and expansion of data centers worldwide, driven by the surge in demand for AI computing power from applications such as ChatGPT and Meta AI, has made enterprise-grade DRAM and NAND the core drivers of earnings growth for memory chipmakers. Samsung is an important leader, but its position in enterprise markets is less dominant than in consumer electronics such as smartphones and PCs. Competition in the enterprise market is more complex than in the consumer market, with major players including Micron, SK Hynix, Western Digital, and NetApp. Micron and SK Hynix have outperformed Samsung in the enterprise SSD market, particularly in custom storage solutions for data centers.

Demand for enterprise storage and HBM is strong, but memory giant Samsung seems to have missed the opportunity.

As for HBM, which is critical for training and running large AI models and for Nvidia's H100, H200, and latest Blackwell-architecture AI GPUs, Samsung also said that shipments of its HBM3E to major customers (most likely Nvidia and AMD) had been unexpectedly delayed. This lends weight to market speculation that Samsung, the world's largest memory chipmaker, does not yet have a competitive HBM product, and that it may have missed out on the current surge in HBM demand.

HBM, paired with the core hardware supplied by AI chip leader Nvidia, namely its H100/H200/GB200 AI GPUs, is essential for running heavyweight AI applications such as ChatGPT and Sora. With seemingly endless demand for Nvidia's full line of AI GPUs, Nvidia has become the world's most valuable chip company. HBM delivers data faster, which helps in developing and running large-scale artificial intelligence models.

Nvidia's H200 and its Blackwell-architecture B200/GB200 AI GPUs use the latest generation of HBM, HBM3E, produced by SK Hynix. The other major HBM3E supplier is US memory giant Micron, whose HBM3E is very likely paired with Nvidia's H200 and the newly launched high-performance B200/GB200 models.

HBM is a high-bandwidth, low-power memory technology designed for high-performance computing and graphics processing. It uses 3D stacking to place multiple DRAM dies on top of one another and connects them through fine through-silicon vias (TSVs), enabling high-speed, high-bandwidth data transfer. Stacking the dies significantly reduces the memory's footprint, lowers the energy spent moving data, and improves transfer efficiency, allowing large AI models to run more efficiently around the clock.
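To make the bandwidth point concrete: the peak bandwidth of a memory interface is roughly its width in bits times the per-pin data rate, divided by 8 to convert bits to bytes. The sketch below applies that formula under illustrative assumptions that do not come from the article: a 1,024-bit interface per HBM stack with a hypothetical 9.6 Gb/s per pin, compared against a single 64-bit DDR5-6400 channel. The function name is our own.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth in gigabytes per second.

    Each data pin moves pin_rate_gbit_s gigabits per second, so the whole
    interface moves bus_width_bits * pin_rate_gbit_s gigabits per second;
    dividing by 8 converts gigabits to gigabytes.
    """
    return bus_width_bits * pin_rate_gbit_s / 8


# Illustrative assumptions, not figures from the article or from Samsung:
hbm_stack = peak_bandwidth_gb_s(1024, 9.6)  # one wide HBM stack (assumed rate)
ddr5_channel = peak_bandwidth_gb_s(64, 6.4)  # one 64-bit DDR5-6400 channel

print(f"HBM stack:    {hbm_stack:.1f} GB/s")
print(f"DDR5 channel: {ddr5_channel:.1f} GB/s")
print(f"Ratio:        {hbm_stack / ddr5_channel:.0f}x")
```

Under these assumptions a single stack reaches about 1,228.8 GB/s versus 51.2 GB/s for the DDR5 channel. The gap comes mostly from the much wider interface that TSV stacking makes practical, not from faster individual pins.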

HBM also offers low latency and can respond quickly to data access requests. Generative AI models such as GPT-4 often need frequent access to large datasets and run heavy inference workloads, so low latency greatly improves the overall efficiency and responsiveness of AI systems. In AI infrastructure, HBM is an integral part of NVIDIA's H100/H200 AI GPU server systems, as well as the upcoming B200 and GB200 AI GPU server systems.

"Well, let's take a look at these numbers: it is indeed very disappointing," said Daniel Yoo, head of global asset allocation at Yuanta Securities in South Korea. He also noted that global demand for the conventional memory chips Samsung supplies for PCs and smartphones has not picked up significantly.

"Samsung has not aggressively captured the strongest demand for memory chips in the emerging AI infrastructure space, the way we have seen it do in the past. I think this is a major issue we are seeing," Yoo added.

"The company needs to maintain flexibility in controlling its memory supply, as a decline in the conventional DRAM market focused on personal devices may hurt Samsung more than its smaller competitors," analysts at Macquarie Securities Research wrote in a recent report. Conventional DRAM is typically used in smartphones and personal computers, while enterprise-grade DRAM is used in areas such as enterprise servers.

In September, media reports citing two people familiar with the matter said Samsung had instructed its global subsidiaries to cut staff in certain departments by about 30%. The move is intended to free up cash to support the launch of higher-performance HBM that meets the requirements of NVIDIA's flagship AI GPUs, as well as enterprise SSDs, for which demand is stronger than for consumer electronics. There have also been reports that Samsung executives have mandated overtime for memory production line employees to accelerate HBM R&D, manufacturing, and comprehensive testing of core technologies.

According to LSEG data, Samsung Electronics shares listed on the Korea Exchange have fallen 22% year-to-date. The stock fell 0.98% after the guidance was released. The company is set to report detailed third-quarter results later this month.