Ricoh Co., Ltd. (President and CEO: Akira Oyama) has developed a high-performance Japanese large language model (LLM*). The base model is "Llama-3-Swallow-70B*1", a version of "Meta-Llama-3-70B" from Meta Platforms Inc. with improved Japanese performance. Into this base model, Ricoh merged Chat Vectors*2 extracted from Meta's Instruct model and Chat Vectors*3 produced by Ricoh, applying its own merging expertise. As a result, Ricoh has added a high-performance model comparable to OpenAI's GPT-4 to the lineup of LLMs it develops and provides.

As generative AI spreads, demand is growing for high-performance LLMs that companies can use in their operations. However, additional training of an LLM is costly and time-consuming. In response, "Model Merge*5", an efficient development method that combines multiple models to create a higher-performance model, is gaining attention.
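As a rough illustration of the kind of weight arithmetic a Chat Vector merge involves, the following minimal sketch uses toy values. It assumes the commonly used definition of a chat vector (instruction-tuned weights minus the weights of the base model they were tuned from). The function names, parameter names, and merge ratios are hypothetical; Ricoh's actual procedure and ratios are not described in this release.

```python
from typing import Dict, List

# Toy stand-in for model weights: parameter name -> flattened values.
Weights = Dict[str, List[float]]


def chat_vector(instruct: Weights, base: Weights) -> Weights:
    """Instruction-tuned weights minus the weights of the base model they came from."""
    return {
        name: [i - b for i, b in zip(instruct[name], base[name])]
        for name in base
    }


def merge(target_base: Weights, vectors: List[Weights], ratios: List[float]) -> Weights:
    """Add a weighted sum of chat vectors to another base model's weights."""
    merged = {name: list(values) for name, values in target_base.items()}
    for vector, ratio in zip(vectors, ratios):
        for name, delta in vector.items():
            merged[name] = [m + ratio * d for m, d in zip(merged[name], delta)]
    return merged


# Hypothetical toy weights (real models hold billions of values per checkpoint).
original_base = {"layer.weight": [1.0, 2.0]}      # stands in for Meta-Llama-3-70B
original_instruct = {"layer.weight": [1.5, 1.0]}  # stands in for its Instruct model
japanese_base = {"layer.weight": [2.0, 2.0]}      # stands in for Llama-3-Swallow-70B
in_house_vector = {"layer.weight": [0.5, 0.5]}    # stands in for a separately produced chat vector

extracted_vector = chat_vector(original_instruct, original_base)
# The ratios below are illustrative assumptions, not published values.
merged = merge(japanese_base, [extracted_vector, in_house_vector], ratios=[1.0, 0.5])
print(merged)
```

Because the merge is plain addition and subtraction of existing weights, it needs no gradient updates, which is why this approach avoids the cost of additional training.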

Drawing on its expertise in model merging and its knowledge of LLM development, Ricoh has developed a new LLM. This technology helps streamline the development of private LLMs unique to individual companies and of high-performance LLMs for specific operations.

Ricoh will continue to promote research and development of diverse and efficient methods and technologies, not only to develop its own LLMs but also to provide optimal LLMs tailored to customers' applications and environments at low cost and with short delivery times.

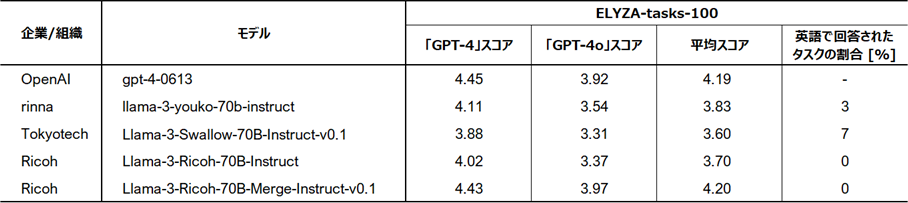

Evaluation Results*6 (ELYZA-tasks-100)

On "ELYZA-tasks-100", a representative Japanese benchmark that includes complex instructions and tasks, the LLM that Ricoh developed using the model merge method achieved a high score comparable to GPT-4. Furthermore, while the other LLMs compared sometimes answered in English depending on the task, Ricoh's LLM consistently responded in Japanese for all tasks, showing high stability.

Comparison Results with Other Models in Benchmark Tool (ELYZA-tasks-100) (Ricoh at the bottom)
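The observation that some models answer in English on certain tasks can be checked mechanically. The sketch below is one simple heuristic, not the method used in this evaluation (which the release does not describe): it treats a response as Japanese if it contains at least one hiragana or katakana character. The function names and sample responses are hypothetical.

```python
from typing import List


def contains_japanese_kana(text: str) -> bool:
    """True if the text has at least one hiragana or katakana character.

    Kana (U+3040 to U+30FF) is used instead of kanji because kanji alone
    cannot distinguish Japanese from Chinese text.
    """
    return any("\u3040" <= ch <= "\u30ff" for ch in text)


def japanese_response_rate(responses: List[str]) -> float:
    """Fraction of responses that look Japanese under the kana heuristic."""
    if not responses:
        return 0.0
    hits = sum(1 for response in responses if contains_japanese_kana(response))
    return hits / len(responses)


# Hypothetical model outputs for three benchmark tasks.
sample_responses = [
    "これは日本語の回答です。",
    "This answer is in English.",
    "東京タワーの高さは333メートルです。",
]
rate = japanese_response_rate(sample_responses)
print(f"{rate:.2f}")
```

A rate below 1.0 across the benchmark's tasks would flag a model that sometimes switches to English.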

Background of Ricoh's LLM development

Against the backdrop of a declining labor force and an aging population, using AI to improve productivity and create high-value working styles has become a challenge for corporate growth. To address this challenge, many companies are focusing on the practical application of AI in their operations. However, applying AI to actual operations requires training an LLM on large amounts of text data, including company-specific terminology and phrasing, to create a company's own AI model (private LLM).

Leveraging LLM development and training technology that is among the top class in Japan, Ricoh can propose various AI solutions, such as providing private LLMs for enterprises and supporting the use of internal documents through the introduction of retrieval-augmented generation (RAG).

Related News

- Ricoh has developed a large-scale language model (LLM) with 70 billion parameters that supports three languages: Japanese, English, and Chinese, and strengthens support for customers' private LLM construction.

- Developed a pre-tuned Japanese LLM with 13 billion parameters

- Developed a high-precision Japanese large language model (LLM) with 13 billion parameters

This news release is also available as a PDF file.

Ricoh has developed a high-performance Japanese LLM (70 billion parameters) equivalent to GPT-4 through Model Merge (224KB, 2 pages in total).
