A very good stress test.

Last week, a run of bearish news, including economic data and earnings figures, dragged down the US stock market, and some of it was aimed squarely at market darling Nvidia, triggering a wave of heavy selling. Nvidia fell 7% on Tuesday, rebounded nearly 13% on Wednesday, fell nearly 7% again on Thursday, and on Friday was down as much as 7% intraday. Swings this large rippled through a group of technology stocks, and the VIX volatility index jumped from 15 to 65, more than quadrupling.

Behind this shock to technology stocks is the market's growing worry about the future returns on AI at a time when valuations are already stretched. Some institutions believe that if Nvidia's results at the end of the month disappoint, the bubble could burst at any moment.
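As a quick arithmetic check, a minimal Python sketch can chain the approximate daily moves quoted above. It assumes the rounded figures (-7%, +13%, -7%) and leaves out Friday's 7% drop because that was an intraday low rather than a close; the names `daily_moves` and `net_return` are illustrative only.

```python
from math import prod

# Approximate closing moves quoted in the text (Friday's intraday drop is excluded).
daily_moves = {"Tue": -0.07, "Wed": 0.13, "Thu": -0.07}

def net_return(moves):
    """Net return from chaining daily returns: prod(1 + r) - 1."""
    return prod(1 + r for r in moves) - 1

net = net_return(daily_moves.values())
print(f"Tue-Thu net move: {net:.1%}")  # prints "Tue-Thu net move: -2.3%"

# How many times the VIX multiplied from its starting level to its peak.
vix_start, vix_peak = 15, 65
print(f"VIX peak / start: {vix_peak / vix_start:.1f}x")  # prints "VIX peak / start: 4.3x"
```

Despite roughly 27 percentage points of combined swings, the three closing moves net out to a decline of only about 2%, which is why the week was defined more by volatility than by direction.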

Amid the turmoil, can Nvidia withstand the pressure and lead the US stock market higher?

01

Nvidia has recently been hit by a string of negative news.

First, on July 30 came reports that Apple had trained its AI models on Google's TPUs rather than on Nvidia's H100s.

Just one day later, another report said that Nvidia's next-generation flagship Blackwell GPU would be pushed back from its originally scheduled Q3 release this year to Q1 next year because of design flaws that needed fixing.

Next, the US Department of Justice turned its attention to Nvidia, gathering complaints from competitors and customers. According to the complaints, Nvidia required customers to buy supporting products such as network cables and racks along with its GPUs, or else lose their priority on shipments. But once the giants adopted Nvidia's custom racks, they could no longer switch flexibly between different AI chips and were left in a passive position.

An Nvidia vice president reportedly even said, 'Whoever buys the racks gets priority on the GB200.' As a result, Microsoft and Google have had to pay not only for Nvidia's GPUs but also, heavily, for these supporting products. This may leave suppliers very uneasy.

Super Micro Computer (SMCI), one of Nvidia's server suppliers, is also feeling the strain. Its share price once doubled rapidly on surging demand for AI servers, but its bargaining power has weakened as it competes with Dell, HPE, and other vendors for Nvidia orders.

As a result, the company's revenue grew significantly last quarter, but its profit fell short of market expectations, and at the time of writing the stock was down more than 14% in pre-market trading.

In addition, the US Department of Justice is also reviewing whether Nvidia's acquisition of the startup company Run:ai involves improper conduct.

Given Nvidia's well-known dominance of the generative AI chip market, this is essentially an antitrust investigation. Although it arrived quickly and forcefully, such cases usually take several years to argue before a result is reached, and the likely outcome is that Nvidia becomes more restrained.

What worries the market most is the progress of Nvidia's next-generation chip and the impact of its delayed delivery.

Blackwell is Nvidia's next-generation chip architecture and an extension of the previous Hopper architecture; it joins two GPU dies (bare chips) into a single GPU. Compared with Hopper GPUs, a single Blackwell GPU delivers 2.5 times the training performance (FP8) and 5 times the inference performance (FP4), while keeping power consumption relatively low. That makes it hard for the technology giants pouring money into data centers to refuse.

But the latest reports suggest the technology is not yet fully mature, so the bearish rumor of a delayed Blackwell delivery has become one of the culprits behind the share-price drop.

Over the weekend, several institutions weighed in, arguing that the impact of the delay is not as bad as some have claimed. Morgan Stanley expects the B100 to slip by one quarter while GB200 delivery stays roughly on schedule. Goldman Sachs believes that once TSMC expands its capacity in Q4, Nvidia may make up for the delay caused by the Q3 design fixes.

In terms of revenue, the second half of 2024 should be the peak shipment period for the Hopper-based H series, and little Blackwell revenue was expected in Q3, so the delay should not significantly affect this year's results.

But if the impact is as severe as rumored, with delivery pushed to the middle of next year because the technical problems take more than a quarter or two to resolve, expectations for Nvidia's results in the first quarter of next year are likely to be revised.

02

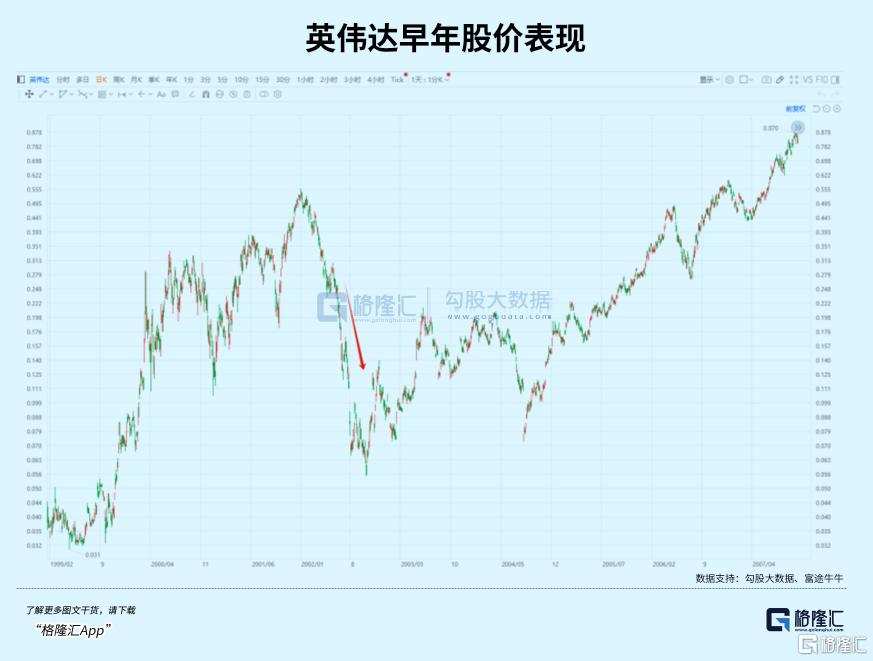

Nvidia's share price has fallen more than 20% from its July high. Combined with share sales by senior executives including Jensen Huang, selling pressure is building. Every downturn brings a wave of explanations, and the comparison most often drawn is with Cisco.

From its listing in 1990 to the peak of the internet bubble in 2000, Cisco's share price nearly doubled every year, while its results grew at about 40% a year and its gross margin stayed above 65%. Cisco, which focused on switches and routers, guarded the gateway to the internet world, a position similar to Nvidia's today as the seller of entry tickets to large AI models.

In 2000, when results at internet hardware makers such as Nortel, Juniper, and Lucent began to decline sharply, Cisco's results were barely affected. Analysts rallied behind it, arguing that Cisco's technological barriers would let it keep expanding its share. Buoyed by the rising share price, management ignored the warning signs and kept building inventory in large quantities. Two quarters later, as orders fell, Cisco's share price plunged 60%.

In Cisco's case, the collapse was driven not only by slowing downstream demand but also by eroding technological barriers, capacity that was no longer scarce, and a shift in where value sat along the internet value chain. As a result, the market is now willing to give it a valuation of only about ten times, for a market value of just over 180 billion. With a bubble narrative hanging over the sector, these are risks any high-tech company must weigh, and Nvidia's response has so far been very cautious. The short-term concerns are: 1) large customers have already stocked up on GPUs; 2) returns on investment disappoint, forcing downstream capital expenditure to be cut.

Even staunch AI believers such as Cathie Wood think that giants such as Microsoft, in their rush to lock in a ticket to the large-model elimination contest, are pulling forward their demand for Nvidia's GPUs. Without the prospect of explosive revenue growth to justify this overbuilding, a phase of oversupply may follow.

These concerns are not unfounded. A handful of giants currently account for about half of Nvidia's total revenue, and "big-customer dependency syndrome" has surfaced before in Nvidia's history.

In 2002, for example, when Nvidia was still a small player, its five largest customers accounted for 65% of its revenue, and after major customer Microsoft cancelled its order, Nvidia's results and share price quickly collapsed.

Today the situation is similar. Nvidia, built on computing power, sits at the peak of Silicon Valley's power structure, and its major customers cannot pursue their next growth engine without its chips. But if AI applications fail to make as much money as expected, some of the irrational demand may never be absorbed. Even if the big players do not cancel orders, their follow-on demand may weaken, and Nvidia's future growth outlook could be revised down.

However, with AI investment still in full swing, this risk does not yet seem worth worrying about.

Nvidia also has fallback options, such as the already launched DGX Cloud, which moves it into cloud computing services in competition with its own major downstream customers. Although DGX Cloud is hosted on other providers' cloud platforms, Nvidia's cloud services generated billions of dollars in revenue last year, complementing its chip business.

Nvidia's acquisition of Run:ai may rest on the same logic. Run:ai focuses on improving AI efficiency, helping reduce the number of GPUs needed to complete training tasks. If Nvidia integrates it into its own products, it would not only keep downstream customers from using the technology on their own but also strengthen DGX Cloud's advantages.

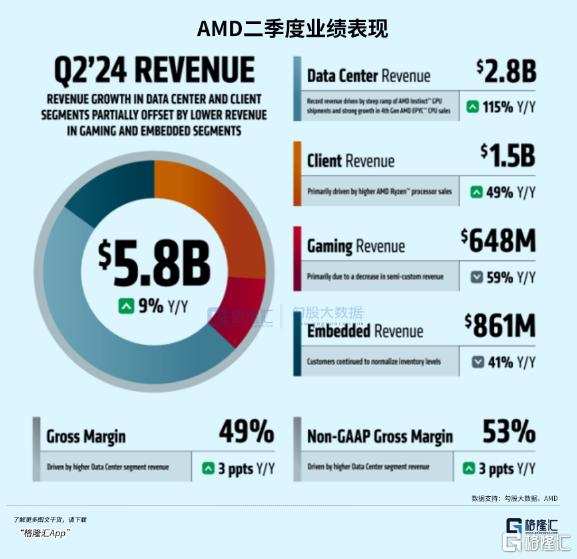

In the long run, there is a clear consensus that Nvidia will not dominate the large-scale training and inference chip market forever. On one side are competitors such as AMD, whose net profit increased 8.8 times year on year in the second quarter of this year. AMD earned more than 1 billion in a single quarter from chips that compete with Nvidia's H100, meeting downstream customers' needs at lower prices, and it will keep expanding its chip supply. On the other side, in recent years the technology giants have moved into chipmaking themselves, both to cut costs and to rein in this increasingly "arrogant" supplier.

Each time a dedicated chip with better performance or power efficiency is announced, the market treats it as a threat to Nvidia's GPUs. However, Nvidia's combined hardware and software moat of GPUs plus CUDA is very strong, and the giants' self-developed chips have not yet posed a real threat. The biggest consideration is still the cost of developing chips in-house versus buying them.

The fixed costs of chip design, R&D, and manufacturing are already hefty, and producing even a single chip design can easily cost billions of dollars. On the R&D side, the more use cases a chip serves, the more widely its R&D costs can be amortized. Nvidia's general-purpose architecture can be used across many scenarios, an advantage that large customers investing only in dedicated use cases cannot match. And when Nvidia and its customers both line up at TSMC with orders in hand, waiting for production slots, whom will TSMC prioritize given the size of each order? The answer is self-evident.
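To make the amortization point concrete, here is a minimal Python sketch with entirely hypothetical numbers: the fixed cost, per-chip cost, and volumes are placeholders chosen for illustration, not figures from Nvidia, TSMC, or any customer. It only shows that average cost per chip equals fixed cost divided by volume plus variable cost, so a design that ships across many use cases carries a smaller fixed-cost burden per unit.

```python
# Hypothetical illustration of fixed-cost amortization.
# All dollar figures and volumes are made-up placeholders, not real data.

def unit_cost(fixed_cost: float, variable_cost: float, units: int) -> float:
    """Average cost per chip: fixed cost spread over all units plus per-unit cost."""
    if units <= 0:
        raise ValueError("units must be positive")
    return fixed_cost / units + variable_cost

FIXED = 2_000_000_000  # hypothetical one-off design and R&D cost, in dollars
VARIABLE = 5_000       # hypothetical manufacturing cost per chip, in dollars

# Hypothetical volumes: a general-purpose GPU sold across many scenarios
# versus an in-house chip built for a single workload.
general = unit_cost(FIXED, VARIABLE, 4_000_000)
dedicated = unit_cost(FIXED, VARIABLE, 400_000)

print(f"general-purpose: ${general:,.0f} per chip")   # prints "$5,500 per chip"
print(f"dedicated:       ${dedicated:,.0f} per chip") # prints "$10,000 per chip"
```

With ten times the volume, the same fixed cost adds only a tenth as much to each chip, which is the scale advantage a general-purpose architecture holds over a chip built for one in-house workload.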

As a result, Nvidia, which sticks to a consistent architecture, can roll out a new chip every 18 months, while customers' own path from chip design to testing and deployment drags on, pushing them to abandon their bolder plans. Even Google, whose TPUs Apple chose for training, is reluctant to stop buying GPUs.

So even in the face of a joint siege, such as the 'anti-CUDA' alliance formed by major customers last year to build a compilation toolchain compatible with CUDA, Nvidia has little to fear: by the time they get close to CUDA, Nvidia will already have released its next-generation GPU.

03

Jensen Huang once said: 'Run for food, or run so as not to become someone else's food. In either case, keep running.'

Some say Nvidia is as arrogant as Cisco was back then and that others urgently need to join forces against it, yet Jensen Huang is the one who keeps warning that 'the company is about to go bankrupt.'

To avoid ending up like Cisco, Nvidia has taken measures such as controlling GPU supply, allocating graphics cards on its own terms, and building its own cloud, all designed to guard against downstream customers defecting at any time. The Blackwell delay may even make the market realize how much it needs Nvidia's chips, but it is also a very good stress test for Nvidia. If its share price keeps falling along with the market, investors may stumble onto a very rare gold mine.

The music may stop at any time, but the dance goes on. Give Nvidia more time.

Editor/new