Source: Tencent Technology

1. Huang Renxun emphasized that generative AI is growing at an exponential rate and that businesses need to adapt and utilize this technology quickly, rather than standing by and falling behind the pace of technological development.

2. Huang Renxun believes that open and closed source AI models will coexist and that companies need to leverage their respective strengths to promote the development and application of AI technology.

3. Huang Renxun argued that AI development must take energy efficiency and sustainability into account: optimizing the use of computing resources and improving the inference and generation capabilities of AI models can cut energy consumption and yield more eco-friendly intelligent solutions.

4. With the constant accumulation of data and the continuous advancement of intelligent technology, customer service will become a key area for companies to achieve intelligent transformation.

According to foreign media reports, at the recent 2024 Databricks Data + AI Summit, NVIDIA founder and CEO Huang Renxun had a fascinating conversation with Ali Ghodsi, co-founder and CEO of Databricks. Their dialogue highlighted the importance and development trends of artificial intelligence and data processing technology in modern enterprises, and emphasized the key role of technological innovation, data processing capabilities, and energy efficiency in driving enterprise transformation and industry development.

In the conversation, Huang Renxun looked ahead to the future of data processing and generative AI. He pointed out that each company's business data is like an untapped gold mine: it holds tremendous value, but extracting deep insight and intelligence from it has always been a daunting task.

Huang Renxun also talked about how open source models like Llama and DBRX are driving companies to transform into AI companies, activating a global AI movement and promoting technological development and corporate innovation. Through the collaboration between NVIDIA and Databricks, the two companies will combine their respective strengths in accelerated computing and generative AI to bring unprecedented benefits to users.

The following is the transcript of the conversation:

Moderator: I am very excited to introduce our next guest, a man who needs no introduction, the one and only global rock star CEO, NVIDIA CEO Huang Renxun. Please come to the stage. Thank you very much for coming! I want to start with NVIDIA's remarkable performance, with a market capitalization of as much as 3 trillion US dollars. Did you ever think five years ago that the world would evolve so rapidly and look the way it does today?

Huang Renxun: Absolutely! I expected that from the beginning.

Moderator: That's really amazing. Can you offer some advice to the CEOs in the audience on how to achieve their goals?

Huang Renxun: Whatever you decide to do, my advice is not to get involved in the development of graphics processors (GPUs).

Moderator: I will tell the team that we are not going to get involved in that field. We spent a lot of time today discussing the profound significance of data intelligence. Enterprises have vast amounts of proprietary data that are critical for building customized artificial intelligence models. Mining and applying this data in depth is crucial to us. Have you also noticed this industry trend? Do you think we should increase our investment in this area? Have you gathered any feedback or insights from the industry on this issue?

Huang Renxun: Every company is like a gold mine with abundant business data. If your company offers a range of services or products, and customers are satisfied with them and give valuable feedback, you have accumulated a large amount of data. This data may involve customer information, market trends, or supply chain management. We have been collecting this data for years and now hold a huge amount of it, but only now have we begun to extract valuable insights, and even higher-level intelligence, from it.

Right now, we are passionate about this. We use this data in chip design, defect databases, the creation of new products and services, and supply chain management. For the first time, our engineering process is built on processing and refining data, building learning models, deploying those models, and connecting them to a flywheel that collects more data.

This is how our company is growing into one of the world's largest. It is, of course, due to the extensive use of artificial intelligence technology in our company, which has helped us accomplish many remarkable things. I believe every company is going through such changes, so I think we are in an extraordinary era. The starting point of this era is data: its accumulation and its effective use.

The harmonious coexistence of open source and closed source

Moderator: This is truly amazing and very much appreciated. At present, the debate about closed-source and open-source models is gradually heating up. Can open-source models catch up? Can the two coexist? Will the field eventually be dominated by a single closed-source giant? What is your view of the entire open-source ecosystem? What role does it play in the development of large language models? And how will it develop in the future?

Author: Li Haidan

On July 30th Beijing Time, many of the latest developments in rendering, simulation and generative AI were showcased at SIGGRAPH 2024 in Denver, USA. Last year's SIGGRAPH saw Nvidia launch GH200, L40S graphics cards, ChatUSD, etc. This year's protagonist is Nvidia's new trump card in the generative AI era - the upgraded Nvidia NIM, which applies generative AI to USD (Universal Scene Description) through NIM, expanding the possibilities of AI in the 3D world.

On July 30th Beijing Time, NVIDIA (NVDA.US) showcased many of its latest developments in rendering, simulation, and generative AI at SIGGRAPH 2024 in Denver, USA.

Last year, Nvidia launched GH200, L40S, ChatUSD and other products at SIGGRAPH. This year, the protagonist is the upgraded Nvidia NIM, Nvidia's new trump card in the era of generative AI. Through NIM, Nvidia applies generative AI to USD, expanding the possibilities of AI in the 3D world.

01 Nvidia NIM Upgrade: both a blessing and a challenge

Nvidia announced that Nvidia NIM has been further optimized and now standardizes the deployment of AI models. NIM is a key part of Nvidia's AI strategy. Huang Renxun has praised NIM many times for its innovations, calling it "AI-in-a-Box, essentially artificial intelligence in a box."

This upgrade undoubtedly consolidates Nvidia's leading position in the AI field and becomes an important part of its technical moat.

CUDA has always been considered a key factor in Nvidia's leading position in the GPU field. With the support of CUDA, GPUs have evolved from single-purpose graphics processors into general-purpose parallel computing devices, making AI development possible. However, although Nvidia's software ecosystem is very rich, for traditional industries that lack basic AI development capabilities, these scattered systems are still too complex and difficult to master.

To solve this problem, at the GTC conference in March of this year, Nvidia launched NIM (Nvidia Inference Microservices), a set of cloud-native microservices that integrates the software Nvidia has developed over the past few years to simplify and accelerate the deployment of AI applications. NIM packages models as optimized "containers" that can be deployed in the cloud, in data centers, or on workstations, allowing developers to build generative AI applications such as copilots and chatbots in a few minutes.
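To make the "container plus standardized API" idea concrete, here is a minimal sketch of how an application might talk to a deployed NIM language model. It assumes a NIM container is already running locally and exposes an OpenAI-compatible chat completions endpoint. The base URL, port, and model name below are illustrative assumptions, not values taken from this article, and would need to match the actual deployment.

```python
import json
import urllib.request

# Assumptions for illustration only: a NIM LLM container reachable on
# localhost:8000 with an OpenAI-compatible API, serving this model name.
NIM_BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt, base_url=NIM_BASE_URL, model=MODEL_NAME, max_tokens=128):
    """Build (but do not send) a chat completion request for the endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the text of the first reply.

    Requires a running service at NIM_BASE_URL; raises URLError otherwise.
    """
    with urllib.request.urlopen(build_chat_request(prompt), timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a service running, `ask("Summarize our Q2 support tickets in one sentence.")` would return the model's reply as a string. The point of the sketch is that the application code only deals with an HTTP request, while model optimization and serving stay inside the container.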

To date, the NIM ecosystem that Nvidia has built offers a range of pre-trained AI models. Nvidia announced that NIM helps developers accelerate application development and deployment across multiple fields, and provides domain-specific AI models for areas such as understanding, digital humans, 3D development, robotics, and digital biology.

In the field of understanding, NIM can use Llama 3.1 and NeMo Retriever to enhance the processing of text data. For digital humans, it provides models such as Parakeet ASR and FastPitch HiFiGAN, which support automatic speech recognition and high-fidelity speech synthesis, providing powerful tools for building virtual assistants and digital humans.

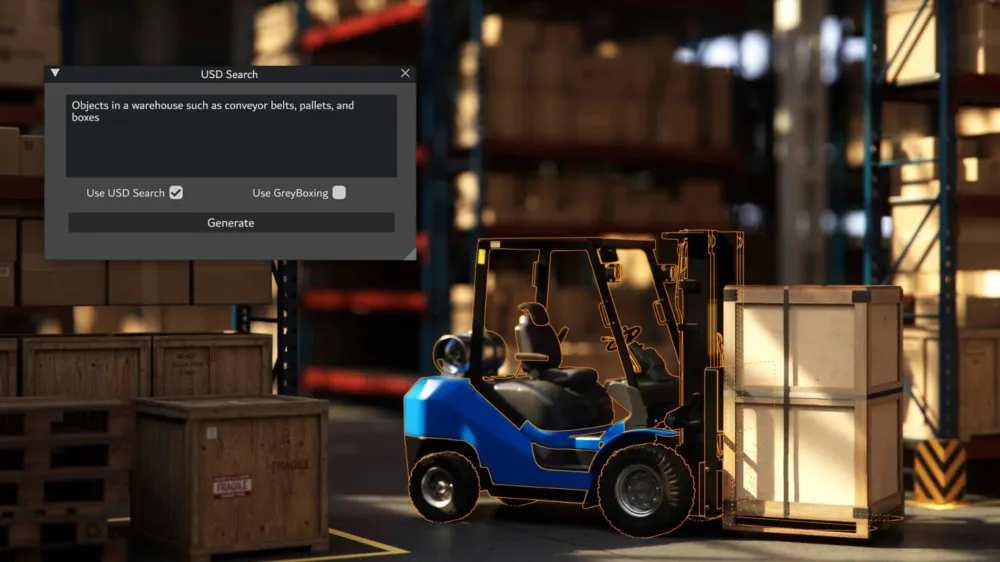

In 3D development, models such as USD Code and USD Search simplify the creation and operation of 3D scenes, helping developers to efficiently build digital twins and virtual worlds.

For embodied robotics, Nvidia has launched the MimicGen and Robocasa models, which accelerate robotics research and applications by generating synthetic motion data and simulation environments. MimicGen NIM can generate synthetic motion data from teleoperation data recorded by spatial computing devices such as Apple Vision Pro. Robocasa NIM can generate robot tasks and simulation-ready environments in OpenUSD, a general-purpose framework for developing and collaborating in 3D worlds.

In digital biology, models such as DiffDock and ESMFold provide advanced solutions for drug discovery and protein folding prediction, advancing biomedical research.

In addition, Nvidia announced that the Hugging Face inference-as-a-service platform is also supported by Nvidia NIM and runs in the cloud.

By integrating these versatile models, Nvidia's ecosystem not only improves the efficiency of AI development but also provides innovative tools and solutions. However, although the many upgrades of Nvidia NIM are indeed a "blessing" for the industry, they also bring many challenges to programmers.

By providing pre-trained AI models and standardized APIs, Nvidia NIM greatly simplifies the development and deployment of AI models. But does this also mean that job opportunities for ordinary programmers may shrink further in the future? After all, companies can complete the same work with fewer technical staff: much of it has already been done in advance by NIM, and ordinary programmers may no longer need to carry out complex model training and tuning.

02 Teaching AI to think in 3D and building a virtual physical world

NVIDIA also demonstrated the application of generative AI on OpenUSD and Omniverse platforms at the SIGGRAPH conference.

NVIDIA announced that it has created the world's first generative AI models that can understand geometry, materials, physics, and space based on the OpenUSD (Universal Scene Description) language, and has packaged these models as Nvidia NIM microservices.
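For readers unfamiliar with OpenUSD, a scene is described as a hierarchy of named "prims" with typed attributes, and it can be written out in a human-readable text form (`.usda`). The sketch below is only an illustration of what such a scene description looks like: it uses plain Python string formatting rather than the official USD libraries, and the prim names and values are made up for the example.

```python
def make_usda_scene(prim_name="Box", size=2.0, translate=(0.0, 1.0, 0.0)):
    """Return the text of a tiny .usda scene: one cube under a root transform.

    Hypothetical helper for illustration; real pipelines would normally use
    the OpenUSD libraries instead of building the text by hand.
    """
    tx, ty, tz = translate
    return f'''#usda 1.0
(
    defaultPrim = "World"
)

def Xform "World"
{{
    def Cube "{prim_name}"
    {{
        double size = {size}
        double3 xformOp:translate = ({tx}, {ty}, {tz})
        uniform token[] xformOpOrder = ["xformOp:translate"]
    }}
}}
'''


if __name__ == "__main__":
    # Print the scene text; saving it as scene.usda gives a file that
    # USD-aware tools should be able to open.
    print(make_usda_scene())
```

Text of this kind, covering geometry, transforms, and materials, is the sort of structured scene description that a model would need to read and produce in order to work with OpenUSD.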

As Nvidia NIM microservices make OpenUSD more capable and more accessible, industries of all kinds will be able to build physics-based virtual worlds and digital twins. With the new OpenUSD-based generative AI and the Nvidia accelerated development frameworks built on the Nvidia Omniverse platform, more industries can now develop applications for visualizing industrial design and engineering projects, and for simulating environments to build the next wave of physical AI and robots. In addition, new USD connectors link robotics and industrial simulation data formats with developer tools, allowing users to stream large-scale NVIDIA RTX ray-traced data sets to Apple Vision Pro.

In short, bringing USD into NVIDIA NIM lets large models understand the physical world better and build virtual worlds, and those virtual worlds are valuable digital assets. For example, in 2019, Notre-Dame de Paris suffered a serious fire and the cathedral was extensively damaged. Fortunately, Ubisoft game designers had visited the building countless times to study its structure and had completed a digital reconstruction of Notre-Dame de Paris. In the AAA game "Assassin's Creed Unity," they reproduced all the details of Notre-Dame de Paris, which greatly helped the cathedral's restoration. At that time, designers and historians spent two years replicating it, but with the advent of this technology, we can speed up the creation of digital copies on a large scale in the future and refine our understanding and replication of the physical world through AI.

Designers can also build basic 3D scenes in Omniverse and use these scenes to condition generative AI, making the content creation process controllable and collaborative. For example, WPP and Coca-Cola were the first to adopt this workflow to scale their global advertising campaigns.

Nvidia also announced several new NIM microservices that will further enhance developers' capabilities and efficiency on the OpenUSD platform, including USD Layout, USD Smart Material, and fVDB Mesh Generation.

NVIDIA Research presented more than 20 papers at the conference, sharing innovations that advance synthetic data generators and inverse rendering tools. Two of the papers won the Best Technical Paper Award. This year's research shows that AI is making simulation better by improving image quality and unlocking new 3D representations; at the same time, improved synthetic data generators and more content are making AI better. These studies showcase Nvidia's latest advances and innovations in AI and simulation.

NVIDIA stated that designers and artists now have new and improved ways to increase productivity by using generative AI trained on licensed data. For example, Shutterstock, a US image supplier, has released a commercial beta version of its generative 3D service, which allows creators to quickly prototype 3D assets from text or image prompts and generate 360-degree HDRi backgrounds to light a scene. Getty Images, a US image licensing company, has accelerated its generative AI service, doubling the speed of image generation and improving output quality. The services are based on Nvidia Edify, a multimodal generative AI architecture. With the new models, generation is twice as fast, image quality and prompt accuracy are improved, and users can control camera settings such as depth of field or focal length. Users can generate four images in about six seconds and upscale them to 4K resolution.

Conclusion

Whenever Jensen Huang appears in public, he is always in his leather jacket, painting a picture of the exciting future that AI will bring to the world.

We have also witnessed NVIDIA's growth from a gaming GPU giant to a dominant AI chip maker, and then to a full-stack AI player spanning both software and hardware. NVIDIA is full of ambition, iterating rapidly at the forefront of the AI technology wave.

From programmable shading GPUs and CUDA accelerated computing, to the launch of Nvidia Omniverse and generative AI NIM microservices, to the promotion of 3D modeling, robot simulation, and digital twin technologies, each step signals that a new round of innovation in the AI industry is coming.

However, as large companies have more resources, including funds, technology, and manpower, they may be able to adopt and implement advanced technologies such as Nvidia NIM more quickly. Small and medium-sized enterprises may find it difficult to keep up with the pace of technological development due to limited resources. Coupled with differences in talent and technology levels, will this lead to further technological inequality in the future?

Ideally, AI should free people's hands from labor and bring humanity a more productive world. But when productivity and the means of production are controlled by a small number of people, will it cause a deeper crisis? These are the issues we need to consider.

Editor/Somer