Source: Toonkawa Technology Review

Author: Ye Ziling

The next must-win battleground.

Earlier this year, foreign media revealed a “crazy plan” by Microsoft and OpenAI: spend 100 billion US dollars on an unprecedented custom-built data center. Yet in the face of such good news, Nvidia's feelings are mixed:

According to the news, OpenAI refused to use Nvidia's InfiniBand network devices and switched to the Ethernet camp [1].

As we all know, a data center often has thousands or even tens of thousands of servers; what connects these servers is network interconnection technology represented by InfiniBand and Ethernet.

Nvidia is the main player on the InfiniBand side and the exclusive supplier of the related hardware, such as switches and cables; the other technology companies are crowded onto the Ethernet track.

OpenAI's “defection” is very bad news for Nvidia.

InfiniBand and Ethernet have been competing with each other for many years. InfiniBand was once far ahead: in 2015, more than half of the top 500 supercomputers were using InfiniBand. But right now, as major customers defect one after another, InfiniBand is losing the game.

In July of last year, nine major Silicon Valley companies, including AMD and Microsoft, joined forces to establish the Ultra Ethernet Consortium (UEC), with the aim of defeating InfiniBand for good. In the first quarter of this year, Nvidia's InfiniBand network equipment revenue declined quarter-on-quarter [2]. Next to booming businesses such as data centers, the decline stands out.

So here's the question:

1. Why is InfiniBand, Nvidia's “own child,” at a disadvantage?

2. For Nvidia, why is connectivity a race that cannot be lost?

Factional strife

The original purpose of InfiniBand was to solve the biggest bottleneck in computing power — transmission speed.

When two servers are connected, the combined computing power of “1+1” is inevitably “less than 2,” because data moves between them far more slowly than each server can compute. Think of each server as a small town with 10,000 trucks that, because of road conditions, can send only 200 truckloads of goods to the neighboring town each day.

The data center is a kingdom made up of thousands of small towns. Transportation problems between towns seriously hamper the development of the entire kingdom.

The main culprit limiting transmission speed is an outdated network protocol.

The so-called network protocol can be simply understood as a kind of “traffic rule.” Information transmission between computers is carried out in an orderly manner according to this “traffic rule.” The original traffic rules were a network protocol called TCP/IP.

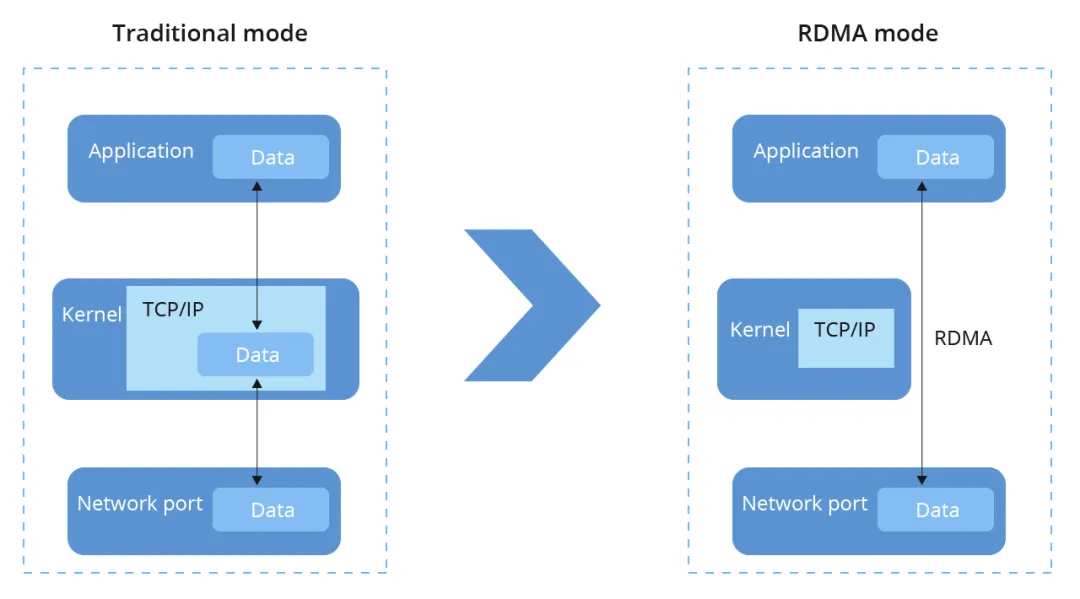

There is an obvious flaw in this traffic rule: transmitted data has to pass through the CPU, which consumes a great deal of CPU resources and causes particularly high latency.

It is as if the roads the trucks travel were lined with manual toll booths. At each one, the driver has to stop and take out a wallet to pay, causing serious congestion. The resulting efficiency is easy to imagine.

In this context, a new network protocol, RDMA (remote direct memory access), came into being. As the name suggests, it can bypass the CPU and directly access another server's memory. In other words, the new traffic rules removed all the manual toll booths on the expressway and replaced them with electronic toll collection (ETC).

However, based on the RDMA network protocol, the industry has derived two different implementation directions:

The first is an “external innovator.”

This camp rebuilt an entire set of network protocols around RDMA in pursuit of ultimate performance. The result is Nvidia's InfiniBand. Under these new traffic rules, data transmission can bypass both the CPU and system memory, which is equivalent to removing even the ETC lanes and letting GPUs exchange data directly.

The name InfiniBand (“infinite bandwidth”) expresses this pursuit of the ultimate.

The second is the “internal reformist.”

As a matter of fact, Ethernet is the most popular local area network technology, and almost all computer systems support Ethernet devices. The reformist approach is to use the RDMA network protocol to transform Ethernet.

It can be seen from this that the competition between InfiniBand and Ethernet is essentially a factional dispute along the same technology route.

At a time when the supply of computing power is seriously insufficient, the drastic innovation of InfiniBand should have been more popular in the market. However, the major Silicon Valley giants “flatly refused.” Not only Microsoft, but Meta is also choosing to fully embrace Ethernet.

The reason InfiniBand is so unpopular is that its innovation is too aggressive.

The price of radicalism

In 2019, three giants, Microsoft, Intel, and Nvidia, waged a fierce bidding war over the Israeli company Mellanox.

Mellanox is the sole provider of InfiniBand solutions, with a market capitalization of 2.2 billion US dollars. Intel set aside 6 billion US dollars in cash for the deal and thought it was in the bag. Unexpectedly, Nvidia was even more aggressive and snapped up Mellanox at the high price of 6.9 billion US dollars [7].

This is Nvidia's most expensive acquisition ever. However, Jensen Huang's all-in bet has brought Nvidia significant financial returns.

As mentioned earlier, InfiniBand is just a “rule of the road”; if you want to use this technology, you still have to use hardware.

However, because InfiniBand's innovation is so aggressive, its physical, link, network, and transport layers have all been redesigned. It does not work with traditional hardware, so the entire infrastructure, including dedicated switches, network cards, and cables, has to be replaced.

All of these supporting network devices are supplied exclusively by Nvidia.

It is as if InfiniBand defined a more efficient set of traffic rules that do not apply to the town's existing fuel trucks; to improve delivery efficiency, the town still has to buy a fleet of new energy trucks from Nvidia.

As can be seen from this, InfiniBand is actually a “dedicated” solution. By promoting this plan, Nvidia can expand bundled sales and sell dedicated supporting network facilities to customers.

As a result, InfiniBand has always been expensive to use. Technology companies need to spend 20% of their budget on InfiniBand when building a data center; if they switch to a generic Ethernet solution, the networking bill is half of that or less [8].
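The cost gap can be made concrete with a small calculation. The sketch below takes the 20% share and the “half or less” ratio from the text; the 1 billion US dollar build budget and the function name are hypothetical, chosen only for illustration.

```python
def network_cost_comparison(total_budget_usd, ib_share=0.20, ethernet_ratio=0.5):
    """Compare network spend for an InfiniBand build and an Ethernet build.

    ib_share:       share of the total budget spent on InfiniBand (from the text)
    ethernet_ratio: Ethernet network cost relative to InfiniBand
                    ("half or less" in the text; 0.5 is the upper bound)
    """
    ib_network = total_budget_usd * ib_share
    non_network = total_budget_usd - ib_network   # servers, chips, facilities
    eth_network = ib_network * ethernet_ratio
    eth_total = non_network + eth_network
    return {
        "ib_network": ib_network,
        "eth_network": eth_network,
        "savings": ib_network - eth_network,
        "eth_network_share": eth_network / eth_total,
    }


# Hypothetical 1 billion US dollar data center build.
result = network_cost_comparison(1_000_000_000)
for key, value in result.items():
    print(f"{key}: {value:,.4f}")
```

Under these assumptions, switching saves at least 100 million US dollars, and networking falls from 20% to roughly 11% of the total bill.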

To get technology companies to use InfiniBand, Jensen Huang has pulled out every trick in the book:

For example, Nvidia sells both InfiniBand and Ethernet network cards. The two have exactly the same circuit board design, but the Ethernet version takes significantly longer to deliver [9].

Nvidia's calculation is that InfiniBand is expensive but performs superbly. It can greatly speed up AI training, so models are finished and brought to market sooner. Won't the extra money pay for itself?

However, to Nvidia's embarrassment, the performance gap between InfiniBand and Ethernet has narrowed as the “internal reformist” camp has continued to grow.

In 2014, the RoCE v2 network protocol, the reformists' latest achievement, was released, ending InfiniBand's unchallenged lead. Last year, Nvidia launched separate switches for InfiniBand and Ethernet. Despite their different positioning, both can achieve 800 Gb/s end-to-end throughput.

Once the general-purpose solution scores 85 points, the dedicated solution starts to lose its appeal. For a lead of only 5 to 10 points, it is hard to persuade technology companies to pay double the price.

Meanwhile, the Ultra Ethernet Consortium, established in July of last year, plans to develop a new set of Ethernet protocols for large-model workloads on the basis of RoCE v2, with the goal of surpassing InfiniBand outright.

The new “anti-Nvidia coalition” is in full swing. As of March of this year, Chinese technology companies, including ByteDance, Alibaba Cloud, and Baidu, have also joined the group.

Faced with the Ultra Ethernet Consortium's “righteous gang-up,” Nvidia has put up dogged resistance.

Over the past year, Jensen Huang has mentioned InfiniBand less and less in public. In the future, the dispute between InfiniBand and Ethernet may gradually come to an end. However, Nvidia has not given up its slice of the networking pie and has instead bet its chips on its Spectrum-X Ethernet platform.

That is because networking is increasingly becoming the must-win battleground of the large-model era.

Next battleground

In January of this year, the American research firm Dell'Oro Group published a report stating that, with the explosion of artificial intelligence, technology companies' demand for connectivity has surged, driving a 50% expansion of the data center switch market [10].

The reason technology companies are so keen on networking is that they have gradually hit a ceiling during the unchecked expansion of the past year. Interconnection technology, represented by InfiniBand and Ethernet, is the key to breaking the bottleneck.

The first problem technology companies face is that computing power is too expensive.

Nvidia's AI chips have always been known for being expensive: the latest B200 chips start at 30,000 to 40,000 US dollars apiece. As we all know, a large model is a compute-devouring, money-swallowing beast that can never be fed enough. To meet everyday demand, technology companies usually need to purchase at least thousands of AI chips. Money burns faster this way than by shredding banknotes.

Developing your own chips runs into similar problems. Because chip manufacturing processes iterate slowly, raising the upper limit of a chip's computing power costs more and more.

However, because of transmission speed limitations, data centers do not use the full computing power of their chips. Compared with brute-force stacking of more chips, it is more cost-effective to increase data transmission speed and raise the utilization rate of the computing power already installed.
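A rough way to see why utilization matters is to ask how many extra chips would be needed to match a utilization gain. The sketch below is illustrative only: the cluster size and the 40% and 60% utilization figures are hypothetical, and the 30,000 US dollar unit price is simply the low end of the B200 range quoted above. Integer percentages are used to keep the arithmetic exact.

```python
def extra_chips_to_match(num_chips, old_util_pct, new_util_pct):
    """Chips that must be added at the OLD utilization to equal the effective
    compute of the existing cluster running at the NEW utilization.

    Effective compute is modeled as chips x utilization (a simplification).
    """
    target = num_chips * new_util_pct            # effective compute, in chip-percent
    chips_required = -(-target // old_util_pct)  # integer ceiling division
    return chips_required - num_chips


# Hypothetical cluster: 10,000 chips, utilization raised from 40% to 60%.
extra = extra_chips_to_match(10_000, old_util_pct=40, new_util_pct=60)
cost_avoided = extra * 30_000  # low end of the quoted B200 price range

print(f"Equivalent to buying {extra:,} more chips")
print(f"Hardware spend avoided: {cost_avoided:,} US dollars")
```

In this toy model, a faster network that lifts utilization by 20 percentage points is worth as much compute as 5,000 additional chips.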

The second issue is power consumption.

As data centers get bigger, power consumption is also skyrocketing. Zuckerberg mentioned in an interview that the power consumption of newly built data centers in recent years has reached 50-100 megawatts, and slightly larger ones have reached 150 megawatts. If this trend continues, 300, 500, or even 1,000 megawatts are only a matter of time [11].

However, according to the US Energy Information Administration, in the summer of 2022, California, where Silicon Valley is located, had a total power generation capacity of 85,981 megawatts [12]. Faced with more and more “power monsters,” the grid is starting to sweat.

To train GPT-6, Microsoft and OpenAI reportedly once set up a server cluster of 100,000 H100 GPUs. When they tested it, the local power grid simply gave out.
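To put these power figures side by side, here is a small back-of-the-envelope calculation. The capacity figure is the one cited from [12], read as 85,981 megawatts. The 700 W per GPU is an assumption not taken from the article (it is the published thermal design power of the H100 SXM module), and it ignores CPUs, networking, and cooling, so real cluster draw would be higher.

```python
CALIFORNIA_SUMMER_CAPACITY_MW = 85_981  # figure cited in the text [12]


def share_of_capacity(load_mw, capacity_mw=CALIFORNIA_SUMMER_CAPACITY_MW):
    """Fraction of the state's generation capacity consumed by one load."""
    return load_mw / capacity_mw


def gpu_cluster_power_mw(num_gpus, watts_per_gpu=700):
    """GPU-only power draw in megawatts (700 W per GPU is an assumption)."""
    return num_gpus * watts_per_gpu / 1_000_000


# Data center sizes mentioned in the Zuckerberg interview [11].
for load in (150, 300, 500, 1000):
    print(f"{load:>5} MW data center = {share_of_capacity(load):.2%} of capacity")

print(f"100,000 GPUs draw about {gpu_cluster_power_mw(100_000):.0f} MW")
```

On these assumptions, a single 1,000-megawatt site would take more than 1% of the whole state's capacity, and the GPUs alone in a 100,000-chip cluster already sit in the 50-100 megawatt range that Zuckerberg describes for an entire data center.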

Currently, Microsoft and OpenAI's solution is “distributed hyperscale cluster training across regions.”

In plain language, hundreds of thousands or even millions of AI chips are spread across multiple cities or regions and then linked into a single whole using InfiniBand or Ethernet. Networking once again plays a critical role [13].

If the rule of the large-model world is that brute force works miracles, then the value of connectivity is that it raises the physical ceiling on brute force and keeps the scaling-law flywheel turning for longer.

In the age of artificial intelligence, connectivity is bound to be one of the most important topics; for Nvidia and other technology companies, this is a game they can't lose.

Epilogue

In Silicon Valley, Nvidia looks more and more like the “evil dragon.” In networking, most technology companies stand on the opposite side from Nvidia. As for GPUs, it goes without saying: the major companies' efforts to develop their own chips and break free of Nvidia have long been an open secret.

A big reason Jensen Huang is so unpopular is that he has pocketed basically all the money there is to be made.

Whether in InfiniBand or AI chips, Nvidia has achieved a near-monopoly and has strong bargaining power. In contrast, technology companies are piling into AI but suffer from the lack of a mature business model. Looking back, everyone found that only one man in a leather jacket had made a fortune. Inevitably, they were unhappy.

So it is no wonder that the big Silicon Valley companies are starting to pursue “self-reliance.” After all, “poverty” is a driving force for progress.

Reference materials

[1] OpenAI Moves to Lessen Reliance on Some Nvidia Hardware, The Information

[2] With the rise of Ethernet, Nvidia InfiniBand is being encroached upon, Semiconductor Industry Observation

[3] Record of Jensen Huang's latest 20,000-word speech: Will break Moore's Law and release a new product. The robot era has arrived, Tencent Technology

[4] IB or RoCE? AI data center network interconnection, Haipi Smart Tour

[5] Is InfiniBand finally ready for prime time, Computerworld

[6] InfiniBand Insights: Driving High Performance Computing in the Digital Age, Speeding the Community

[7] Connecting the Dots on Why Nvidia Is Buying Mellanox, The Next Platform

[8] Greasing the Skids to Move AI from InfiniBand to Ethernet, The Next Platform

[9] Nvidia's Plans To Crush Competition — B100, “X100,” H200, 224G SerDes, OCS, CPO, PCIe 7.0, HBM3E, SemiAnalysis

[10] AI Demands Require New Network Buildouts, Expanding Datacenter Switch Market by 50 Percent, According to Dell'Oro Group

[11] AI GPU bottleneck has eased, but now power will constrain AI growth warns Zuckerberg, Tom's Hardware

[12] Energy Information Administration

[13] Why “connectivity” will be an important proposition for future technology investment, information equality

[14] The value geometry of AI Ethernet, Guosheng Securities

Editor: Somer