Source: Toonkawa Technology Review

Author: Chen Bin

In the end, OpenAI broke with the creed it was founded on.

Six months ago, an internal power struggle at OpenAI drew worldwide attention:

CEO Sam Altman was abruptly fired, then reinstated 106 hours later with employees rallying around him.

Since then, Ilya Sutskever, a co-founder and one of the leaders of that attempted “regicide,” has not been seen in public. Ilya is a star pupil of Geoffrey Hinton, the “godfather of deep learning,” and the soul of OpenAI. Before he disappeared from view, his last job was leading a safety team called “Superalignment.”

After the GPT-4o launch event, Ilya suddenly posted a tweet announcing his departure from OpenAI. The chief scientist's exit accelerated the split within OpenAI.

Three days later, another Superalignment scientist, Jan Leike, announced that he too was packing up and leaving. Leike publicly revealed that the two had serious disagreements with Altman and other senior executives, and that the safety team had been struggling.

By his account, OpenAI's culture is “deteriorating,” neglecting safety and shifting toward “shiny products.”

One after another, leading technical figures have chosen to leave.

An OpenAI employee told the media, “Safety-conscious employees have lost confidence in him. Trust is collapsing little by little, like dominoes falling down one by one [1].”

As is well known, OpenAI is an organization bound together by idealism. Over how to realize that ideal, however, two different routes gradually emerged inside OpenAI. Ilya's departure means that the idealism he represented has been thoroughly defeated by another kind of idealism.

The scientist and the daydreamer

One Sunday in 2005, an uninvited visitor turned up outside the office of Professor Hinton at the University of Toronto.

The visitor was a math student with an Eastern European accent who always seemed to wear a mournful expression. At the time, Hinton was one of the few scholars still studying deep learning, and he had set up a laboratory at the University of Toronto.

The student said he had spent the whole summer frying French fries part-time at a fast food restaurant, and that he now wanted to work in this lab.

Hinton decided to test this young man who was so eager to prove himself, and gave him a paper on backpropagation. Written in the 1980s, the paper is one of Hinton's most famous research results. A few days later the student came back and asked him, “Why don't you take the derivative and use a reasonable function optimizer?”

Hinton gasped. “It took me 5 years to think of that. [2]” This student was Ilya.

Hinton found that Ilya had exceptionally strong raw intuition and could find the right technical path by “sixth sense” [2]. As a result, Hinton regards Ilya as the only student who is more of a “genius” than himself [3].

Hinton soon discovered, however, that “technical genius” was only one side of Ilya.

Sergey Levine, a researcher who worked with Ilya for a long time, once said that Ilya likes big ideas and is never afraid to believe in them. “There are many people who aren't afraid, but he is especially unafraid. [2]”

In 2010, after reading a paper, Ilya boldly claimed that deep learning would transform computer vision; it only needed someone to push the research forward. In an era when deep learning was treated as crank science, Ilya's claim sounded like turning the natural order upside down.

However, it took him only 2 years to prove everyone wrong.

In 2012, AlexNet, built by Hinton, Ilya, and Alex Krizhevsky, reached an image recognition accuracy of up to 84%, showing the world the potential of deep learning and setting off a frenzied pursuit across the industry.

That year, Google paid the astronomical sum of 44 million dollars just to bring AlexNet's 3 authors into its fold.

While working at Google, Ilya began to believe in something even bigger: superintelligence beyond humans is just around the corner.

One reason was that he saw the rules of the deep learning game had changed.

Previously, only a small number of people were studying deep learning, and resources were limited. In 2009, Hinton briefly worked on a deep learning project at Microsoft and could not even get approval for a graphics card costing 10,000 US dollars, which left him fuming: “Microsoft is evidently a software seller that is short of cash. [2]”

After AlexNet, however, the influx of countless bright minds and hot money sped up the arrival of the future.

The other reason was that Ilya had believed in scaling laws since his student days. His “sixth sense” told him that superintelligence is not that complicated; it only requires more data and computing power.

And once again, Ilya proved to be right.

At the 2014 NIPS conference, Ilya presented his latest research: the Seq2Seq (sequence-to-sequence) model. Before the Transformer was born, it was the heart of Google's machine translation. Given enough data, a Seq2Seq model performs very well.

Ilya said in his talk that a weak model can never perform well. “The real conclusion is that if you have a very large data set and a very large neural network, then success is guaranteed. [4]”

The two faces of scientist and daydreamer set off a remarkable chemical reaction in Ilya.

As Ilya grew more convinced that superintelligence was coming, he paid more and more attention to safety. It was not long before he met kindred spirits.

The pinnacle of idealism

In 2015, Ilya received an invitation from Sam Altman, head of the startup investor Y Combinator, to attend a private gathering at the Rosewood hotel in Silicon Valley. Altman, however, was not the main character of this gathering.

Musk suddenly showed up and told everyone present that he was planning to set up an AI lab.

What had prompted Musk was his 44th birthday party a few weeks earlier.

Musk had invited Google CEO Larry Page and other friends to a resort for 3 days. After dinner, Musk and Page had a heated argument over AI. Musk thought AI would destroy humanity; Page was unconvinced and mocked him as a “speciesist” biased against silicon-based life [5].

Since then, Musk has hardly spoken to Page.

At the gathering, Musk and the others noted that few scientists consider the long-term consequences of their research. If large companies like Google were allowed to monopolize AI technology, they could well cause enormous harm without meaning to.

So they came up with a completely new model:

Set up a non-profit laboratory that is not controlled by anyone.

They would also pursue the holy grail of AGI (artificial general intelligence), but without a profit motive, and would open-source most of their research results. Musk and Altman believed that if everyone had access to powerful AI, the threat of “malicious AI” would be greatly reduced.

“The best thing I can think of is for humans to build real AI in a safer way,” said another organizer, Greg Brockman [6].

Ilya was moved by this romantic idea. For its sake he turned down an annual salary of 2 million dollars and resolutely joined OpenAI.

For its first 15 months, OpenAI had no specific strategic direction. Dario Amodei, then a Google scientist, visited OpenAI and asked what they were researching, and OpenAI's management could not come up with an answer on the spot. “Our goal now... is to do something good. [7]”

A few months later, Amodei moved to OpenAI to do something good together.

In March 2017, Altman and the other leaders realized they needed more focus. While planning the AGI roadmap, however, they ran into a serious problem: computing power could not keep up. The compute required by large models was doubling every 3-4 months, and a non-profit structure clearly could not sustain that.

At that point, Musk proposed merging OpenAI into Tesla, with himself in full control [8].

Musk, however, underestimated Altman's ambitions.

Altman has always been on the lookout for major scientific breakthroughs, hoping to build a trillion-dollar enterprise on one. Previously, YC's best-known investment was Airbnb. After Altman took over, YC went out of its way to seek out companies working on nuclear fusion, artificial intelligence, and quantum computing.

Marc Andreessen, venture capitalist and co-founder of a16z, once said, “Under Altman's leadership, YC's level of ambition has increased tenfold. [9]”

In February 2018, Altman won all of OpenAI's management over to his own camp. Musk then left the team, stopped talking to Altman, and cancelled his follow-up funding for OpenAI.

Two months later, Altman released OpenAI's Charter. In an inconspicuous corner, he had slightly altered the statement of the company's vision: “We anticipate needing to marshal substantial resources to fulfill our mission.”

At this point, the once highly idealistic OpenAI had quietly drifted onto a different course.

First split

In February 2019, OpenAI announced GPT-2 but, for the first time, did not open-source it. Later, GPT-3 went fully closed source, and OpenAI became “CloseAI.”

A month later, OpenAI changed its “non-profit” nature, set up a for-profit arm, and accepted a 1 billion dollar investment from Microsoft.

This sudden 180-degree turn began to split OpenAI into two opposing factions:

The safety camp, represented by Dario Amodei and Ilya, holds that AI must be shown not to threaten humanity before products are released to the public;

The accelerationists, represented by Altman and Brockman, want to speed up the spread of AI so that more people can use it to benefit the world.

The two factions thus work in completely opposite ways:

The safety camp advocates verifying safety first and releasing afterward; the accelerationists advocate expanding the market first and then adjusting based on test results and feedback.

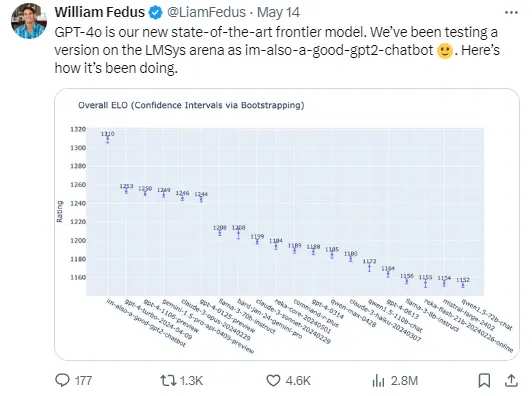

GPT-4o, released a few days ago, is typical of the accelerationist style. Before the launch event, OpenAI anonymously released a powerful model called “im-also-a-good-gpt2-chatbot,” which set off widespread speculation and discussion in the developer community.

It later turned out that this model was the precursor to GPT-4o; the point of Altman's mystery-making was to get curious onlookers to test it for him.

As the differences deepened, in 2021 safety advocate Dario Amodei, the same man who had come to do something good together, triggered OpenAI's first split. He believed OpenAI was turning from the “dragon slayer” into the “dragon,” growing ever more commercial and neglecting safety.

So he led a group of core employees out the door and founded another AI company, Anthropic.

Amodei set up Anthropic as a public benefit corporation, which allows it to pursue commercial profit and social responsibility at the same time. Today, Anthropic is OpenAI's biggest competitor.

Dario Amodei's departure caused great turmoil inside OpenAI, but Altman still tried to keep the two factions in balance.

Until the release of ChatGPT.

A failure of idealism

ChatGPT was a stopgap project.

At the time, OpenAI was going all out on GPT-4. But rumor had it that Amodei's Anthropic was developing a chatbot, so Altman hastily assigned employees to build a chat interface for the existing GPT-3.5. Altman called ChatGPT a “low-key research preview” that would help OpenAI gather data on how humans interact with AI.

OpenAI employees set up a betting pool to guess how many users it would get in 1 week. The boldest bet was 100,000 [11].

The actual figure was 1 million.

Two months later, this “low-key research preview” became the fastest app in history to pass 100 million users.

The balance between the two factions was completely broken.

An employee told the media, “After ChatGPT was born, OpenAI had a clear way to generate revenue. You can no longer present yourself as an 'idealistic lab'; there are now customers waiting to be served. [12]”

Since his student days, Ilya has believed that superintelligence is not far off. As ChatGPT set off an industry-wide race, his anxiety about safety deepened, and his conflicts with the accelerationists in management grew sharper.

Some people think he's getting more and more arrogant, while others think he's starting to feel more like a spiritual leader.

At OpenAI's 2022 holiday party, Ilya led all the employees in chanting “feel the AGI.” He has repeated the phrase on many other occasions, as if superintelligence were within easy reach.

Before last year's boardroom coup, Ilya told a reporter that ChatGPT might be conscious and that the world needed to recognize the true power of this technology.

This reporter has met Ilya more than once and does not think highly of him: every time they meet, Ilya says a lot of extremely crazy things [13].

In July 2023, Ilya started the “Superalignment” project, mounting a final rebellion against accelerationism.

In the context of AI, alignment is not some piece of insider slang; it is a technical term for bringing cutting-edge AI systems into line with human intentions and values. Ilya, however, believes that superintelligence will far surpass human intelligence, so human intelligence will no longer be adequate as a yardstick.

He mentioned that AlphaGo back then was a typical example.

In the second game against Lee Sedol, AlphaGo played its 37th move in a spot no one expected. Even the Chinese Go player Mi Yuting exclaimed from the commentary desk, “What on earth is this? Isn't that a mistake?” In hindsight, that move was precisely the key to AlphaGo's victory.

If even AlphaGo is this hard to fathom, the coming superintelligence will be far more so.

So Ilya recruited his colleague Jan Leike and formed the “Superalignment” team. The job description was simple: within 4 years, build a powerful AI system that could stand in for human intelligence on alignment tasks, with OpenAI dedicating 20% of its computing power to the project.

Unfortunately, Altman gave the safety camp no chance to fight back, and did not deliver the promised 20% of computing power.

At the end of last year, the last bullet Ilya fired also missed its mark. Once his attempt to “assassinate the king” failed, his fate at OpenAI was sealed.

Epilogue

Following the release of GPT-4o, Altman reaffirmed the revised company vision:

Part of OpenAI's mission is to put very capable AI tools in people's hands for free (or at a great price). On his blog he wrote, “We are a business and will find plenty of things to charge for, and that will help us provide free, outstanding AI service to (hopefully) billions of people. [15]”

In 2016, The New Yorker published a profile of Altman.

At the time, Altman's main role was head of YC. During the interview, the New Yorker reporter sensed a certain aggressiveness in Altman.

“He is rapidly building a new economy within Silicon Valley, which appears to be designed to replace the original Silicon Valley. [9]”

Now, as OpenAI leaves the Ilya era behind for good, Altman will go on pursuing his unfulfilled ambitions.

Reference materials

[1] “I Lost Trust”: Why the OpenAI Team in Charge of Safeguarding Humanity imploded, Vox

[2] Geoffrey Hinton | On Working with Ilya, Problems, and the Power of Intuition, Sana

[3] Deep learning revolution, Cade Metz

[4] NIPS: Oral Session 4 - Ilya Sutskever, Microsoft Research

[5] Ego, Fear and Money: How the A.I. Fuse Was Lit, New York Times

[6] Inside OpenAI, Elon Musk's Wild Plan to Set Artificial Intelligence Free, Wired

[7] The Messy, Secretive Reality Behind OpenAI's Bid to Save the World, MIT Technology Review

[8] The Secret History of Elon Musk, Sam Altman, and OpenAI, Semafor

[9] Sam Altman's Manifest Destiny, The New Yorker

[10] AI Debate: The Ethics of Sharing Understandable Programs, The Verge

[11] Inside the White-Hot Center of A.I. Doomerism, New York Times

[12] Inside the Chaos at OpenAI, The Atlantic

[13] Rogue Superintelligence and Merging with Machines: Inside the Mind of OpenAI's Chief Scientist, MIT Technology Review

[14] OpenAI Wants to Harness AI. It Should Pause Instead, The Information

[15] GPT-4o, Sam Altman

Editor/Somer