Source: Wall Street News

For every $1 spent on Nvidia GPUs, cloud providers have the chance to earn $5 in hosting revenue over four years. For every $1 spent on HGX H200 servers, API providers hosting Llama 3 services can earn $7 over four years.

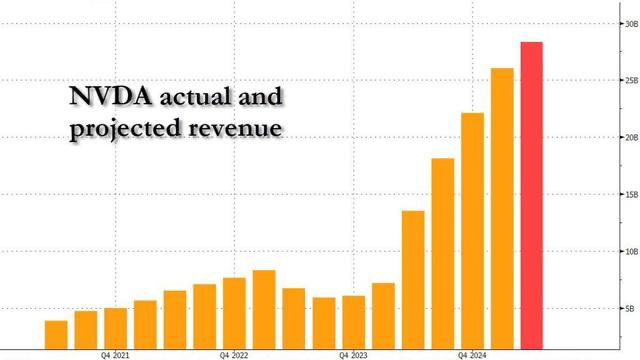

Overnight, Nvidia (NVDA.US), dubbed “the most important stock on Earth” and the third-largest weight in the S&P 500, reported results for the first quarter of fiscal 2025. Total revenue and data center revenue both set records for yet another consecutive quarter, rising 262% and 427% year over year respectively and far exceeding Wall Street expectations.

In the earnings call that followed, Chief Financial Officer Colette Kress did the math for analysts, stressing that in today's hot market, Nvidia chips quickly pay for themselves.

Kress said the strong growth in the data center business was driven by surging demand from enterprises and internet companies. She highlighted the importance of the cloud rental market, arguing that cloud services can help customers recoup part of what they spend on Nvidia chips. By her estimate, for every dollar spent on Nvidia's AI infrastructure, cloud providers have the opportunity to earn $5 in revenue over the next four years by renting out GPU computing capacity (GPU-as-a-service, or GaaS).

Kress reiterated that Nvidia offers cloud customers “the fastest model training speed, the lowest training costs, and the lowest inference costs for large language models.” She noted that the company's current customers include leading AI companies such as OpenAI, Anthropic, DeepMind, Elon Musk's xAI, Cohere, Meta, and Mistral.

Notably, Kress also described Nvidia's close collaboration with Tesla (TSLA.US). Tesla has amassed a total of 35,000 H100 GPUs for AI training. Kress expects automotive to become the largest enterprise vertical in Nvidia's data center business this year, representing a revenue opportunity worth several billion dollars.

She also said that Meta Platforms (META.US) trained its Llama 3 large language model on 24,000 Nvidia H100 GPUs, and that the GPT-4o demo OpenAI showed last week was powered by Nvidia H200 chips, whose inference performance is nearly double that of the H100.

Using Llama 3 as an example, Kress explained how AI companies can profit from offering API services. She stated:

For the Llama 3 model with 700 billion parameters, a single Nvidia HGX H200 server can output 24,000 tokens per second, serving more than 2,400 users at the same time. This means that for every $1 spent on an Nvidia HGX H200 server, an API provider hosting Llama 3 can earn $7 in revenue from Llama 3 token billing over the next four years.
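The throughput and payback figures in Kress's example can be sanity-checked with simple arithmetic. The Python sketch below is a rough illustration only: it assumes the server runs at its full quoted 24,000 tokens per second around the clock for all four years and that revenue accrues evenly year by year. Neither assumption comes from Nvidia, and the function names are hypothetical.

```python
# Back-of-the-envelope check of the figures quoted in the article.
# Assumption (not from Nvidia): the server runs at its full quoted
# throughput 24 hours a day for the whole four-year period.

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # ignores leap days


def per_user_tokens_per_second(server_tps: float, concurrent_users: int) -> float:
    """Throughput each user sees if the server's output is shared evenly."""
    return server_tps / concurrent_users


def lifetime_tokens(server_tps: float, years: float) -> float:
    """Total tokens generated at constant full utilization over `years`."""
    return server_tps * SECONDS_PER_YEAR * years


def annual_revenue_multiple(total_multiple: float, years: float) -> float:
    """Revenue per dollar of hardware per year, assuming revenue is spread evenly."""
    return total_multiple / years


if __name__ == "__main__":
    tps, users, years = 24_000, 2_400, 4
    print(per_user_tokens_per_second(tps, users))  # 10.0 tokens/s per user
    print(f"{lifetime_tokens(tps, years):.3e}")     # 3.027e+12 tokens
    print(annual_revenue_multiple(7, years))        # 1.75 (HGX H200 API hosting)
    print(annual_revenue_multiple(5, years))        # 1.25 (cloud GPU hosting)
```

Under these assumptions, each of the 2,400 concurrent users receives about 10 tokens per second, the server would emit roughly 3 trillion tokens over four years, and the $7 and $5 multiples work out to $1.75 and $1.25 of revenue per hardware dollar per year. Real-world utilization is unlikely to be 100%, so actual token volume would be lower.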

After the results were released, Nvidia shares jumped 6% in after-hours trading, breaking through the $1,000 mark and lifting its market capitalization to $2.3 trillion, the third largest among S&P 500 constituents.

Editor/jayden