Source: Brocade

Author: Qi Xin

Human society is quietly shifting from the Internet era to the computing power network era.

What few people realize is that, on the eve of this turning point, GPUs and HBM are not the only bottlenecks. Switches, which drew little market attention until now, are also stifling AI computing power.

An all-out offensive by Nvidia (NVDA.US) versus an alliance poised to strike back: after the GPU and HBM, this is AI's third battle, and one of the great duels in the history of technology, fought over switches, is about to unfold.

01 The Cisco hangover

If you compare AI computing power to the human body, you can think of it this way: AI chips (GPU + HBM + CoWoS) are the heart, acceleration software such as CUDA is the brain, optical modules are the joints, cables and optical fibers are the blood vessels, and network devices, represented by switches, are the throat. Together, these devices make up an entire server cluster.

Of these, AI chips, CUDA, optical modules, and cables have all been discussed over and over and are by now well-worn topics. What is surprising is that switches, one of the core networking devices, have always been snubbed and remain AI's overlooked weapon.

By definition, a switch operates at the data link layer of the OSI network model and intelligently decides which port to forward each data frame out of, enabling data exchange and traffic management in the network. The core role of a switch is therefore to improve network performance and efficiency and to support network expansion and management. Put simply, switches are the hardware carriers of the "network effect."
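To make "deciding which port to forward a frame out of" concrete, here is a minimal sketch of the classic MAC-learning behavior of a layer-2 switch. The class and method names are hypothetical, and real switches do this in hardware with aging timers, VLANs, and broadcast handling that this toy version omits.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LearningSwitch:
    """Toy layer-2 switch that learns which port each MAC address sits behind."""

    num_ports: int
    mac_table: dict[str, int] = field(default_factory=dict)

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        """Handle one frame and return the ports it is forwarded out of."""
        # Learn: the sender is reachable through the port the frame arrived on.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the one it came in on.
            return [p for p in range(self.num_ports) if p != in_port]
        if out_port == in_port:
            # Destination is on the sender's own segment: filter (drop) the frame.
            return []
        # Known destination: forward out of exactly one port.
        return [out_port]


sw = LearningSwitch(num_ports=4)
print(sw.receive(0, "aa:aa", "bb:bb"))  # [1, 2, 3]  (bb:bb unknown, so flood)
print(sw.receive(1, "bb:bb", "aa:aa"))  # [0]        (aa:aa was learned on port 0)
print(sw.receive(0, "aa:aa", "bb:bb"))  # [1]        (bb:bb now learned on port 1)
```

After a short learning phase, traffic goes only where it needs to, which is how a switch improves network efficiency compared with simply repeating every frame to every port.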

Judging by market size, switches matter too. According to the latest data from IDC's "2023 Internet Market Tracking Report", the global network equipment market was worth US$71.4 billion in 2023, of which switches accounted for more than US$40 billion. That makes switches the largest core computing hardware category after AI chips and servers, and far larger than the much-discussed optical modules and high-speed HBM memory.

Jensen Huang has in fact publicly acknowledged the central role of switches. He once revealed that the InfiniBand network (hereinafter IB) accounts for about 20% of the total investment in an AI cluster. A quick primer: the IB network is the server-to-server computing communication network Nvidia built through its subsidiary Mellanox, and its core switches are Nvidia's own IB switches.

Since they are so important from a technical point of view and not small in terms of market size, why are switches never taken seriously by everyone?

I believe the main reason is that switches have been typecast as supporting actors in network architecture. New terms like HBM and CoWoS come wrapped in grand stories of tenfold upside. Mention switches, however, and everyone reflexively thinks: Isn't this just Cisco's product from the 2000 dot-com bubble? Can it still sell?

Over 20 years ago, Cisco (CSCO.US), as the undisputed global leader in network equipment, enjoyed the same position Nvidia does today. In 2000, Cisco held more than 60% of the network switch market and more than 80% of the router market. It is fair to say that without Cisco's network equipment, the Internet would not have flourished as it later did. Back then, Cisco was known as the "shovel seller" of the Internet.

Everyone probably knows what happened next. When the Internet bubble burst, Cisco's stock was hit hard, falling more than 70% from its peak, and it took some 20 years to barely work off the frenzied valuation of that era. The technology industry naturally loves the new and tires of the old, so the switch, an old face, was easily passed over. What's more, the memory of getting burned back then is still etched into every investor's reflex arc.

However, such preconceptions belong to the past. It is time to re-examine our understanding of switches, especially AI switches, for a simple reason: the signals from all sides are already very clear. Here are just two examples:

Leading companies worldwide have begun fighting fiercely over new switches and network architectures. Besides Nvidia, mentioned above, the Ultra Ethernet Consortium is also very active; it is analyzed in detail later.

US stock investors are also starting to chase switch stocks. Among the latest AI switches, the leader is undoubtedly Nvidia's subsidiary Mellanox, but since its financials are not disclosed separately, few details are visible. The leader among third-party AI switch vendors is not Cisco, mentioned earlier, but the later-emerging star Arista Networks (ANET.US). Its stock did not outperform the NASDAQ during the first AI rally in the first half of 2023, but it has been rising at an accelerating pace since the end of 2023, a sign that US investors are reassessing its importance.

02 The third wall

Switches deserve a fresh look today because the products themselves have changed significantly and their importance in the network has risen markedly, to the point that they have become one of the three high walls of AI infrastructure.

1. AI spawns a revolution in computing power networks

It may seem that network architecture has stood still for a long time. Looking back at its history, the last major change dates back more than 10 years, to the "network follows the cloud" shift when cloud computing exploded. After more than a decade of stagnation, we now see AI sparking the next revolution in network devices and driving network architecture from the Internet era into the computing power network era.

How should computing power networks be understood? In the past, the core task of network devices on the Internet was real-time communication, so a switch was essentially a communication device. In today's AI computing centers, the logic of building the network is to concentrate resources on one big job: aggregating ever more powerful computing power. Switches are no longer just communication devices; they have become computing power devices in their own right.

Many readers will probably disagree. Bear with me; the explanation follows.

As we all know, this round of AI success is an engineering breakthrough in which brute force worked miracles. The guiding rule behind it is the "scaling law", which describes a power-law relationship between model performance and model scale: as the scale of a model (number of parameters, dataset size, and compute) increases, its performance improves.
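The scaling law is usually written as a power law. One common form, from OpenAI's 2020 scaling-law study, gives a language model's loss as L(N) = (N_c / N)^α for N parameters. The constants below are illustrative assumptions of roughly the magnitude reported in that study, not figures from this article; the point is the shape of the curve.

```python
def power_law_loss(n_params: float, n_c: float = 8.8e13, alpha: float = 0.076) -> float:
    """Power-law scaling: predicted loss L(N) = (N_c / N) ** alpha.

    n_c and alpha are illustrative constants assumed for this sketch.
    """
    return (n_c / n_params) ** alpha


for n in (1e9, 1e10, 1e11, 1e12):
    print(f"{n:.0e} parameters -> predicted loss {power_law_loss(n):.3f}")

# Every 10x increase in N multiplies the loss by the same factor, 10 ** -alpha.
print(f"loss ratio per 10x parameters: {10 ** -0.076:.3f}")
```

Because each improvement of the same relative size costs another order of magnitude in scale, the law translates directly into an appetite for ever more compute.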

In other words, to get intelligence to emerge from large AI models, the scaling law tells you to keep piling up compute and data. This is why, in early March 2024, Jensen Huang said during a talk at Stanford University that Nvidia would increase the computing power for deep learning by a factor of 1 million over the next 10 years. This is not bubble-era boasting but a necessary condition for the emergence of AI intelligence.
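A quick calculation shows what a 1,000,000x gain in 10 years implies per year, and how little of it a single chip might supply. The "single-chip performance doubles every 2 years" figure is a Moore's-law-style assumption made only for comparison, not a claim from the article.

```python
# Back-of-the-envelope check of a 1,000,000x compute gain over 10 years.
target_multiple = 1_000_000
years = 10

# Compound annual growth factor needed to reach the target.
annual_factor = target_multiple ** (1 / years)

# Assumption for comparison only: single-chip performance doubles every 2 years.
single_chip_multiple = 2 ** (years / 2)

# Whatever single chips do not deliver must come from scaling out across networked chips.
scale_out_multiple = target_multiple / single_chip_multiple

print(f"required growth per year: {annual_factor:.2f}x")                # 3.98x
print(f"single chip over {years} years: {single_chip_multiple:.0f}x")   # 32x
print(f"left for scale-out: {scale_out_multiple:,.0f}x")                # 31,250x
```

Under these assumptions, almost all of the gain has to come from connecting more chips together, which is exactly the territory of switches.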

Computing power must grow at this staggering rate to satisfy the scaling law. From a hardware perspective, there are three walls along the way:

1) Computing power wall: centered on the GPU, the AI hardware that gets the most attention. The key technical levers for breaking this wall are more advanced manufacturing processes and better chip architectures. However, the single-chip gains from process upgrades already pale against AI's black-hole-like demand. After all, moving to 3nm brought only modest gains for Apple's latest A17 chip. In fact, Nvidia's GPUs still use a 4nm-class process, and even the upcoming B100 will not move to 3nm. A multiple of a few times in compute from one generation to the next may already be the ceiling for a single chip.

2) Storage wall: centered on HBM. Where single-chip compute falls short, HBM can greatly mitigate the bottleneck, and HBM has instead become one of the fastest-advancing links. The recent sharp rise in SK Hynix and Micron shares shows that the market is beginning to recognize the importance of this direction.

3) Communication wall: compute plus HBM solves the single-card problem, but no matter how strong a single card is, it falls far short of what applications demand. The next step is to pile up hardware. Leaving aside the jargon, the principle is to work a simple, brute-force miracle by connecting as many high-quality compute cards as possible into a cluster. This is essentially the same idea as the 27 engines on SpaceX's Falcon Heavy rocket. The key technology is data center networking, which is why the status of switches is no longer what it used to be.
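Why does the network become a wall? One common back-of-the-envelope model, the bandwidth term of a ring all-reduce used to synchronize gradients in data-parallel training, shows that the time for each synchronization barely shrinks as you add cards; it is set by per-GPU link bandwidth. The model size, precision, and 400 Gb/s link below are illustrative assumptions, and real training frameworks use more elaborate collectives and overlap communication with compute.

```python
def ring_allreduce_seconds(n_gpus: int, buffer_bytes: float, link_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N times the buffer size;
    per-hop latency and compute/communication overlap are ignored.
    """
    if n_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    bytes_per_gpu = 2 * (n_gpus - 1) / n_gpus * buffer_bytes
    return bytes_per_gpu / link_bytes_per_s


# Illustrative assumptions: 70B-parameter model, 2-byte gradients, 400 Gb/s (50 GB/s) per GPU.
grad_bytes = 70e9 * 2
link = 400e9 / 8
for n in (8, 64, 1024):
    print(f"{n:5d} GPUs: {ring_allreduce_seconds(n, grad_bytes, link):.2f} s per full gradient sync")
```

The per-sync time approaches 2 × buffer / bandwidth no matter how many GPUs join, so the only way to shorten it is a faster network.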

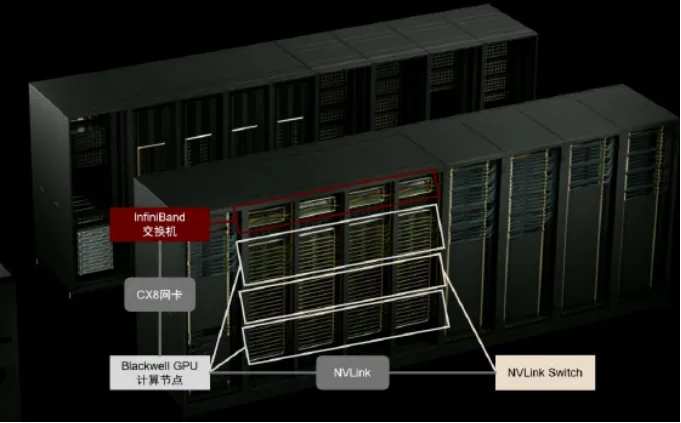

The latest Nvidia GB200 cluster network architecture shows the multi-tier networking approach very clearly: 1) GPU cards are interconnected card-to-card over the NVLink protocol. Since Nvidia monopolizes the switch chips for this layer, there is little to discuss from an industry-chain perspective, so it is not covered further here. 2) At the next level, IB switches connect multiple groups of GPU cards into a complete rack. 3) Multiple racks are then interconnected through switches to form a powerful AI computing center. The last two steps both depend on AI switches.
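The tiered structure described above resembles, in textbook terms, a folded-Clos (fat-tree) network, and a simple count shows why each extra switch tier matters. This is a generic illustration, not the GB200's actual topology, and the 64-port switch radix is an assumption chosen for round numbers.

```python
def max_hosts(k: int, tiers: int) -> int:
    """Endpoints reachable by a non-blocking folded-Clos (fat-tree) fabric of k-port switches.

    Uses the standard result 2 * (k/2) ** tiers: one switch serves k hosts,
    a two-tier leaf-spine serves k**2 / 2, and a three-tier fat-tree serves k**3 / 4.
    """
    if k < 2 or k % 2:
        raise ValueError("port count k must be an even number >= 2")
    if tiers < 1:
        raise ValueError("need at least one tier")
    return 2 * (k // 2) ** tiers


for tiers in (1, 2, 3):
    print(f"{tiers}-tier fabric of 64-port switches: {max_hosts(64, tiers):,} endpoints")
```

Each added tier multiplies reach by roughly k/2, so switch port count and the number of tiers, not just the number of GPUs, set how large a cluster can grow.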

The architecture makes the point clearly: to break through the communication wall and build a powerful computing base, the switch is no longer just a communication device in the computing network but part of the computing power itself. It is this redefinition of one link in the industrial chain that gives the entire switch industry chain grounds for a revaluation.

In this computing power network revolution, switches have officially taken center stage in the industrial chain alongside GPUs, HBM, advanced packaging, and optical modules.

2. The first time you hear the song, you miss its meaning; by the second listen, you are living it

In fact, this shift in the industrial chain did not begin with Nvidia's 2024 GB200 showdown with the rest of the world; the earliest signal came 5 years ago.

In 2019, Nvidia spent $6.9 billion, beating out Intel and Microsoft, to acquire Mellanox, which was little known at the time. Back then, I didn't understand why a chip company wanted a switch maker either. I simply saw it as a small acquisition for a cash-rich Nvidia and never carefully analyzed the industrial-chain synergies.

However, with the explosion of AI computing power, Mellanox's importance soared: it became the main supplier of Nvidia's IB switches and Spectrum-X Ethernet switches, holding the largest share of AI network equipment. It is no exaggeration to say that, with Arista's market capitalization now close to US$100 billion, Mellanox could easily be worth US$300 billion, about 43 times the US$6.9 billion paid back then, far outpacing the rise in Nvidia's own market value over the past 5 years.
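The "43 times" figure is simple division, and converting it to an annual rate makes the comparison with other assets easier. The US$300 billion value is the author's estimate, and the 5-year horizon is taken from the text; the annualized rate is just the compound growth implied by those two numbers.

```python
purchase_price_bn = 6.9    # 2019 acquisition price, US$ billions (from the text)
implied_value_bn = 300.0   # the author's estimate of Mellanox's value today, US$ billions
years = 5

multiple = implied_value_bn / purchase_price_bn
annualized = multiple ** (1 / years) - 1   # implied compound annual growth rate

print(f"value multiple: {multiple:.1f}x")   # 43.5x
print(f"implied annual growth: {annualized:.0%}")
```

A multiple of about 43.5x over five years means the implied value roughly doubled every year.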

Jensen Huang was very proud of this acquisition at the time, calling it the combination of two of the world's leading high-performance computing companies. In other words, 5 years ago he already ranked Mellanox as important as Nvidia itself. Looking back, I have to marvel at how sharp his eye was.

How can Mellanox be on a par with Nvidia? Mellanox's main products are interconnect solutions inside data centers, and the most important of these are network equipment built on the IB protocol. This calls for a closer look at the IB protocol.

In 1999, North American computing giants took the lead in forming the InfiniBand alliance, aiming to replace the PCI bus and become the new standard for interconnecting intelligent devices. IB has RDMA (Remote Direct Memory Access) built in, which lets a server read and write another server's memory, or GPU memory, directly without going through the remote CPU. In AI GPU clusters, for example, RDMA speeds up card-to-card data exchange and greatly reduces latency.

However, IB gradually faded from view, and device interconnects remained firmly in the hands of the more cost-effective PCIe protocol. Because IB requires dedicated network cards and switches, its hardware costs are high, and it lost ground to Ethernet solutions. First class is nice, but few can afford it.

Later, even Intel, one of IB's founders, chose to quit; in the end, only Mellanox stubbornly stuck to this "wrong" path. Shortly after its founding, Mellanox joined the IB alliance and launched related products, and by 2015 its share of the global IB market had reached 80%. Despite that lead, it went unnoticed in a niche market. After Nvidia acquired it in 2019, IB effectively changed from an open industry protocol into Nvidia's de facto proprietary one, and it still drew little attention.

It was not until 2023, an era when computing power is king, that the IB protocol shot to fame. With the arrival of large AI models, the computing power gap suddenly became enormous, and IB, a key accelerator for parallel computing, became the optimal solution. Its hardware carrier is the IB switch.

Led by Nvidia and Mellanox, the market is ramping up shipments of high-speed switches. IDC expects the high-speed switch market to grow 54% in 2023 and 60% in 2024, a sign of acceleration in 2024. Since Mellanox is an Nvidia subsidiary and cannot be invested in directly, US investors are turning to Arista, the purest-play switch brand. Although it trails Mellanox, it is the largest supplier of high-speed switches to the major cloud providers.
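Compounding IDC's two growth rates shows how quickly the high-speed switch market expands. This sketch assumes, as an interpretation, that the 54% and 60% figures are consecutive year-over-year rates, so the result compares the 2024 market with a 2022 baseline.

```python
def compound_multiple(growth_rates: list[float]) -> float:
    """Multiply out year-over-year growth rates (0.54 means +54%)."""
    multiple = 1.0
    for rate in growth_rates:
        multiple *= 1 + rate
    return multiple


idc_growth = [0.54, 0.60]  # IDC's expected high-speed switch growth (from the text), assumed consecutive years
print(f"2024 market vs. 2022 baseline: {compound_multiple(idc_growth):.2f}x")  # 2.46x
```

Under that reading, the market would roughly two-and-a-half-fold in two years.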

In a nutshell, the high-speed switch link makes it easy to see that the information revolution has moved from the Internet era into the computing power network era. If you are still only talking about the Internet this year, you have been left behind; likewise, if you are only talking about GPUs, you have grasped only one of the three key points.

03 “League of the Lost”

1. What Nvidia + Mellanox are up to: taking the whole pie

Nvidia's product roadmap chart, which includes its switch lineup, helps explain the company's strategy. The chart is dense with information and worth studying closely.

First of all, Nvidia's ambitions are huge. It has never wanted to be just a chip company selling cards; it wants to be the computing power solution provider of the AI era. Put in terms of its business model: "I never just wanted to sell you shovels; I'm selling you the whole mine. Don't call Nvidia a shovel seller."

Nvidia's 2021-2025 product roadmap announces this ambition to the world. The line in bold is its core GPU product: from the A100 to the H100, then this year's B100, and the X100 in 2025. The route is very clear, and it is where the AI industry chain focuses its attention.

What is often overlooked, however, is the lower half of the chart, where Nvidia also lays out the roadmap for its supporting switches, split into an IB series and an Ethernet series:

Paired with Nvidia's IB protocol are the Quantum switches supplied by Mellanox, which move from 400G to 800G this year and to 1.6T next year. Notably, optical modules must be upgraded along the way as well, from 800G to 1.6T and then to 3.2T, and Mellanox can supply some of these too.

Many customers already feel boxed in because they can only buy Nvidia GPUs, so many companies are reluctant to buy IB as well and stick with Ethernet. For these customers, Nvidia offers Spectrum-X Ethernet switches, which likewise step up from 400G to 800G this year and to 1.6T next year, though with lower interconnect efficiency than IB. These switches also come from Mellanox.

So in Jensen Huang's plan, a supercomputing center built specifically for AI uses the fastest IB switch solution; for existing Ethernet computing centers, or if the customer insists, Nvidia can match that with an Ethernet solution built on Spectrum-X switches. Simply put, whether for today's business or tomorrow's, Nvidia has you covered.

As the chart also shows, Nvidia's ambitions go far beyond selling chips and switches together.

If customers buy Nvidia's chips and switches together, they are not far from buying Nvidia's complete AI computing cluster solution (top half of the chart). In that solution, Nvidia sells downstream customers an entire AI computing cluster built from its own GPUs, its own network equipment, and its own CUDA. This is the "AI factory" model, worth several times more than selling GPU cards alone.

More importantly, if the whole picture comes true, Nvidia will have redrawn the software and hardware division of labor for this AI wave: software companies such as cloud vendors take on the frantic trial and error of finding business models, while the hardware company Nvidia builds the full AI computing stack and collects steady returns come rain or shine.

As an aside, the chart also shows that Nvidia does not plan to get into HBM or advanced process manufacturing, so TSMC and SK Hynix remain safe for now.

2. Nvidia Phobia: League of Counterattackers

Don't be fooled by how politely the bosses of major tech companies treat Nvidia when asking for GPUs. In reality, fear of Nvidia grows by the day in Silicon Valley, and those same bosses are busy discussing over dinner how to break free of it. Faced with Nvidia's open offensive, the once-scattered non-Nvidia camp has shown unprecedented unity.

The barriers around GPU chips and the CUDA ecosystem seem too high. Google, for example, has worked on TPUs for many years with limited results. Most players have chosen to lie flat and accept Nvidia's GPU monopoly, which is why Nvidia's GPUs can command gross margins of over 90%, making them the most lucrative hardware in history.

However, because the technical barriers are relatively low and the industry is in the early stages of transition, network protocols and switches have become everyone's best chance to breach Nvidia's fortress.

At this point the switch, a network device long taken for granted, has suddenly become a hidden battlefield for AI computing power. To catch up with Nvidia's IB approach, the Ultra Ethernet Consortium (UEC) was formally established in July 2023 and quickly became a lifeline for the major players. Intel (INTC.US), Microsoft (MSFT.US), Meta Platforms (META.US), Broadcom (AVGO.US), AMD (AMD.US), Cisco (CSCO.US), Arista Networks (ANET.US), Eviden, HPE, and others have joined one after another.

The Ethernet camp's answer draws on RoCEv2 (RDMA over Converged Ethernet), which carries the key RDMA technology mentioned earlier over Ethernet. The aim is clear: to match IB's performance.

Currently, Ethernet has two advantages as a latecomer:

1) Price: according to industry-chain research, Nvidia's IB solution costs 20-30% more than an Ethernet solution, so Ethernet can win back ground on cost-performance.

2) Installed base: traditional data centers run almost entirely on Ethernet, so upgrading to Ultra Ethernet offers far better compatibility. Protocols are the language hardware speaks, so Ethernet has naturally become mainstream.
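How much does the price advantage matter at the cluster level? Combining Jensen Huang's figure, cited earlier, that the IB network is about 20% of an AI cluster's cost with the 20-30% IB premium gives a rough answer. This sketch assumes the premium applies to the whole network bill and that all non-network costs stay the same.

```python
def cluster_saving(network_share: float, ib_premium: float) -> float:
    """Share of total cluster cost saved by swapping an IB network for Ethernet.

    network_share: fraction of the IB cluster's cost spent on the network.
    ib_premium: how much more IB costs than Ethernet (0.25 means 25% more).
    All non-network costs are assumed unchanged.
    """
    ethernet_share = network_share / (1 + ib_premium)
    return network_share - ethernet_share


for premium in (0.20, 0.30):
    saving = cluster_saving(0.20, premium)
    print(f"IB premium {premium:.0%}: Ethernet saves about {saving:.1%} of total cluster cost")
```

Under these assumptions the saving is roughly 3-5% of total cluster cost, a useful yardstick when weighing price against interconnect performance.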

AMD, the second-largest GPU vendor and a UEC member, spells out these two points more clearly: Ethernet will be AMD's basic protocol for building computing clusters, because it offers better performance, stronger large-scale clustering capabilities, and, most importantly, openness. AMD hopes to work with leading switch makers to cut networking costs and build more cost-effective networks.

It is for these two reasons that many people remain confident in the Ultra Ethernet Consortium. Whether Ethernet wins or IB dominates, only time will tell. Either way, this rivalry between Nvidia and the Ultra Ethernet camp should be thrilling, and it is sure to become a classic episode in the history of technology, retold again and again.

However, I tend to think Ultra Ethernet's chances of winning are overrated. Under the guidance of the scaling law, the AI computing network race is about speed, not price. Without the best solution, strength in numbers is just a crowd. It is like the bicycle: certainly the more economical way to travel, with plenty of riders, but nobody rides one when they need to go fast. The window left for the Ethernet camp may not be long.

3. Domestic players still trail the Ethernet camp by half a generation

The article could end here, but since quite a few readers probably care about the progress of switches in China's domestic computing networks, I will go into more detail.

In Internet-era infrastructure investment, thanks to forward-looking spending by telecom operators, China's network speeds and penetration rates led the world. This directly fueled China's later mobile Internet boom and gave Chinese Internet companies global competitiveness.

Many people think that in the AI era, China can follow the same playbook and overtake from behind.

The sad reality, however, is that in the computing power network era, our computing infrastructure lags far behind. We have a lot of catching up to do on GPUs, HBM, and advanced packaging, and we have no advantage in switches, which embody computing clustering capability, either. We have no IB switches and can only use Ethernet switches, and our Ethernet switch generations lag overseas by one generation, leaving us half a step behind the Ultra Ethernet camp.

Fortunately, as with other network equipment, China has long been strongly competitive in switches, thanks to Huawei's efforts more than 20 years ago and the later incubation of H3C. Today, switches in domestic AI computing networks follow the same pattern as in backbone and data center networks, with H3C and Huawei as the main players.

The best time to plant a tree was ten years ago; the second-best time is now. Although we are far behind in AI switches, with our historical accumulation and collective strength, if we start catching up now, we may yet earn a seat at the table in this game.

Editor/Somer