This article is drawn from the Shenwan Hongyuan Research report "The Competition of Artificial Intelligence Chips: GPU Is Popular, ASIC Embraces the Future," written by securities analyst Liu Yang.

Zhitong Financial APP has learned that Shenwan Hongyuan Research published a research report analyzing and comparing the main types of artificial intelligence chips. The details are as follows:

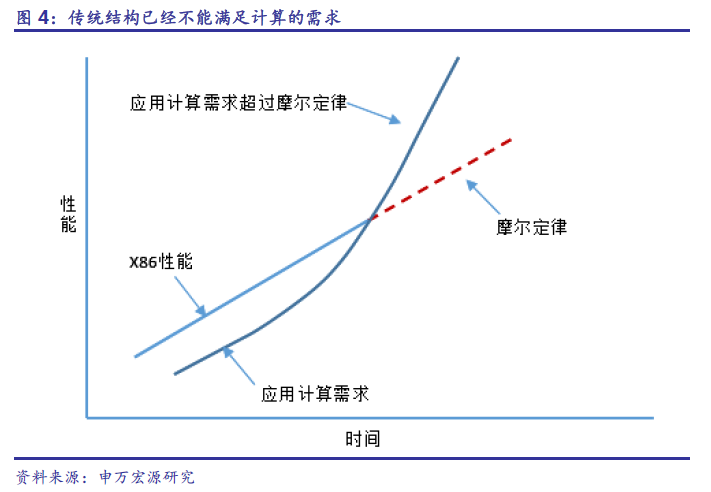

AI computing demand is outpacing the CPU computing power supplied under Moore's Law

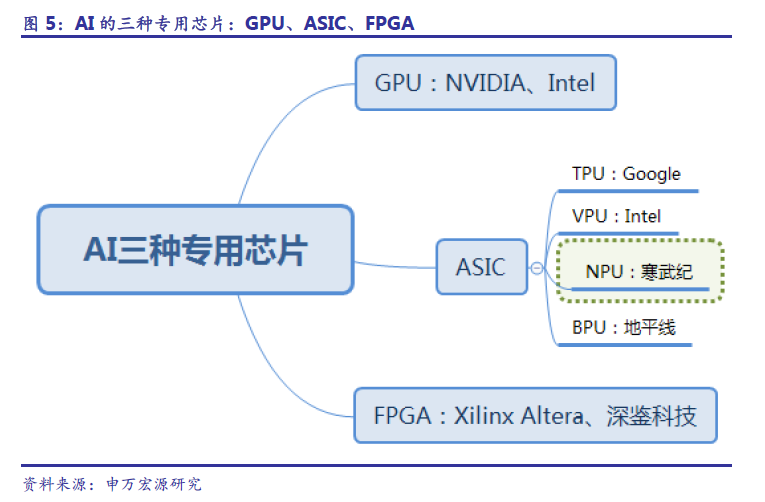

The three pillars of artificial intelligence are hardware, algorithms and data. Hardware refers to the chips that run AI algorithms and the corresponding computing platforms. On the hardware side, GPUs are currently the main choice for the parallel computation of neural networks, while FPGAs and ASICs have the potential to become new forces in the future.

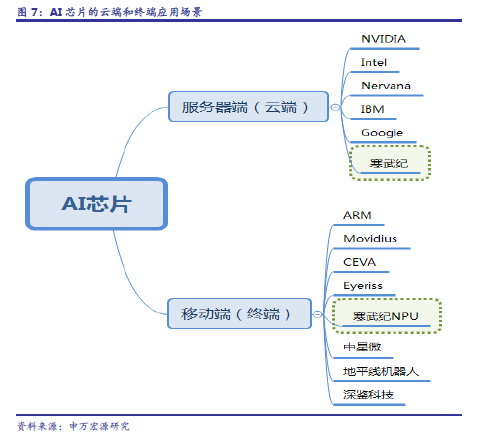

At present, AI chips can be divided into cloud (server) chips and terminal (mobile) chips. Cloud chips are mainly those used in dedicated neural network servers in public clouds, private clouds and data centers, while terminal chips serve mobile applications such as mobile phones, vehicles, security, audio devices and robots. Some manufacturers can design both cloud and terminal chips.

As machine learning has continued to evolve, deep learning has emerged. Artificial intelligence is a broad term for a category of applications, and machine learning is currently the most effective way to realize it. Deep learning is a subfield of machine learning and is also the most effective of the existing machine learning methods.

The artificial neural network algorithms used in deep learning differ from the traditional computing model. Traditional software is written by programmers according to the functions to be implemented and then loaded onto the computer to run; its computation consists mainly of executing those instructions. A deep learning neural network, by contrast, involves two computational processes:

1. Training: existing sample data are used to train the artificial neural network.

2. Execution (inference): the trained network is run on new data.
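As a minimal sketch of these two processes, the Python below assumes a single artificial neuron (logistic regression) and a made-up four-sample dataset (the logical OR function); neither the model nor the data comes from the report. Training repeatedly adjusts the weights against the sample data, while execution is just one forward pass with the weights held fixed.

```python
import math

# Hypothetical toy dataset (not from the report): inputs and labels for logical OR.
SAMPLES = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Execution (inference): one forward pass with fixed parameters."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def train(samples, epochs=2000, lr=0.5):
    """Training: repeatedly adjust parameters to reduce error on the sample data."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, y in samples:
            p = predict(weights, bias, x)
            # For a sigmoid output with cross-entropy loss, dLoss/dz = p - y.
            err = p - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


if __name__ == "__main__":
    w, b = train(SAMPLES)  # expensive, done once on sample data
    for x, _ in SAMPLES:
        print(x, round(predict(w, b, x), 3))  # cheap, repeated on any input
```

The asymmetry is the point: training loops over the data thousands of times, while execution touches each input once, which is why the two stages can favor different hardware.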

Deep learning is currently the most effective algorithm in the AI field, but a deep learning model needs training on large amounts of data to reach good results. The CPU's strengths are handling many kinds of data, strong logical decision-making, and solving individual complex problems. These strengths do not fully match the needs of deep learning, which therefore requires alternative hardware to meet the computing demands of massive data.

Besides the CPU, the mainstream chip types used for AI are GPUs, FPGAs and ASICs.

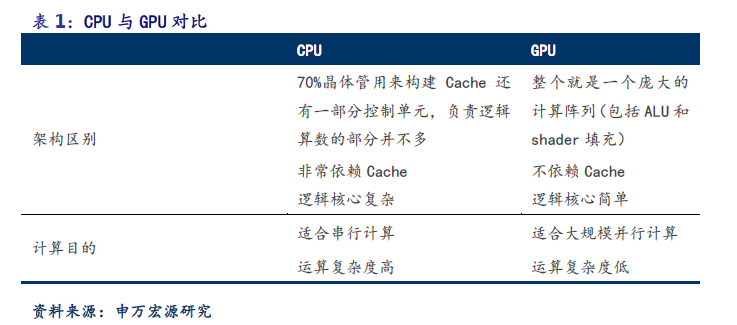

GPU: the first to benefit from surging demand in fields such as security

With a more mature ecosystem, the GPU is the first to benefit from the AI boom. Like a CPU, a GPU is a microprocessor, but one specialized for image operations: it is designed to perform the complex mathematical and geometric calculations that graphics rendering requires. In floating-point arithmetic, parallel computing and certain other workloads, a GPU can deliver tens or even hundreds of times the performance of a CPU. NVIDIA Corp has released related hardware products and software development tools since the second half of 2006 and is currently the leader of the AI hardware market.

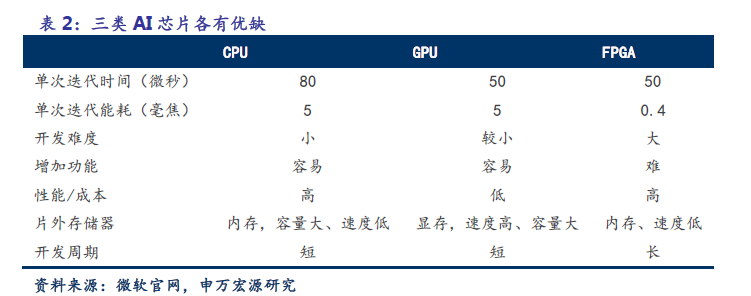

Because the GPU was originally designed as a graphics processor to meet the large-scale parallel computing needs of image processing, it has three limitations when applied to deep learning algorithms:

1. Its parallelism cannot be fully exploited during application. Deep learning involves two computing stages, training and application. GPUs are very efficient at training deep learning algorithms, but in application they can process only one input image at a time, so the advantage of parallelism cannot be fully realized.

2. The hardware is fixed and not programmable. Deep learning algorithms are not yet fully stable, and if they change significantly, a GPU cannot reconfigure its hardware structure as flexibly as an FPGA.

3. Its efficiency in running deep learning algorithms is much lower than that of FPGAs. Academic and industrial studies have shown that the GPU's computing model does not fully match deep learning algorithms, so its peak performance cannot be fully utilized. To achieve the same performance on a deep learning algorithm, a GPU consumes much more power than an FPGA.
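The first limitation is about how much independent work each call hands the hardware. The sketch below is a generic illustration of batching, assuming a single linear layer with made-up weights; it does not model any GPU's scheduler, and because pure Python runs both versions serially it shows only how many independent output values one call exposes, not a speedup.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product; every output cell is independent of the others."""
    inner, cols = len(b), len(b[0])
    assert all(len(row) == inner for row in a), "shape mismatch"
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)] for row in a]


def infer_one_at_a_time(inputs: Matrix, weights: Matrix) -> Matrix:
    # Each input is treated as a 1 x d_in matrix: many small products.
    return [matmul([x], weights)[0] for x in inputs]


def infer_batched(inputs: Matrix, weights: Matrix) -> Matrix:
    # All inputs stacked into a B x d_in matrix: one large product.
    return matmul(inputs, weights)


def outputs_per_call(result: Matrix) -> int:
    """Output cells produced by one product call, i.e. work available to run in parallel."""
    return sum(len(row) for row in result)


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0], [2.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
    w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 3 -> 2 layer
    print(outputs_per_call(matmul([x[0]], w)), outputs_per_call(infer_batched(x, w)))
```

Both paths give identical results; the batched call simply exposes B times as many independent outputs at once, which is what wide parallel hardware needs to stay busy.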

FPGA: the middle ground in energy efficiency

The FPGA is an AI "blank slate" with medium energy efficiency, high flexibility and high cost. FPGA stands for Field Programmable Gate Array, and users can reprogram it repeatedly according to their needs. An FPGA consumes less power than a GPU, and has a shorter development time and lower cost than an ASIC. At present, FPGAs have very large application prospects in two fields: the Industrial Internet and industrial robot equipment.

Industrial big data, cloud computing platforms and MES (manufacturing execution systems), which represent the future direction of manufacturing, are all important platforms supporting industrial intelligence. They must perform complex processing on large volumes of data, and FPGAs can play an important role there.

Compared with GPUs and CPUs, FPGAs offer high performance, low energy consumption and hardware programmability. FPGAs are highly valued: Baidu's new-generation Baidu Brain was developed on an FPGA platform, and companies such as Microsoft Corp and IBM have dedicated FPGA teams for server acceleration. However, FPGAs were not developed specifically for deep learning algorithms and still have many limitations:

1. The computing power of each basic unit is limited. To make reconfiguration possible, an FPGA contains a large number of very fine-grained basic units, but the computing power of each unit (which relies mainly on LUTs, or lookup tables) is much lower than that of the ALU modules in CPUs and GPUs.

2. Speed and power consumption need improvement. Compared with custom ASICs, FPGAs still lag significantly in processing speed and power consumption.

3. FPGAs are relatively expensive. At large production volumes, the unit cost of an FPGA is much higher than that of an ASIC, so FPGAs are better suited to enterprise users, especially in military and industrial electronics, where reconfigurability and high performance are required.
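To make the first limitation concrete, here is a hypothetical software model of LUT-based logic, not any vendor's architecture: a k-input LUT stores one output bit for each of its 2^k input combinations, so it can implement any small Boolean function, but only one bit of it. Building an 8-bit adder from 3-input LUTs takes 16 chained lookups, where a CPU or GPU ALU adds a whole word in one instruction. Real FPGAs add dedicated carry chains and DSP blocks to narrow this gap, which this model ignores; the LUT width of 3 is an assumption chosen for the full-adder example.

```python
from itertools import product
from typing import Callable, List, Tuple

Bits = Tuple[int, ...]


def build_lut(func: Callable[..., int], k: int) -> List[int]:
    """'Program' a k-input LUT: store one output bit per input combination (2**k entries)."""
    table = [0] * (2 ** k)
    for bits in product((0, 1), repeat=k):
        index = int("".join(map(str, bits)), 2)
        table[index] = func(*bits) & 1
    return table


def eval_lut(table: List[int], bits: Bits) -> int:
    """Evaluating a LUT is only an indexed read; the logic lives in the stored bits."""
    return table[int("".join(map(str, bits)), 2)]


# Two 3-input LUTs together form a 1-bit full adder.
SUM_LUT = build_lut(lambda a, b, c: a ^ b ^ c, 3)
CARRY_LUT = build_lut(lambda a, b, c: (a & b) | (a & c) | (b & c), 3)


def add_with_luts(x: int, y: int, width: int = 8) -> Tuple[int, int]:
    """Ripple-carry addition built from LUTs; returns (sum mod 2**width, lookups used)."""
    carry, result, lookups = 0, 0, 0
    for i in range(width):
        a, b = (x >> i) & 1, (y >> i) & 1
        s = eval_lut(SUM_LUT, (a, b, carry))
        carry = eval_lut(CARRY_LUT, (a, b, carry))
        lookups += 2
        result |= s << i
    return result, lookups


if __name__ == "__main__":
    print(add_with_luts(100, 27))
```

Reprogramming the chip in this model means rewriting the stored tables, which is the flexibility FPGAs trade against per-unit computing power.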

ASIC: top energy efficiency, embracing the future

An ASIC (application-specific integrated circuit) is an integrated circuit designed for a particular purpose; as an AI chip built for a specific function, it achieves the best power consumption. Unlike GPUs and FPGAs, which are flexible, a customized ASIC cannot be changed once manufactured, and its high upfront cost and long development cycle create a high barrier to entry. At present, most of the giants that have their own AI algorithms and are strong in chip research and development are involved, Google's TPU being one example.

Another future direction for ASICs is brain-inspired chips. These are ultra-low-power chips based on neuromorphic engineering and on the way the human brain processes information; they are suited to real-time processing of unstructured information, have learning ability, and come closer to the goals of artificial intelligence. Because ASICs can be matched closely to neural network algorithms, they outperform GPUs and FPGAs in performance and power consumption: the TPU1 is rated at 14 to 16 times a traditional GPU, and the NPU at 118 times. Cambricon has released an instruction set for external applications, and ASICs are expected to become the core of AI chips in the future.

GPUs are widely used for training, while ASICs perform well in execution (inference). GPUs, TPUs and NPUs each suit different AI workloads, and different kinds of chips fit different scenarios.

Cambricon: shining in terminals, advancing in the cloud

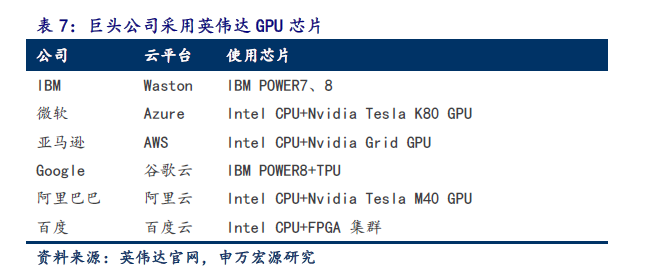

As noted above, AI chips currently divide into cloud (server) chips and terminal (mobile) chips. Cloud AI chips resemble supercomputers, while terminal AI chips place more emphasis on energy consumption. First-mover advantage and peak floating-point performance explain why GPUs currently dominate the cloud.

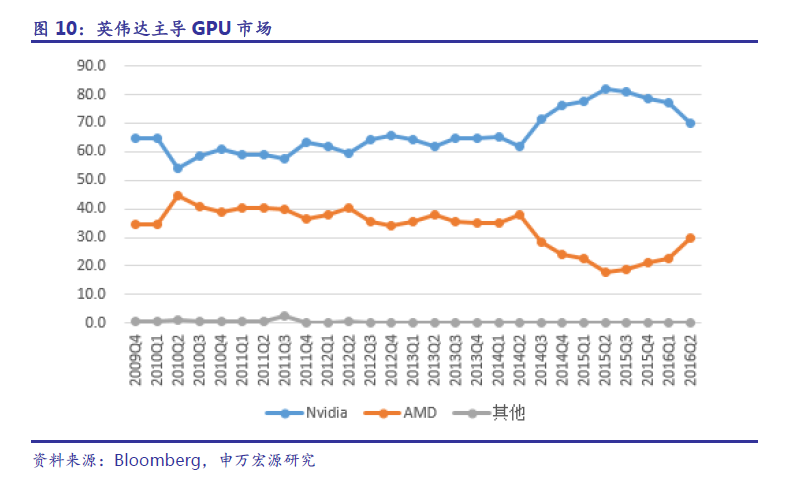

With continuously iterating products, NVIDIA Corp dominates the GPU market: it holds more than 70% of the global GPU market, and GPU products accounted for 84% of the company's revenue in 2016.

Cambricon leads across the board in ASICs: its instruction set is a signature innovation, its terminal applications are steadily expanding, and its NPU has reached commercial deployment.

Mobile phones are the most important mobile terminal products. The A11 Bionic chip in Apple Inc's iPhone X includes a neural-network engine (the "Neural Engine") that processes facial data for Face ID. In September 2017 Huawei released what it called the world's first mobile AI chip, the Kirin 970, and used it in the Mate 10 phone. The chip is the result of deep cooperation between Huawei and Cambricon and integrates an NPU dedicated to neural networks.

According to test data released by Huawei, the Kirin 970's HiAI heterogeneous computing architecture delivers roughly 50 times the energy efficiency and 25 times the performance when handling the same AI tasks. On September 4, media reports disclosed a congratulatory letter from the Chinese Academy of Sciences to Huawei, stating that the Cambricon-1A deep learning processor, developed by Cambricon with independent intellectual property rights, achieves more than 25 times the performance and 50 times the energy efficiency of a 4-core CPU in AI applications. The Kirin 970 integrates the Cambricon-1A as its core AI processing unit, enabling local, real-time and efficient intelligent processing on the phone. (editor: Hu Min)